Meta releases open-source pre-trained code generation model for 'multi-token predictions'

Typically, most large-scale language models (LLMs) perform the task of 'predicting the next word,' and output one piece of data (token) at a time. In response to this, Meta proposed an approach called 'multi-token prediction' in its April 2024

In April we published a paper on a new training approach for better & faster LLMs using multi-token prediction. To enable further exploration by researchers, we've released pre-trained models for code completion using this approach on @HuggingFace ⬇️ https://t.co/OnUsGcDpYx

— AI at Meta (@AIatMeta) July 3, 2024

facebook/multi-token-prediction · Hugging Face

https://huggingface.co/facebook/multi-token-prediction

Meta drops AI bombshell: Multi-token prediction models now open for research | VentureBeat

https://venturebeat.com/ai/meta-drops-ai-bombshell-multi-token-prediction-models-now-open-for-research/

Meta open-sources new 'multi-token prediction' language models - SiliconANGLE

https://siliconangle.com/2024/07/04/meta-open-sources-new-multi-token-prediction-language-models/

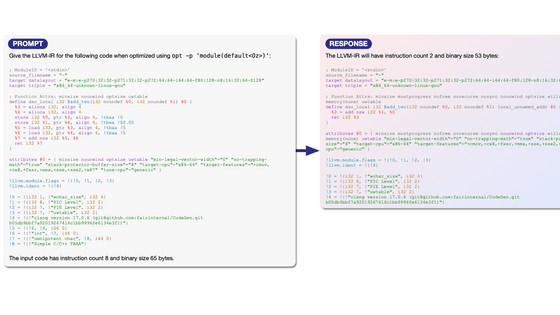

Most large-scale language models output one token at a time, which is simple and scalable, but has the drawback of requiring huge amounts of data for training and being inefficient. In contrast, multi-token prediction proposed by Meta generates multiple tokens at once, which is said to improve the performance and training efficiency of large-scale language models.

Meta open-sourced four pre-trained large-scale language models implementing multi-token prediction to Hugging Face on July 4. The four models focus on code generation tasks and each has 7 billion parameters.

According to technology media SiliconANGLE, each model outputs four tokens at a time. It is unclear why multi-token prediction produces higher quality code than traditional approaches, but Meta believes that multiple token generation may alleviate the limitations of a technique called ' teacher-forcing ' used to train large-scale language models.

Meta tested the performance of the multi-token prediction model on the coding task benchmarks MBPP and HumanEval , and found that it performed 17% better on MBPP and 12% better on HumanEval than conventional large-scale language models, and output speed was three times faster.

Technology media VentureBeat points out that multi-token prediction not only improves the efficiency of large-scale language models, but also has the potential to bridge the gap between humans and AI by enabling them to understand language structure and context with greater accuracy. On the other hand, it also lowers the barrier to potential misuse, such as the generation of misinformation and cyber attacks using AI, so there are both advantages and disadvantages to releasing advanced AI tools as open source.

Related Posts:

in Software, Web Service, Posted by log1h_ik