It has been pointed out that GPT-3, which can generate highly accurate text, is 'no longer the only option as a language model.'

GPT-3 is No Longer the Only Game in Town --Last Week in AI

https://lastweekin.ai/p/gpt-3-is-no-longer-the-only-game

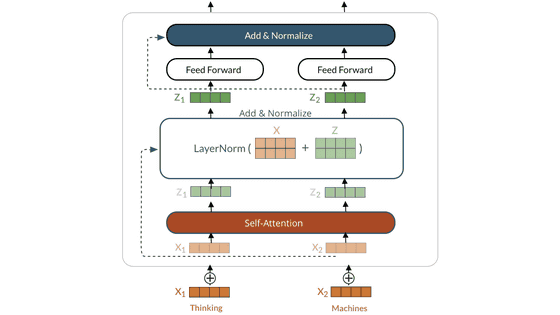

Introduced in June 2020, GPT-3 has inspired AI researchers and software developers around the world. The paper introducing GPT-3 had received more than 2,000 citations at the time of writing, and OpenAI says that more than 300 applications were using GPT-3 as of May 2021. GPT-3 has also drawn attention for other reasons: an article written by GPT-3 reached number one on a social news site, and it has been pointed out that the model exhibits an anti-Islamic bias.

However, Last Week in AI said, 'People's ability to build things based on GPT-3 has been hampered by one major factor: GPT-3 was not released to the public. Instead, OpenAI chose to commercialize GPT-3 and provide access only via a paid API.' While this makes sense given OpenAI's commercial interests, it ran contrary to the common practice among AI researchers of letting other researchers build on one's work.

As a result, multiple organizations have been developing language models that compete with GPT-3, and according to Last Week in AI, several GPT-3-like language models had already been developed at the time of writing.

There are some obstacles to overcome in order to develop one's own GPT-3. The first is computational power: GPT-3's 175 billion parameters alone take up 350GB, so running the model requires a large number of GPUs.
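The 350GB figure is consistent with storing each parameter in 2 bytes (16-bit floating point). The article does not state the precision, so that is an assumption, as is the 40GB of memory per GPU used below purely for illustration. A minimal sketch of the arithmetic:

```python
import math


def model_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Size of the weights alone, in decimal gigabytes.

    bytes_per_param=2 assumes 16-bit floats (an assumption, not stated
    in the article). Activations and optimizer state are ignored.
    """
    return num_params * bytes_per_param / 1e9


def min_gpus(memory_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(memory_gb / gpu_memory_gb)


GPT3_PARAMS = 175_000_000_000  # 175 billion, as quoted in the article
HYPOTHETICAL_GPU_GB = 40.0     # illustrative value, not from the article

weights_gb = model_memory_gb(GPT3_PARAMS)
print(weights_gb)                                 # 350.0
print(min_gpus(weights_gb, HYPOTHETICAL_GPU_GB))  # 9
```

This counts only the memory needed to hold the weights; training needs considerably more, so the GPU count here is a lower bound under the stated assumptions.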

In addition, training a language model requires a huge amount of training data, and GPT-3 was trained on as much as 45TB of data collected from across the Internet. Taken together, training GPT-3 can cost between $10 million and $20 million (approximately ¥1,135 million to ¥2,271 million).

Nevertheless, multiple teams are developing new language models that can be described as GPT-3-like. One of them is 'GPT-Neo', announced by EleutherAI, an open source project run by researchers. EleutherAI created GPT-Neo, a smaller version of GPT-3, using a dataset called the Pile that is similar to the one used to train GPT-3, and the latest version, 'GPT-J-6B', has 6 billion parameters. 'Surprisingly, one of the earliest efforts to announce results (in developing a language model like GPT-3) was not a company with huge assets like OpenAI, but a grassroots effort by volunteers,' says Last Week in AI.

'GPT-Neo' aiming for a language model with performance close to 'GPT-3' with open source --GIGAZINE

In addition, a research team from Tsinghua University in China and the Beijing Academy of Artificial Intelligence (BAAI) announced a language model called 'Chinese Pretrained Language Model (CPM)' six months after GPT-3 was announced. It has 2.6 billion parameters and was trained on 100GB of Chinese text, which is far from the scale of GPT-3, but it is better suited to use in Chinese. Huawei has also announced a language model called 'PanGu-α', which was trained on 1.1TB of Chinese text and has 200 billion parameters.

Similar efforts are spreading outside of China: South Korea's Naver has released 'HyperCLOVA', a language model with 204 billion parameters, and Israel's AI21 Labs has announced 'Jurassic-1' with 178 billion parameters. In addition, NVIDIA and Microsoft announced Megatron-Turing NLG, with 530 billion parameters, in October 2021. A larger parameter count does not necessarily mean higher performance, but it is clear that the number of large-scale language models competing with GPT-3 is increasing, and that number may grow further over the next few years.

Last Week in AI pointed out, 'Given how powerful these language models are, this trend is exciting, but it is also concerning that creating these models takes companies with large financial resources.' AI researchers at Stanford University have launched the 'Center for Research on Foundation Models' to study GPT-3 and similar foundation models, and are said to be investigating the problems involved in developing large-scale language models. 'It's unclear how long this trend of scaling up language models will continue and whether there will be new discoveries beyond GPT-3, but for now we are in the middle of this journey, and it will be very interesting to see what happens in the next few years,' said Last Week in AI.
