It turns out that 'GPT-3', the AI that can automatically generate ultra-high-precision text, outputs more than 4.5 billion words per day

OpenAI, the AI research organization, has revealed that its text-generating AI 'GPT-3' now produces an average of 4.5 billion words per day.

GPT-3 Powers the Next Generation of Apps

https://openai.com/blog/gpt-3-apps/

OpenAI's text-generating system GPT-3 is now spewing out 4.5 billion words a day --The Verge

https://www.theverge.com/2021/3/29/22356180/openai-gpt-3-text-generation-words-day

One of the biggest trends in machine learning is text generation, and many AI systems try to improve their output by learning from the vast amount of text on the Internet. AI text generation has advanced further than many other fields: it can already write passages that read like human-written blog posts, and it has conversed with people on the bulletin board Reddit without anyone noticing.

The best-known AI text generator is OpenAI's 'GPT-3'. OpenAI has released an API that makes GPT-3 easy to use; it was initially offered free of charge, but it has since become a paid API.

OpenAI makes an AI model built on the ultra-high-precision language model 'GPT-3' available as an API, and there is also a demo video that searches Wikipedia content by asking 'questions' --GIGAZINE

OpenAI's first commercial product, the GPT-3 API, has been used by more than 300 different applications in just nine months since its launch, generating an average of 4.5 billion words per day.

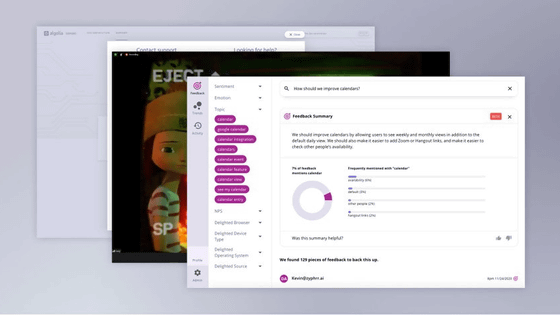

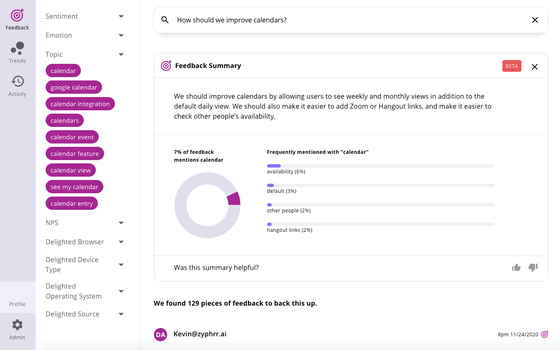

Viable, a tool that analyzes customer feedback, uses GPT-3 to identify emotions and themes in text such as chat logs and reviews and to give users insights. For example, when asked, 'What factors frustrate customers about payment?', Viable answered, 'Customers are dissatisfied with the payment process because it takes too long to load. They also want to be able to edit their address at checkout and save multiple payment methods.' 'With GPT-3, we can help marketing teams better understand the customer experience, customer needs, and more,' said Daniel Erickson, CEO of Viable.

In addition, the digital content studio 'Fable Studio' uses GPT-3 for Lucy, the main character of the VR game 'Wolves in the Walls', to enable natural dialogue between the player and Lucy. 'GPT-3 has given us the ability to bring our characters to life. I'm excited to combine an artist's vision with AI and emotional intelligence to create powerful stories,' said Edward Saatchi, CEO of Fable Studio, emphasizing that GPT-3 plays an important role in 'Wolves in the Walls'.

You can watch an actual dialogue between the player and Lucy in the following video.

Lucy Premieres at Sundance --Highlights on Vimeo

While OpenAI widely advertises the growing number of use cases for the GPT-3 API, the technology outlet The Verge points out that language models, like other algorithms, tend to absorb harmful biases. In fact, a research paper has been published showing that GPT-3 exhibits an anti-Islamic bias.

It turns out that the ultra-high-precision text generation AI 'GPT-3' has an anti-Islamic bias --GIGAZINE

For its part, OpenAI states that, to prevent bias and misuse, it reviews every application built on the GPT-3 API before giving final approval for its use. Developers are also required to implement safety measures such as rate limiting, user verification, and human-in-the-loop review before moving to a production environment. OpenAI further says it has formed a 'red team' that actively looks for misuse and application vulnerabilities, and stresses that it conducts rigorous evaluations.
