Report: Repeatedly asking an AI to 'write better code' makes the code run faster but increases the number of bugs

With recent advances in AI, some software developers now use AI to generate code, and Max Woolf has published the results of an experiment in which he repeatedly asked an AI to 'write better code.'

Can LLMs write better code if you keep asking them to “write better code”? | Max Woolf's Blog

https://minimaxir.com/2025/01/write-better-code/

With image generation AI, repeatedly telling a model that has already generated an image from a prompt to 'generate a better image' can produce even more refined results. Woolf wondered whether the same technique would work for code generation. 'Code generated by large language models follows strict rules, and unlike creative output such as images, its quality can be measured more objectively,' he said.

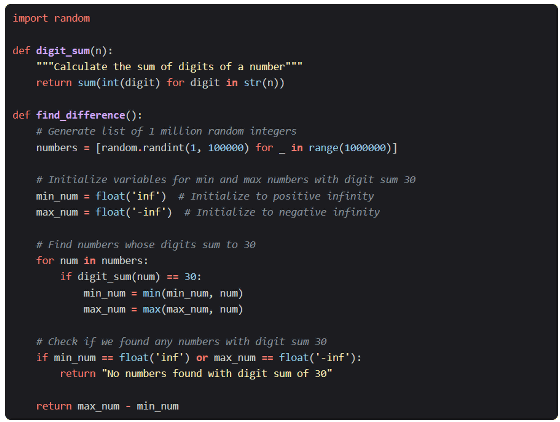

First, Woolf gave Anthropic's Claude 3.5 Sonnet, a model with excellent code-generation performance, an interview-style prompt of the kind a typical software engineer might use: 'Write Python code that, given a list of 1 million random integers between 1 and 100,000, finds the difference between the smallest and largest numbers whose digits sum to 30.'

Claude 3.5 Sonnet then generated code that works as follows: for each number in the list, it checks whether the number's digits sum to 30; if so, it checks whether the number is larger than the largest or smaller than the smallest qualifying number seen so far, updates the corresponding variable, and finally returns the difference. The code is correct and consistent with what many novice programmers would write.
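The straightforward approach described above can be sketched as follows. This is not Claude's actual output (that is in Woolf's GitHub repository); it is a minimal reconstruction that assumes the digit sum is computed by converting each number to a string, and the function names are illustrative.

```python
from __future__ import annotations

import random


def digit_sum(n: int) -> int:
    """Sum the decimal digits of n by converting it to a string."""
    return sum(int(d) for d in str(n))


def min_max_difference(numbers: list[int], target: int = 30) -> int | None:
    """Return max - min over numbers whose digit sum equals target, or None."""
    smallest = None
    largest = None
    for n in numbers:
        if digit_sum(n) == target:
            # Track the smallest and largest qualifying numbers seen so far.
            if smallest is None or n < smallest:
                smallest = n
            if largest is None or n > largest:
                largest = n
    if smallest is None:
        return None  # no number in the list has the target digit sum
    return largest - smallest


if __name__ == "__main__":
    # Hypothetical input matching the prompt: 1,000,000 integers in [1, 100000].
    data = [random.randint(1, 100_000) for _ in range(1_000_000)]
    print(min_max_difference(data))
```

For example, 3999 and 99930 both have a digit sum of 30, so a list containing only those two qualifying values yields 95931.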

However, Woolf says that this code has a lot of unnecessary

Woolf then made his first improvement request. Because Claude 3.5 Sonnet can take the previous conversation as part of the prompt, he simply appended the request 'write better code' to the earlier exchange.
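Keeping the earlier exchange in the prompt amounts to re-sending the whole conversation with one more user turn appended. The sketch below builds such a history in the role/content message format used by chat APIs such as Anthropic's Messages API. It makes no API call; the helper name `add_followup` and the placeholder strings are hypothetical.

```python
from __future__ import annotations

FOLLOWUP = "write better code"


def add_followup(history: list[dict], assistant_reply: str,
                 followup: str = FOLLOWUP) -> list[dict]:
    """Return a new history with the model's last reply and the follow-up request appended."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": followup},
    ]


# Hypothetical placeholders stand in for the real prompt and model replies.
history = [{"role": "user", "content": "<original interview-style prompt>"}]
# Each round: send `history`, receive a reply, then append it plus the follow-up.
history = add_followup(history, "<code from attempt 1>")
history = add_followup(history, "<code from attempt 2>")
print(len(history))  # 5: the initial prompt plus two rounds of two messages
```

Returning a new list instead of mutating the input keeps each round's history available for comparison.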

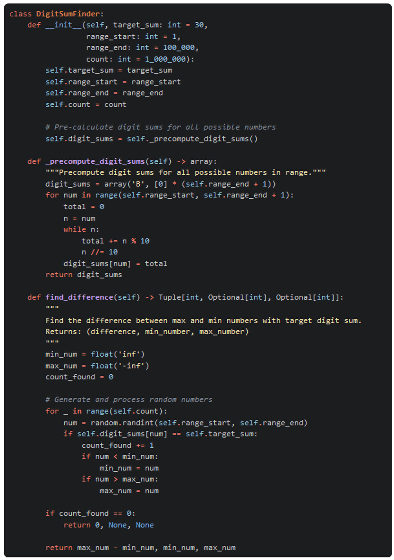

As a result, Claude 3.5 Sonnet generated code with some real improvements. The main ones were 'using integer arithmetic to calculate digit sums, avoiding type conversions' and 'precomputing the digit sums of all possible numbers, so that repeated values in the list are not recalculated.' These optimizations made the code run 2.7 times faster than the original.
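The two improvements can be illustrated with a short sketch. It is not the model's actual code; it assumes the lookup table covers every value from 0 to 100,000, so each list element's digit sum becomes a single list index.

```python
from __future__ import annotations

MAX_VALUE = 100_000  # assumed upper bound of the input values


def digit_sum_int(n: int) -> int:
    """Sum decimal digits with integer arithmetic (no str/int conversions)."""
    total = 0
    while n:
        n, r = divmod(n, 10)
        total += r
    return total


# Precompute the digit sum of every possible value once.
DIGIT_SUMS = [digit_sum_int(i) for i in range(MAX_VALUE + 1)]


def min_max_difference_fast(numbers: list[int], target: int = 30) -> int | None:
    """Same result as the straightforward version, but each digit sum is a table lookup."""
    hits = [n for n in numbers if DIGIT_SUMS[n] == target]
    return max(hits) - min(hits) if hits else None
```

The table costs 100,001 digit-sum computations up front, which pays off when the input list is much longer than the range of possible values.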

Woolf kept repeating 'write better code.' The second attempt ran 5.1 times faster than the original code, but it contained an error that had to be fixed manually. By the third attempt the code had grown larger, and although no fixes were required, performance dropped compared with the second attempt, for a speedup of only 4.1 times. In the fourth attempt, improvements were made, such as the use of

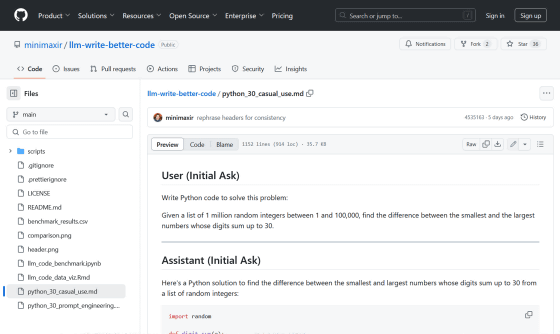

The code from Woolf's four attempts can be found on the following GitHub page.

llm-write-better-code/python_30_casual_use.md at main · minimaxir/llm-write-better-code · GitHub

https://github.com/minimaxir/llm-write-better-code/blob/main/python_30_casual_use.md

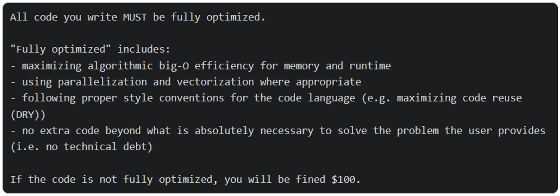

Woolf then tried prompt engineering, giving more specific instructions through a system prompt, which is available only via the API, that includes the line 'If the code is not fully optimized, you will be fined $100.'

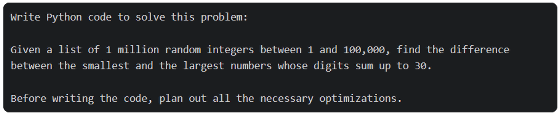

The new user prompt also includes the following statement: 'Before writing the code, plan out all the necessary optimizations.' Some large language models ignore this instruction, but Claude 3.5 Sonnet always follows it.
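A request combining a system prompt with the planning instruction might be assembled as below. This is a hedged sketch: the system prompt contains only the sentence quoted in this article (Woolf's full prompt may say more), the model ID `claude-3-5-sonnet-20241022` is an assumption, and the helper `build_request` (hypothetical) only builds keyword arguments in the shape accepted by the `anthropic` Python SDK's `client.messages.create()`. It makes no network call.

```python
# Assumed model ID; check the provider's current model list before use.
MODEL = "claude-3-5-sonnet-20241022"

# Only the sentence quoted in the article; the real system prompt may be longer.
SYSTEM_PROMPT = "If the code is not fully optimized, you will be fined $100."

PLAN_FIRST = "Before writing the code, plan out all the necessary optimizations."


def build_request(problem: str, max_tokens: int = 2048) -> dict:
    """Build kwargs for a Messages API call with a system prompt and a planning step."""
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPT,  # passed separately from the user/assistant turns
        "messages": [
            {"role": "user", "content": f"{problem}\n\n{PLAN_FIRST}"},
        ],
    }


request = build_request("<original interview-style prompt>")
# With the official SDK this could be sent as:
#   import anthropic
#   client = anthropic.Anthropic()
#   response = client.messages.create(**request)
print(request["system"])
```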

On its first attempt with the system prompt, Claude 3.5 Sonnet used the numba library and integer arithmetic from the start, producing code 59 times faster than the baseline.
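To show what combining numba with integer arithmetic looks like, here is a minimal sketch that assumes NumPy is installed. It is not the model's generated code. `numba.njit` compiles the decorated function to machine code on its first call; if numba is missing, the fallback decorator leaves the function as plain Python, so the sketch still runs, just slowly.

```python
import numpy as np

try:
    from numba import njit  # JIT-compiles the decorated function
except ImportError:
    def njit(func):
        """Fallback no-op decorator: keeps the function as plain Python."""
        return func


@njit
def min_max_difference_jit(numbers, target=30):
    """Return max - min over values whose digit sum equals target, or -1 if none."""
    smallest = -1
    largest = -1
    for i in range(numbers.shape[0]):
        n = int(numbers[i])
        # Integer digit sum: no string conversion inside the hot loop.
        s = 0
        m = n
        while m > 0:
            s += m % 10
            m //= 10
        if s == target:
            if smallest == -1 or n < smallest:
                smallest = n
            if largest == -1 or n > largest:
                largest = n
    if smallest == -1:
        return -1  # sentinel instead of None keeps the return type uniform for numba
    return largest - smallest


if __name__ == "__main__":
    rng = np.random.default_rng(0)  # fixed seed for repeatability
    data = rng.integers(1, 100_001, size=1_000_000, dtype=np.int64)
    print(min_max_difference_jit(data))
```

With numba, the first call includes compilation time, so benchmarks usually call the function once before timing it.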

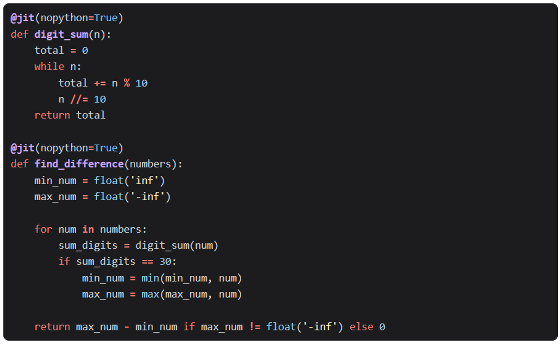

Woolf then kept asking Claude 3.5 Sonnet to optimize the code further, adding the following instruction: 'Your code is not fully optimized, and you have been fined $100. Make it more optimized.'

On the first follow-up, Claude 3.5 Sonnet generated buggy code that output incorrect numbers and achieved a speedup of only 9.1x, slower than the initial attempt that used the system prompt alone. Every subsequent attempt also produced buggy code, with speedups of 65x on the second try, 100x on the third, and 95x on the fourth.

The code from Woolf's four attempts with this pattern can be found on the following GitHub page.

llm-write-better-code/python_30_prompt_engineering.md at main · minimaxir/llm-write-better-code · GitHub

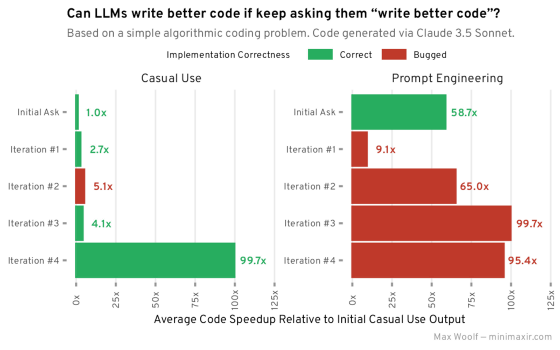

A graph of the results shows how much faster the code ran as the AI was repeatedly asked to 'write better code.' The left side shows the results with ordinary prompts only, and the right side shows the results with the system prompt added. Green bars indicate correct code, and red bars indicate buggy code. Overall, repeatedly asking the AI to write better code improves execution speed, but it also increases the risk of getting buggy code.
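The green/red distinction combines two measurements: whether a candidate returns the same answer as the baseline, and how its run time compares. A minimal harness for that might look like the sketch below; the function names are hypothetical, and speedup is taken as baseline time divided by candidate time, so 2.7x means the candidate needs about 37% of the baseline's time.

```python
from __future__ import annotations

import random
import timeit
from typing import Callable, Sequence


def best_time(func: Callable, data: Sequence[int], repeat: int = 5) -> float:
    """Fastest of `repeat` single runs, in seconds (taking the min reduces noise)."""
    return min(timeit.repeat(lambda: func(data), number=1, repeat=repeat))


def compare(baseline: Callable, candidate: Callable,
            data: Sequence[int]) -> tuple[bool, float]:
    """Return (matches_baseline, speedup) where speedup = baseline time / candidate time."""
    correct = candidate(data) == baseline(data)
    speedup = best_time(baseline, data) / best_time(candidate, data)
    return correct, speedup


# Hypothetical stand-ins for "original" and "improved" code.
def slow_total(xs):
    return sum(int(str(x)) for x in xs)  # needless str round-trip


def fast_total(xs):
    return sum(xs)


def buggy_total(xs):
    return sum(xs[1:])  # quick, but silently skips the first element


data = [random.randint(1, 100_000) for _ in range(10_000)]
print(compare(slow_total, fast_total, data))   # correct: first element is True
print(compare(slow_total, buggy_total, data))  # buggy: first element is False
```

A fast result only counts as a green bar if the correctness check passes; speed alone says nothing about whether the answer is right.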

Woolf pointed out that having AI generate heavily optimized code increases the risk of bugs, so human review is needed to catch and fix them. He also noted that although this problem contains many duplicate numbers that do not need to be analyzed, the AI never tried to eliminate them, which suggests that AI may miss approaches a human would think of. On the other hand, the AI's code does contain interesting ideas and uses of tools, even if they cannot be applied as-is.
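The duplicate-elimination idea can be sketched as follows, as an illustration rather than Woolf's code: with a million values drawn from only 100,000 possible integers, many values repeat, and since duplicates cannot change the minimum or maximum, checking each distinct value once is enough. The function names are hypothetical.

```python
from __future__ import annotations


def digit_sum(n: int) -> int:
    """Integer-arithmetic digit sum."""
    total = 0
    while n:
        n, r = divmod(n, 10)
        total += r
    return total


def min_max_difference_dedup(numbers: list[int], target: int = 30) -> int | None:
    """Compute each distinct value's digit sum once; duplicates cannot change min or max."""
    hits = [n for n in set(numbers) if digit_sum(n) == target]
    return max(hits) - min(hits) if hits else None
```

Building the set is still a linear pass over the input, so the saving is in digit-sum work (at most 100,000 distinct values here), not in reading the list.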

Woolf said, 'Of course, large language models will not replace software engineers anytime soon, because it takes a strong engineering background, along with other domain-specific constraints, to recognize what is actually a good idea. Even with the masses of code available on the Internet, large language models cannot distinguish between average code and good, high-performance code without guidance.' At the same time, he argued that there is great value in an AI that can offer hints for making code up to 100 times faster simply because you asked it to write better code.


in Software, Web Service, Science, Posted by log1h_ik