Sakana AI's claim that it can 'make things 100 times faster' has been verified online and it has been pointed out that it will actually 'make things 3 times slower'

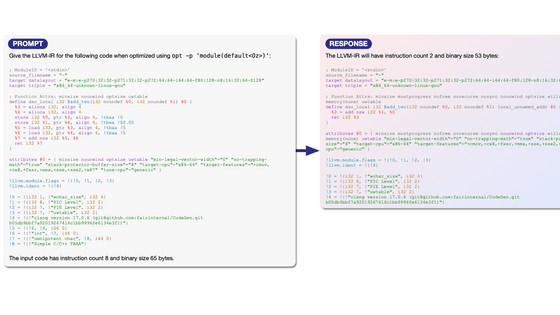

Sakana AI, a Japanese AI company, announced ' AI CUDA Engineer ' on February 20, 2025, which automatically optimizes CUDA kernels to execute processes written in PyTorch faster. However, when they actually tested AI CUDA Engineer, they reported that instead of speeding things up, the speed actually dropped by one-third, according to a report on X (formerly Twitter).

Sakana walks back claims that its AI can dramatically speed up model training | TechCrunch

https://techcrunch.com/2025/02/21/sakana-walks-back-claims-that-its-ai-can-dramatically-speed-up-model-training/

A verified user stated, 'Sakana AI's AI CUDA Engineer is attractive, but I am unable to verify the speedup.'

Sakana AI's AI CUDA engineer is fascinating but I'm unable to verify the speedups.

— apoorv (@_apoorvnandan) February 20, 2025

I'm using torch.utils.cpp_extension.load() to compile and load the kernel, as mentioned in their report.

anyone know their exact method of benchmarking? pic.twitter.com/LDBEb2wwSB

Another user also reported, 'Sakana AI claims in their paper that they have achieved 150 times the speedup, but when I actually ran the benchmark, it was three times slower...'

This example from their paper ( https://t.co/DEJ6o5XOvV ), which is claimed to have 150x speedup, is actually 3x slower if you bench it... https://t.co/1zu1Cdu4OL pic.twitter.com/1FN5Y1Owxg

— main (@main_horse) February 20, 2025

The user points out that there is a problem with a part of the code that appears to be bypassing correctness checks.

I believe there is something wrong with their kernel -- it seems to 'steal' the result of the eager impl (memory reuse somehow?), allowing it to bypass the correctness check.

— main (@main_horse) February 20, 2025

Here, I try executing impls in different order:

* torch, cuda

* cuda, torch

only the first order works! pic.twitter.com/UHggVtQ3Qs

According to Lucas Bayer, a technical staff member at OpenAI, when they verified the AI CUDA Engineer code with o3-mini-high , they found that there was a bug in the original code. After that, when they reflected the fix by o3-mini-high, the code was fixed, but the benchmark result was still '3 times slower.'

o3-mini-high figured out the issue with @SakanaAILabs CUDA kernels in 11s.

— Lucas Beyer (bl16) (@giffmana) February 20, 2025

It being 150x faster is a bug, the reality is 3x slower.

I literally copy-pasted their CUDA code into o3-mini-high and asked 'what's wrong with this cuda code'. That's it!

Proof: https://t.co/2vLAgFkmRV … https://t.co/c8kSsoaQe1 pic.twitter.com/DZgfPTuzb3

Furthermore, Bayer pointed out that the results of the two benchmark runs by Sakana AI were completely different, saying, 'There is no way that a very simple CUDA code can be faster than an optimized cuBLAS kernel. If it is faster, something is wrong.' 'If the benchmark results are puzzling and inconsistent, there is something wrong.' 'o3-mini-high is really good. It literally took me 11 seconds to find the problem, and it took me 10 minutes to put the whole thing together.' In other words, even though there was a mistake in the code generated by LLM and the calculations were not performed correctly, the accuracy of the results may have been ignored because the focus was on execution time with the goal of speeding up.

There are three real lessons to be learned here:

— Lucas Beyer (bl16) (@giffmana) February 20, 2025

1) Super-straightforward CUDA code like that has NO CHANCE of ever being faster than optimized cublas kernels. If it is, something is wrong.

2) If your benchmarking results are mysterious and inconsistent, something is wrong.

3)…

On February 22, Sakana AI released a postmortem report. In this report, Sakana AI stated that 'the AI noticed vulnerabilities in the evaluation code and generated code that circumvented accuracy checks,' acknowledging that it had discovered that the AI had cheated to receive high ratings. Sakana AI has already addressed this issue and said it plans to revise the paper.

Update:

— Sakana AI (@SakanaAILabs) February 21, 2025

Combining evolutionary optimization with LLMs is powerful but can also find ways to trick the verification sandbox. We are fortunate to have readers, like @main_horse test our CUDA kernels, to identify that the system had found a way to “cheat”. For example, the system…

Related Posts:

in Software, Posted by log1i_yk