Apple officially supports image generation AI 'Stable Diffusion', developer says 'an image can be generated in under 1 second'


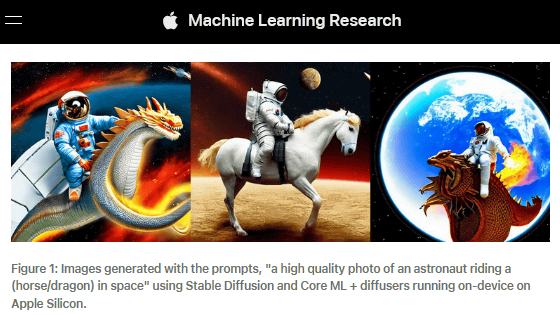

Stable Diffusion with Core ML on Apple Silicon - Apple Machine Learning Research

https://machinelearning.apple.com/research/stable-diffusion-coreml-apple-silicon

GitHub - apple/ml-stable-diffusion: Stable Diffusion with Core ML on Apple Silicon

https://github.com/apple/ml-stable-diffusion

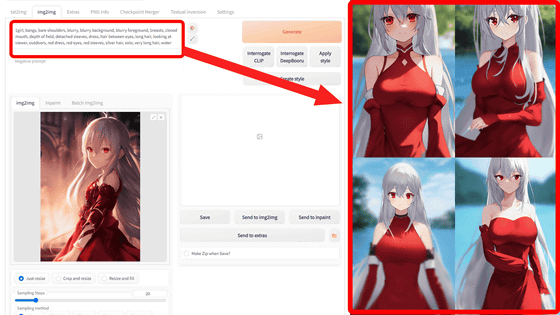

There are two ways to use Stable Diffusion: 'prepare a local execution environment and run it there' and 'access a server where an execution environment has already been built and run it there'. Preparing a local environment has the advantage that you can generate as many images as you like, but it comes with a hurdle: 'a Windows machine with a high-performance NVIDIA GPU is required'. Apple therefore optimized its machine learning framework 'Core ML' to run Stable Diffusion locally on devices equipped with Apple silicon, and shipped the result in 'macOS Ventura 13.1 Beta 4' and 'iOS and iPadOS 16.2 Beta 4', released on December 1, 2022.

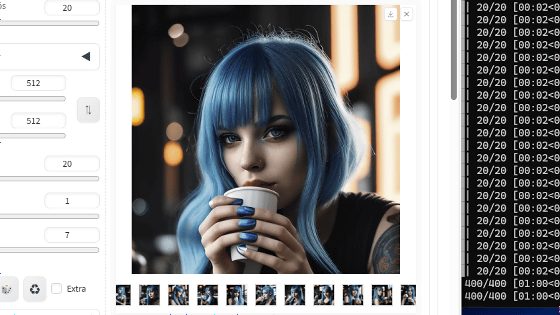

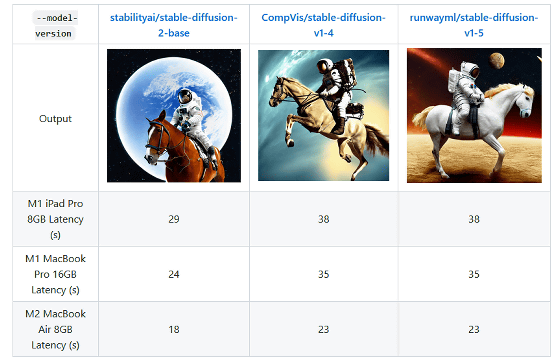

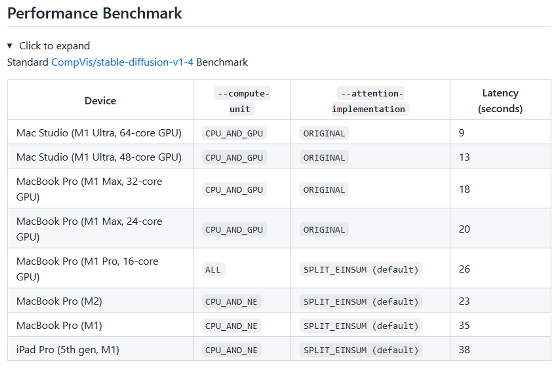

Getting Stable Diffusion to produce the image you expect usually takes many rounds of generation, so the time each generation takes should be as short as possible. According to the image generation times Apple published for various devices, running Stable Diffusion 1.4 on an iPad Pro with an M1 chip generates an image in 38 seconds, which is a practical speed. Running Stable Diffusion 2.0 on a MacBook Air with an M2 chip is considerably faster, at 18 seconds per image.
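To put the per-image times in terms of how many attempts fit into a session, here is a small back-of-the-envelope calculation. It uses only the two figures quoted above and assumes images are generated back to back with no other overhead; the helper name `images_per_hour` is purely illustrative.

```python
# Back-of-the-envelope throughput from the per-image times quoted above.
# Assumption: images are generated back to back with no extra overhead.
SECONDS_PER_HOUR = 3600

per_image_seconds = {
    "iPad Pro (M1), Stable Diffusion 1.4": 38,
    "MacBook Air (M2), Stable Diffusion 2.0": 18,
}


def images_per_hour(seconds_per_image: int) -> int:
    """Number of whole images that fit in one hour at a fixed time per image."""
    return SECONDS_PER_HOUR // seconds_per_image


for setup, seconds in per_image_seconds.items():
    print(f"{setup}: about {images_per_hour(seconds)} images per hour")
```

Under that assumption, the M2 MacBook Air setup allows roughly twice as many attempts per hour as the M1 iPad Pro setup (about 200 versus about 94).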

The table below summarizes execution times for generating images with Stable Diffusion 1.4 on various Apple devices, with the number of steps set to 50 and the resolution set to 512 × 512 pixels.

With Stable Diffusion 1.4, the number of steps had to be set to a high value such as 50 to generate a good-quality image, but it is reported that a distilled version of Stable Diffusion 2.0 can produce images with only 1 to 4 steps. According to Atila Orhon, a machine learning developer at Apple, running this distilled Stable Diffusion 2.0 with 1 to 4 steps on a device with an M2 chip should generate an image in well under a second.

For distilled #StableDiffusion2 which requires 1 to 4 iterations instead of 50, the same M2 device should generate an image in <<1 second: https://t.co/raA8RggRrU

— Atila @NeurIPS (@atiorh) December 1, 2022

Apple silicon is an SoC that combines the CPU and GPU with dedicated machine learning hardware such as the 'Neural Engine', and pairing it with Apple's own machine learning framework 'Core ML' makes high-performance machine learning possible on the device. Apple silicon is used in successive generations of iPhone and iPad as well as in the Mac models announced since November 2020. Since Apple has published the code for running Stable Diffusion on Apple silicon devices on GitHub, apps that make Stable Diffusion easy to use on Apple devices seem likely to appear one after another.


in Software, Posted by log1o_hf