What is 'grokking', the secret to dramatically improving the performance of generative AI?

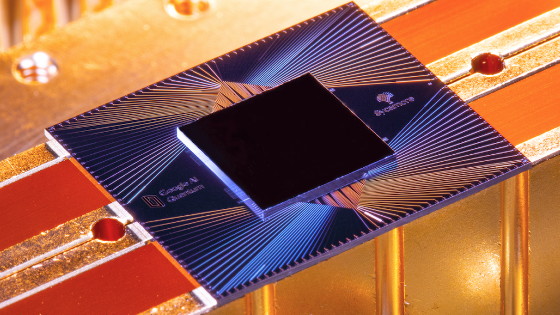

Google's AI researchers have investigated the relationship between phase changes and "grokking".

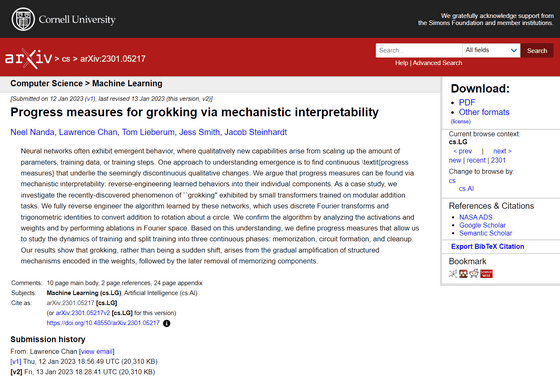

[2301.05217] Progress measures for grokking via mechanistic interpretability

https://arxiv.org/abs/2301.05217

A Mechanistic Interpretability Analysis of Grokking - AI Alignment Forum

https://www.alignmentforum.org/posts/N6WM6hs7RQMKDhYjB/a-mechanistic-interpretability-analysis-of-grokking

Neural networks often exhibit new behavior when their parameters, training data, or training steps are scaled up. One approach to understanding this is to find continuous measures of progress that underlie the seemingly discontinuous qualitative changes.

Neel Nanda of Google DeepMind, an AI research company under Google, believes that such "measures of progress" can be found by reverse engineering the learned behavior into its individual components. He and his colleagues are investigating a phenomenon called "grokking", which was discovered by OpenAI.

I've spent the past few months exploring @OpenAI 's grokking result through the lens of mechanistic interpretability. I fully reverse engineered the modular addition model, and looked at what it does when training. So what's up with grokking? A 🧵... (1/17) https://t.co/AutzPTjz6g

— Neel Nanda (@NeelNanda5) August 15, 2022

The research team describes grokking, the phenomenon discovered by OpenAI, as follows: "A small AI model trained to perform a simple task such as modular addition first memorizes the training data, but after a long time it suddenly starts generalizing to unseen data." Generalization here means applying what was learned in training to answer new problems.
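The difference between memorizing (recording) the training data and generalizing can be sketched with a toy version of the modular addition task. This is only an illustration, not the research team's code: the modulus `P`, the training fraction, and the helper names are assumptions that do not come from this article, and a lookup table stands in for a model that has only memorized.

```python
import random

P = 113               # modulus; assumed value for this sketch
TRAIN_FRACTION = 0.3  # share of all pairs used for training; assumed


def make_dataset(p):
    """Return every input pair (a, b) with its label (a + b) mod p."""
    return [((a, b), (a + b) % p) for a in range(p) for b in range(p)]


def split(data, fraction, seed=0):
    """Shuffle deterministically and split into train and held-out sets."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * fraction)
    return shuffled[:cut], shuffled[cut:]


def accuracy(predict, data):
    """Fraction of examples for which predict(input) equals the label."""
    return sum(predict(x) == y for x, y in data) / len(data)


train, test = split(make_dataset(P), TRAIN_FRACTION)

# "Memorizing" stand-in: a lookup table of the training pairs only.
table = dict(train)


def memorizer(pair):
    return table.get(pair)  # None for any pair never seen in training


# "Generalizing" stand-in: the actual rule behind the data.
def generalizer(pair):
    a, b = pair
    return (a + b) % P


print("memorizer  ", accuracy(memorizer, train), accuracy(memorizer, test))
print("generalizer", accuracy(generalizer, train), accuracy(generalizer, test))
```

Both stand-ins answer every training example correctly, but only the one that implements the rule also answers the unseen pairs. Grokking describes a trained network moving from the first kind of behavior to the second.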

The research team pointed out that grokking is closely related to phase changes. A phase change is a sudden change in a model's performance on some ability during training, and it is a common phenomenon when training models.

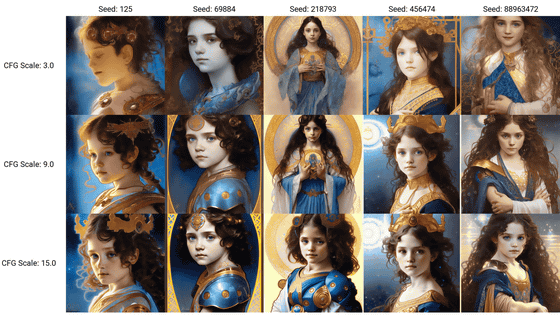

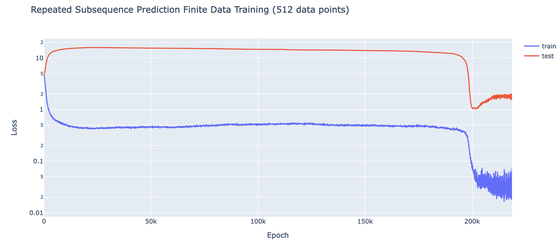

The graph plots the number of training iterations (horizontal axis) against the error of the model's output (vertical axis) as training continues on a "small AI model trained to perform modular addition". The error drops sharply before the number of training iterations reaches 1,000, showing that a major change has occurred.

Furthermore, the research team points out that this change manifests as grokking when regularization is applied and the model is given enough data to generalize.

The phase change occurs because it is 'difficult' for the model to come up with a general solution, Nanda said.

According to the research team, the model takes a long time to reach the phase change because something about the training error slows it down. Memorizing the data, by contrast, is very easy, so the model memorizes the training data first.

Until the phase change occurs, the model slowly interpolates from memorization to generalization in order to reduce its error. Once that interpolation is complete, the model's output changes dramatically; that is, a phase change occurs.

Based on this, Nanda said, "I don't claim to fully understand phase changes, but I do claim to have reduced my confusion about grokking to my confusion about phase changes!"

I don't claim to fully understand phase changes, but I DO claim to have reduced my confusion about grokking to my confusion about phase changes!

— Neel Nanda (@NeelNanda5) August 15, 2022

More specifically, the research team explains that grokking and phase changes are closely related: "If you choose an amount of data at which regularization gives the generalizing solution a slight advantage over the memorizing solution, you will see grokking."
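The trade-off in that statement can be made concrete with a toy calculation. It is a sketch under loudly stated assumptions, not the research team's analysis: regularization is modeled as a weight-decay penalty on the squared weight norm, both solutions are assumed to fit the training data perfectly, the memorizing solution's norm is assumed to grow with the number of training examples while the generalizing solution's norm stays fixed, and every number and function name is hypothetical.

```python
def regularized_cost(train_loss, weight_norm_sq, weight_decay):
    """Training loss plus a weight-decay penalty on the squared weight norm."""
    return train_loss + weight_decay * weight_norm_sq


def memorizing_norm_sq(n_examples, per_example=0.5):
    """Assumption: storing each extra example costs a fixed amount of norm."""
    return per_example * n_examples


# Assumption: the general algorithm needs a fixed norm, whatever the data size.
GENERALIZING_NORM_SQ = 100.0


def preferred_solution(n_examples, weight_decay=0.01):
    """Name the solution with the lower regularized cost (hypothetical numbers)."""
    mem = regularized_cost(0.0, memorizing_norm_sq(n_examples), weight_decay)
    gen = regularized_cost(0.0, GENERALIZING_NORM_SQ, weight_decay)
    return "generalizing" if gen < mem else "memorizing"


for n in (100, 220, 1000):
    print(n, preferred_solution(n))
```

Under these assumptions, a data amount just past the crossover point gives the generalizing solution only a slight edge, which is the regime the quote associates with grokking; with far more data the edge is large.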

The research team analyzed grokking using a small AI model, and it seems that this phase change cannot be confirmed in large models. In small AI models, however, the team explained, this strange change can be noticed.

The research team said, "One of the core claims of mechanistic interpretability is that neural networks can be understood: rather than being mysterious black boxes, they learn interpretable algorithms that can be reverse engineered and understood," and explained that this study serves as a proof of concept.

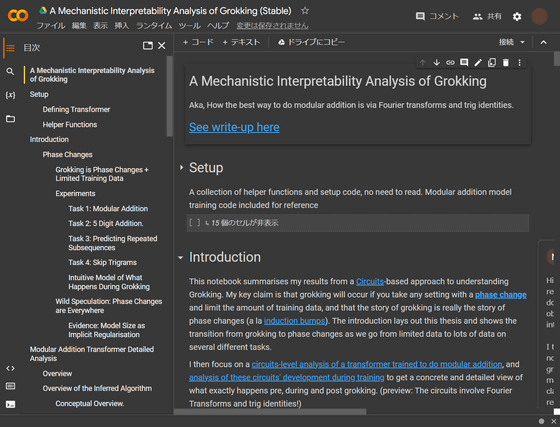

The research team summarizes the technical details and discussions in this analysis on the following page.

A Mechanistic Interpretability Analysis of Grokking (Stable) - Colaboratory

https://colab.research.google.com/drive/1F6_1_cWXE5M7WocUcpQWp3v8z4b1jL20

in Software, Posted by logu_ii