'Transformer Explainer' lets you see how large language models work

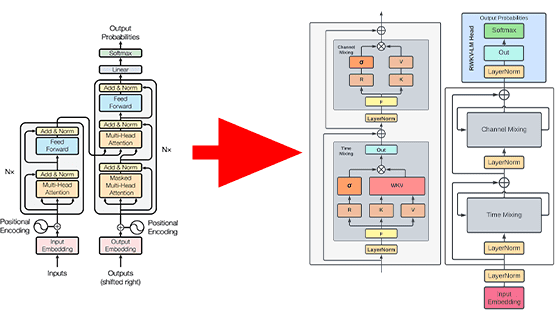

Large language models such as GPT-4, Llama, and Claude are built on a neural network architecture called the 'Transformer.'

Transformer Explainer

https://poloclub.github.io/transformer-explainer/

The video below gives a quick overview of how to use Transformer Explainer.

Transformer Explainer: Learn How LLM Transformer Models Work - YouTube

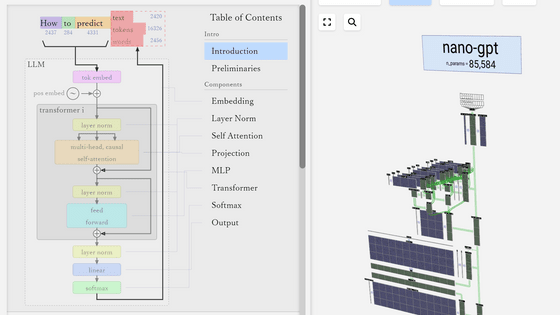

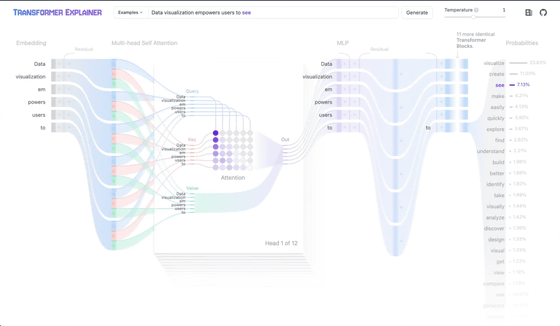

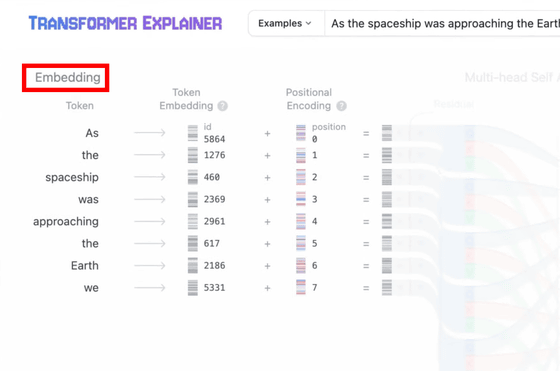

When you access Transformer Explainer, it looks like this.

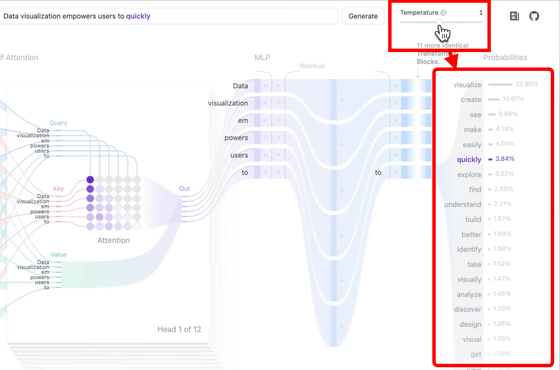

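The 'Temperature' slider in the upper right is a parameter that controls the shape of the probability distribution used to predict the next word. Lower values concentrate probability on the most likely words, while higher values spread it more evenly. Moving the slider left or right changes the distribution, which in turn can change which word is output next.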
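The slider's effect can be sketched with a temperature-scaled softmax. This is a minimal pure-Python sketch assuming the common formulation in which the model's raw scores (logits) are divided by the temperature before softmax is applied; the function name and the example logits are hypothetical and are not taken from Transformer Explainer's source code.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.1]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

The loop prints a sharper distribution at 0.5 and a flatter one at 2.0, which is the kind of change the slider produces.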

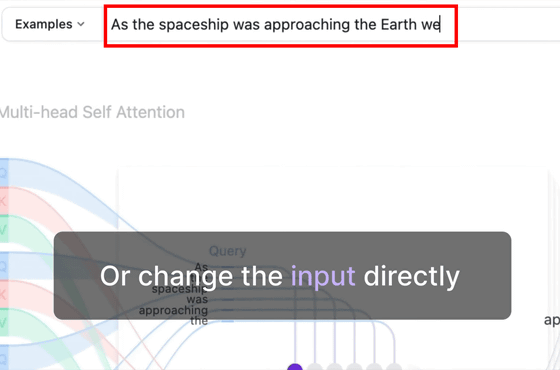

You can also enter text directly into the input field at the top.

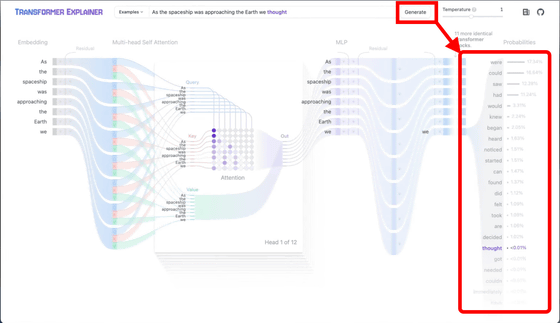

When you click the 'Generate' button to the right of the input field, the model computes a probability distribution using the current Temperature setting and outputs the next word.
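Producing the next word from that distribution usually means sampling from it rather than always taking the top word. The sketch below assumes simple temperature sampling with Python's `random.choices`; `sample_next_token`, the candidate words, and the scores are hypothetical, and this is an illustration rather than the tool's own implementation.

```python
import math
import random

def sample_next_token(candidates, logits, temperature=1.0, rng=None):
    """Pick one candidate at random, weighted by a temperature-scaled softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    # Unnormalized softmax weights; random.choices accepts relative weights,
    # so dividing by the total is not needed.
    weights = [math.exp(x - m) for x in scaled]
    return rng.choices(candidates, weights=weights, k=1)[0]

candidates = ["cat", "dog", "car"]   # hypothetical next-word candidates
logits = [3.0, 2.5, -1.0]            # hypothetical model scores
rng = random.Random(0)               # seeded so the draws are reproducible
print([sample_next_token(candidates, logits, 0.7, rng) for _ in range(5)])
```

Passing a seeded `random.Random` makes the draws reproducible, and a very low temperature makes the choice nearly deterministic.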

You can see how this probability distribution is produced by following the 'Embedding' step on the left. In the embedding step, the input string is split into units called tokens, and each token is converted into a vector. Clicking on the 'Embedding' section of Transformer Explainer visualizes how the tokens are transformed.
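To make the embedding step concrete, here is a toy sketch in pure Python. It assumes a whitespace tokenizer (real LLMs split text into subword tokens) and random numbers standing in for learned token and position embedding tables; the helper names, vocabulary, and dimension are hypothetical. Adding a position vector to each token vector is one common design and is used here only for illustration.

```python
import random

def random_table(rows, dim, seed):
    """Random rows standing in for a learned embedding table (hypothetical values)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(rows)]

def tokenize(text, token_to_id):
    """Toy whitespace tokenizer; real LLMs use subword tokenizers instead."""
    return [token_to_id[word] for word in text.lower().split()]

def embed(token_ids, token_table, position_table):
    """Look up each token's vector and add the vector for its position."""
    return [
        [t + p for t, p in zip(token_table[tid], position_table[pos])]
        for pos, tid in enumerate(token_ids)
    ]

vocab = ["the", "cat", "sat", "on", "mat"]
token_to_id = {tok: i for i, tok in enumerate(vocab)}
dim, max_len = 8, 16

token_table = random_table(len(vocab), dim, seed=1)
position_table = random_table(max_len, dim, seed=2)

ids = tokenize("The cat sat on the mat", token_to_id)  # [0, 1, 2, 3, 0, 4]
vectors = embed(ids, token_table, position_table)      # 6 vectors of length 8
```

Because a position vector is added, the two occurrences of "the" share a token ID but end up with different vectors.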

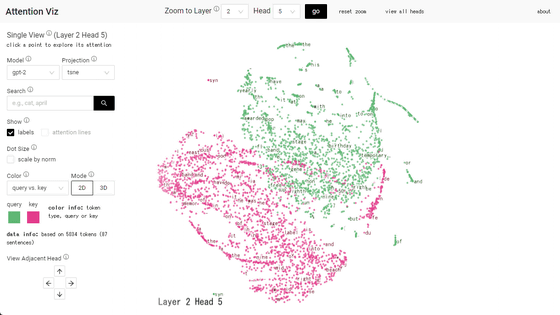

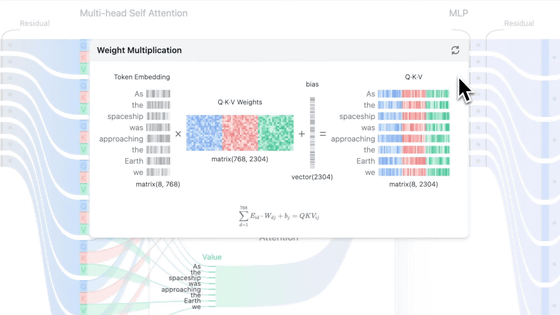

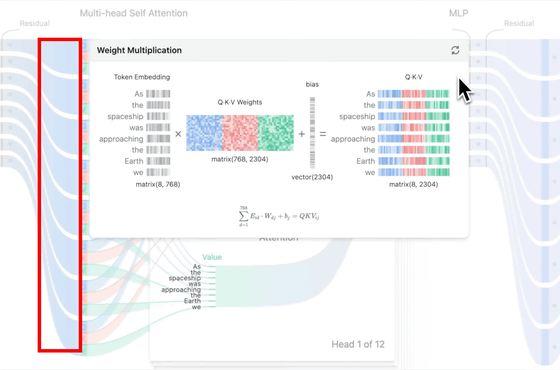

Next, three vectors called Query, Key, and Value are calculated from each token's vector. You can see this calculation by clicking the blue line extending from a token.
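The Query, Key, and Value computation amounts to multiplying each token vector by three separate weight matrices. This is a minimal sketch with random stand-ins for the learned weights; real models also add bias terms and may fuse the three matrices into one, which this sketch leaves out. `matmul` and `random_matrix` are hypothetical helpers.

```python
import random

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both as nested lists."""
    return [
        [sum(a_ik * b[k][j] for k, a_ik in enumerate(row)) for j in range(len(b[0]))]
        for row in a
    ]

def random_matrix(rows, cols, seed):
    """Random values standing in for learned weights."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

d_model = 8
x = random_matrix(4, d_model, seed=0)  # 4 token vectors from the embedding step

# One weight matrix per role.
W_q = random_matrix(d_model, d_model, seed=1)
W_k = random_matrix(d_model, d_model, seed=2)
W_v = random_matrix(d_model, d_model, seed=3)

Q, K, V = matmul(x, W_q), matmul(x, W_k), matmul(x, W_v)
```

Each row of `Q`, `K`, and `V` corresponds to one token, so every token gets its own Query, Key, and Value.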

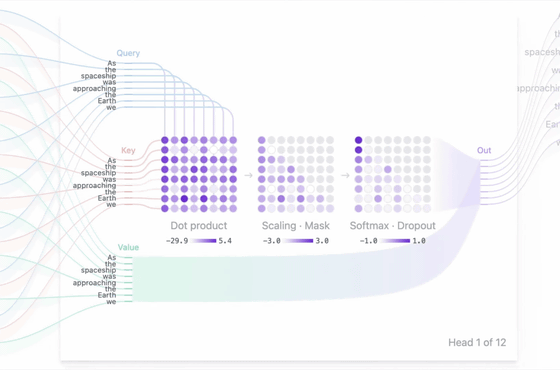

The core of the Transformer is a mechanism called 'Attention,' which assigns weights to the parts of the input that are useful for prediction and focuses on them. In the 'Multi-Head Attention' section in the center, you can see that the inner product of Query and Key is normalized with a function called 'Softmax.'
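The attention step can be sketched as scaled dot-product attention: the Query-Key inner products are divided by the square root of the head dimension, future positions are masked out so each token attends only to itself and earlier tokens, and softmax turns the scores into weights for averaging the Value vectors. This pure-Python sketch assumes a GPT-style causal model and splits the vectors evenly across heads; the function names and example values are hypothetical, and the output projection that real models apply after concatenating the heads is omitted.

```python
import math

def softmax(row):
    """Normalize scores into weights that sum to 1 (-inf becomes weight 0)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(Q, K, V):
    """Scaled dot-product attention where token i only sees tokens 0..i."""
    d_k = len(Q[0])
    out = []
    for i, q in enumerate(Q):
        # Inner product of this Query with every Key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        # Causal mask: later positions get -inf, so softmax gives them weight 0.
        scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Weighted average of the Value vectors.
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out

def multi_head_attention(Q, K, V, n_heads):
    """Split vectors into n_heads slices, attend per head, then concatenate."""
    d_model = len(Q[0])
    if d_model % n_heads != 0:
        raise ValueError("d_model must be divisible by n_heads")
    d_head = d_model // n_heads
    heads = []
    for h in range(n_heads):
        cols = slice(h * d_head, (h + 1) * d_head)
        heads.append(causal_attention([r[cols] for r in Q],
                                      [r[cols] for r in K],
                                      [r[cols] for r in V]))
    # Concatenate the per-head outputs for each token.
    return [sum((head[i] for head in heads), []) for i in range(len(Q))]

Q = K = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
V = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], [9.0, 10.0, 11.0, 12.0]]
out = multi_head_attention(Q, K, V, n_heads=2)
```

Because of the mask, the first token can attend only to itself, so its output is exactly its own Value vector.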

Transformer Explainer is developed as open source, and its source code is available on GitHub under the MIT License.

GitHub - poloclub/transformer-explainer: Transformer Explained: Learn How LLM Transformer Models Work with Interactive Visualization

https://github.com/poloclub/transformer-explainer


in AI, Video, Software, Web Application, Posted by log1i_yk