High school student develops 'Audio Decomposition,' an open source tool built from scratch that breaks music down into individual instrument sounds and turns it into sheet music

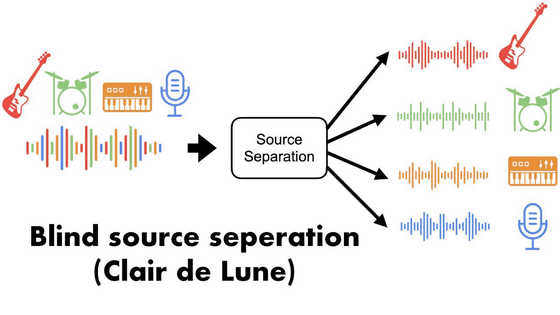

Matthew Bird, a high school student studying computer science, has released a tool called 'Audio Decomposition' that uses a simple algorithm to break down music made up of the overlapping sounds of various instruments and turn it into sheet music.

Matthew Bird - Audio Decomposition

GitHub - mbird1258/Audio-Decomposition

https://github.com/mbird1258/Audio-Decomposition

According to Bird, he started Audio Decomposition because he needed to convert music into sheet music himself and could not find an open source sound source separation tool built on simple algorithms. The tool was developed from scratch without using any external instrument separation libraries.

When you input a music file into Audio Decomposition, it separates the parts played by each instrument in the file and analyzes which notes each instrument plays and when. The video below shows the result of converting a piano performance of Debussy's 'Clair de Lune' into a note sequence.

Audio Decomposition (Clair de Lune) - YouTube

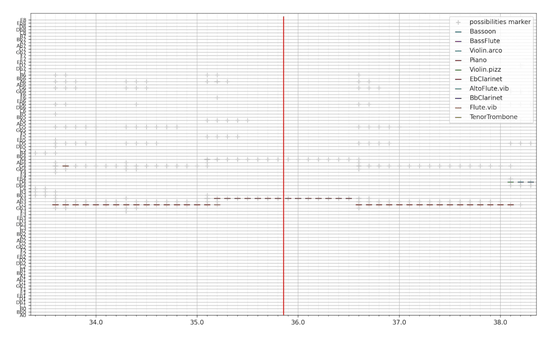

The video below shows the result of applying Audio Decomposition to 'Isabella's Lullaby.' The separated instruments are listed in the upper right corner, and the notes of each are displayed as a sequence.

Audio Decomposition (Isabella's Lullaby) - YouTube

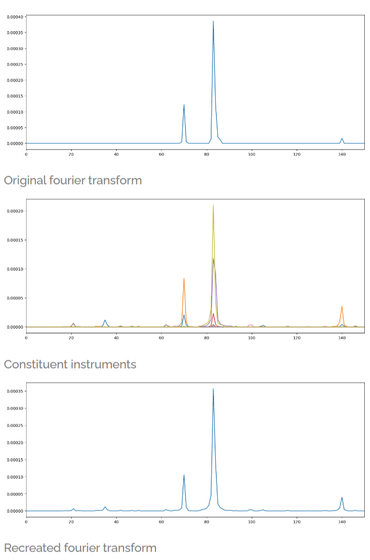

Audio Decomposition is based on Fourier transform and envelope analysis.

In the Fourier transform analysis, a Fourier transform is performed on the music file every 0.1 seconds. The stored Fourier transforms of each instrument are then added together in a combination that recreates that 0.1-second slice of music. The instrument data used here was taken from the instrument database at the University of Iowa's Electronic Music Studio.

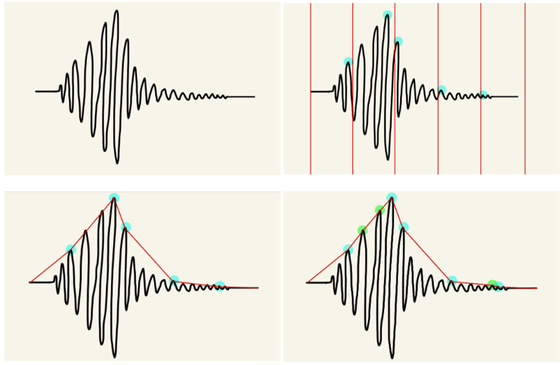
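
The idea of rebuilding one 0.1-second window from stored instrument spectra can be sketched as follows. This is only an illustration under stated assumptions, not Bird's implementation: the article does not say how the combination is found, so the sketch uses a multiplicative-update non-negative least-squares fit as a stand-in. The sample rate, the synthetic `tone` "instruments," and all function names are hypothetical.

```python
import cmath
import math

SAMPLE_RATE = 4000   # Hz; hypothetical, kept low so the naive DFT stays fast
WINDOW_SEC = 0.1     # window length mentioned in the article
N = round(SAMPLE_RATE * WINDOW_SEC)

def tone(freq, harmonic_amps):
    """Synthesize one window of a note as a sum of in-phase sine harmonics."""
    return [
        sum(a * math.sin(2 * math.pi * freq * (h + 1) * t / SAMPLE_RATE)
            for h, a in enumerate(harmonic_amps))
        for t in range(N)
    ]

def magnitude_spectrum(samples):
    """Naive DFT magnitudes for the bins below the Nyquist frequency."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(samples)))
        for k in range(n // 2)
    ]

def fit_weights(target, templates, iterations=200):
    """Find non-negative weights so that sum(w_i * template_i) ~ target.

    Assumption: multiplicative updates for non-negative least squares,
    chosen for the sketch; the source does not name a fitting method.
    """
    weights = [1.0] * len(templates)
    for _ in range(iterations):
        approx = [sum(w * tpl[k] for w, tpl in zip(weights, templates))
                  for k in range(len(target))]
        for i, tpl in enumerate(templates):
            num = sum(a * b for a, b in zip(tpl, target))
            den = sum(a * b for a, b in zip(tpl, approx)) + 1e-12
            weights[i] *= num / den
    return weights

# Two made-up "instruments" standing in for the stored instrument samples.
instrument_a = tone(440, [1.0, 0.5, 0.25])
instrument_b = tone(660, [1.0, 0.4])
mix = [0.8 * a + 0.3 * b for a, b in zip(instrument_a, instrument_b)]

templates = [magnitude_spectrum(instrument_a), magnitude_spectrum(instrument_b)]
weights = fit_weights(magnitude_spectrum(mix), templates)
print([round(w, 3) for w in weights])
```

The recovered weights approximate the mixing amounts (0.8 and 0.3) because the synthetic tones fall exactly on DFT bins; real recordings would be noisier and would likely need windowing and a much larger template set.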

In envelope analysis, the sound wave is first split into small chunks and the maximum value of each chunk is taken to form an envelope, which is then divided into three parts: the attack, sustain, and release of the sound.

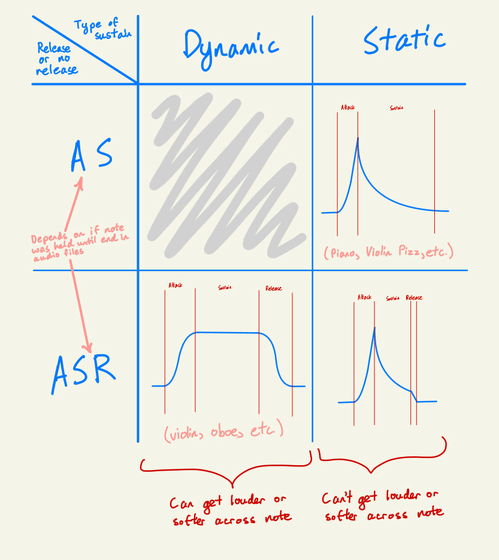
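
A minimal sketch of this envelope step is shown below. The chunk size, the use of absolute values, and the rule for splitting the envelope (attack up to the peak, release after the level last drops below a fraction of the peak) are assumptions made for illustration; the article does not specify how Audio Decomposition draws these boundaries.

```python
def envelope(samples, chunk_size):
    """Take the largest absolute sample value in each chunk."""
    return [
        max(abs(s) for s in samples[i:i + chunk_size])
        for i in range(0, len(samples), chunk_size)
    ]

def split_envelope(env, sustain_ratio=0.5):
    """Split an envelope into (attack, sustain, release).

    Hypothetical rule: the attack runs up to and including the peak,
    and the release starts after the last chunk that is still at
    least sustain_ratio * peak.
    """
    peak = max(env)
    attack_end = env.index(peak) + 1
    last_loud = max(i for i, v in enumerate(env) if v >= sustain_ratio * peak)
    release_start = max(last_loud + 1, attack_end)
    return env[:attack_end], env[attack_end:release_start], env[release_start:]

demo_env = envelope([0, 1, -3, 2, 0.5, -0.2], chunk_size=2)
attack, sustain, release = split_envelope(
    [0.1, 0.5, 1.0, 0.9, 0.8, 0.4, 0.2, 0.1]
)
print(demo_env, attack, sustain, release)
```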

Envelope patterns differ from instrument to instrument: a piano note follows a fixed pattern and decays exponentially, while a violin note can swell and fade in volume. Audio Decomposition distinguishes between these patterns to identify instruments and detect notes.

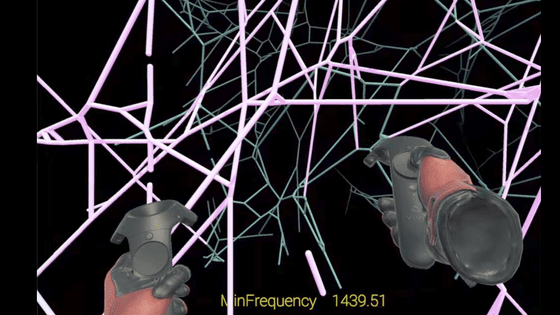

Specifically, the tool first applies a bandpass filter around the frequency of each note. Then, for each instrument, it computes the normalized cross-correlation with the instrument's attack and release to find where the note starts and ends, and calculates the mean squared error (MSE) between the filtered audio and the instrument waveform to obtain a cost. The final amplitude is determined by multiplying the amplitude found by the Fourier transform by the inverse of the cost from the envelope step.

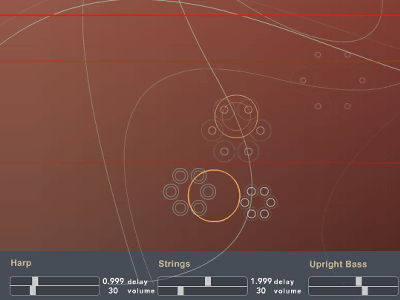
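
The steps described above can be sketched roughly as follows. Everything here is an assumption for illustration rather than the project's actual code: the biquad bandpass design, the Q value, the small epsilon that guards against division by zero, and all function names are hypothetical.

```python
import math

def bandpass(samples, center_hz, sample_rate, q=5.0):
    """Second-order (biquad) bandpass with unity gain at center_hz."""
    w0 = 2 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha                      # b1 is zero for this design
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in samples:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def best_lag(signal, template):
    """Slide template over signal; return (lag, score) of the best
    normalized cross-correlation, which ignores overall loudness."""
    t_norm = math.sqrt(sum(v * v for v in template))
    best, best_score = 0, float("-inf")
    for lag in range(len(signal) - len(template) + 1):
        seg = signal[lag:lag + len(template)]
        s_norm = math.sqrt(sum(v * v for v in seg))
        if s_norm == 0 or t_norm == 0:
            continue                            # skip silent segments
        score = sum(a * b for a, b in zip(seg, template)) / (s_norm * t_norm)
        if score > best_score:
            best, best_score = lag, score
    return best, best_score

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def final_amplitude(fourier_amp, cost, eps=1e-9):
    """Fourier amplitude times the inverse of the envelope cost."""
    return fourier_amp / (cost + eps)

# Note onset: find where a stored attack shape best matches the envelope.
note_envelope = [0, 0, 0, 0.1, 0.3, 0.5, 0.45, 0.4, 0.2, 0.05]
attack_template = [0.2, 0.6, 1.0]
onset, score = best_lag(note_envelope, attack_template)

# Bandpass check: a 440 Hz filter keeps a 440 Hz tone and damps 1760 Hz.
rate = 8000
in_band = [math.sin(2 * math.pi * 440 * t / rate) for t in range(800)]
out_band = [math.sin(2 * math.pi * 1760 * t / rate) for t in range(800)]
kept = sum(v * v for v in bandpass(in_band, 440, rate))
damped = sum(v * v for v in bandpass(out_band, 440, rate))
print(onset, round(score, 3), kept > damped)
```

Because the correlation is normalized, the attack template matches the quieter note envelope at the right position even though their amplitudes differ; a lower MSE cost then translates into a larger final amplitude for that instrument.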

The final output is displayed using

Bird has used Audio Decomposition himself to identify pitches in performance videos posted on YouTube and create piano scores from them.

Audio Decomposition is developed as open source, and the source code is available on GitHub.
