MIT spinoff Liquid AI releases non-Transformer AI models LFM 1B, 3B, and 40B MoE


Liquid Foundation Models: Our First Series of Generative AI Models

https://www.liquid.ai/liquid-foundation-models

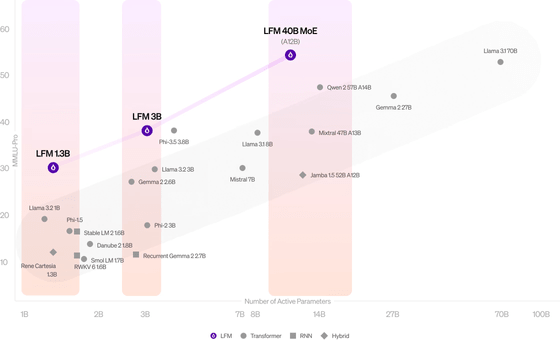

The newly announced generative AI LFMs come in three sizes: LFM 1.3B, which is suited to resource-constrained environments; LFM 3B, a mid-sized model; and LFM 40B MoE, a Mixture of Experts (MoE) model designed to tackle more complex tasks. According to Liquid AI, a spinoff of the Massachusetts Institute of Technology (MIT), the LFMs deliver excellent performance relative to their size.

Liquid AI explained, 'LFM is a large-scale neural network built with computational units deeply rooted in the theory of dynamical systems, signal processing, and numerical linear algebra. This unique combination leverages decades of theoretical advances in these fields to make intelligence at any scale possible. In this way, LFM has become a general-purpose AI model that can be used to model any kind of sequential data, including video, audio, text, time series, and signals.'

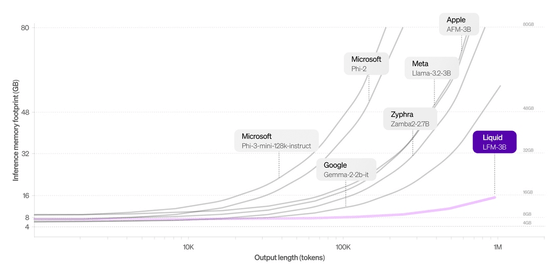

LFM is more memory efficient than Transformer-based models. Because it compresses its input efficiently, it can process longer sequences on the same hardware.
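To give a rough sense of why this matters, the sketch below compares how inference memory grows with sequence length for a Transformer-style key/value cache versus a fixed-size compressed state. It is only an illustration: the layer count, head count, head dimension, and state size are hypothetical values chosen to resemble a 3B-class model, and the fixed-state function is a stand-in for any architecture that compresses its input, not a description of LFM's internals, which the article does not detail.

```python
# Illustrative only: every size below is an assumed, hypothetical value.

def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=32, head_dim=80,
                   bytes_per_value=2):
    """Memory for cached keys and values, which grows linearly with seq_len."""
    # Factor of 2 covers one key tensor and one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


def fixed_state_bytes(seq_len, n_layers=32, state_values=2560 * 16,
                      bytes_per_value=2):
    """Memory for a fixed-size per-layer state; seq_len is deliberately unused."""
    return n_layers * state_values * bytes_per_value


def to_gib(n_bytes):
    """Convert a byte count to GiB."""
    return n_bytes / 2**30


if __name__ == "__main__":
    print(f"{'tokens':>10} {'KV cache (GiB)':>16} {'fixed state (GiB)':>18}")
    for tokens in (1_000, 8_000, 32_000, 128_000):
        kv = to_gib(kv_cache_bytes(tokens))
        fixed = to_gib(fixed_state_bytes(tokens))
        print(f"{tokens:>10} {kv:>16.3f} {fixed:>18.3f}")
```

Under these assumptions the cache term scales with the number of tokens while the fixed state does not, which is the general shape of the trade-off described above. Real memory use also includes model weights and activations, which this sketch leaves out.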

Below is a graph of the memory required for inference on a 3B class model, showing how small the memory footprint of the LFM 3B is.

You can also try out LFM for yourself.

Liquid Labs

When you access the above URL, you will see something like this.

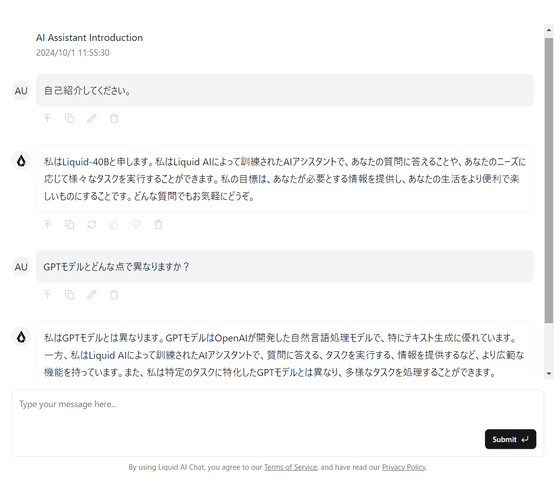

Since it is not a multimodal model, it does not support image generation, but it responds naturally even to input in Japanese.

According to Liquid AI, the name 'Liquid' pays homage to the company's roots in dynamic, adaptive learning systems.


in Software, Posted by log1l_ks