'Fugaku-LLM', a Japanese-specific large-scale language model trained on the supercomputer 'Fugaku', is released

'Fugaku-LLM', a large-scale language model with 13 billion parameters trained on the supercomputer 'Fugaku', was released on Friday, May 10, 2024. Fugaku-LLM was trained on its own training data rather than being built on an existing large-scale language model, and its developers tout it as outperforming existing Japanese-specific large-scale language models.

'Fugaku-LLM,' a large-scale language model trained on the supercomputer 'Fugaku,' is released. It has excellent Japanese language skills and is expected to be used in research and business | Tokyo Tech News | Tokyo Institute of Technology

Fujitsu releases 'Fugaku-LLM,' a large-scale language model trained on the supercomputer 'Fugaku'

https://pr.fujitsu.com/jp/news/2024/05/10.html

Fugaku-LLM has been under development since May 2023 by Tokyo Institute of Technology, Tohoku University, Fujitsu, and RIKEN. Nagoya University, CyberAgent, and Kotoba Technologies joined the research in August 2023.

The research team ported 'Megatron-DeepSpeed', a framework for training Transformer models, to Fugaku and optimized Transformer performance on Fugaku's hardware. This made computation six times faster when training large-scale language models on Fugaku. In addition, by optimizing communication for Fugaku's high-dimensional interconnect, 'Tofu Interconnect D', the team tripled communication speed.
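The two speedups apply to different parts of a training step, so the end-to-end gain depends on how step time divides between computation and communication, which the article does not report. Below is a minimal Amdahl's-law-style sketch in Python. It assumes, hypothetically, that the two phases run one after the other with no overlap and that tripling communication speed cuts communication time to one third; the function name `combined_speedup` and the sample time shares are illustrative only.

```python
def combined_speedup(compute_fraction: float,
                     compute_speedup: float = 6.0,
                     comm_speedup: float = 3.0) -> float:
    """End-to-end speedup of one training step (Amdahl-style estimate).

    Hypothetical model: the ORIGINAL step time splits into a compute share
    (compute_fraction) and a communication share (1 - compute_fraction)
    that run sequentially with no overlap. Each share shrinks by its own
    speedup factor; the result is old_time / new_time.
    """
    if not 0.0 <= compute_fraction <= 1.0:
        raise ValueError("compute_fraction must be in [0, 1]")
    comm_fraction = 1.0 - compute_fraction
    new_time = compute_fraction / compute_speedup + comm_fraction / comm_speedup
    return 1.0 / new_time


if __name__ == "__main__":
    # Hypothetical compute shares; the article gives no breakdown.
    for f in (0.5, 0.8, 0.95):
        print(f"compute share {f:.0%}: {combined_speedup(f):.2f}x")
    # prints 4.00x, 5.00x and 5.71x for the three shares
```

Under these assumptions the overall gain always lies between 3x and 6x, approaching 6x as computation dominates. Real training frameworks often overlap communication with computation, which would change these numbers.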

GPUs are generally considered better suited than CPUs for training large-scale language models. However, thanks to these gains in computation and communication speed, the research team was able to train a large-scale language model in a realistic time frame using the domestically manufactured Fujitsu CPUs installed in Fugaku. With GPUs currently hard to obtain worldwide, the achievement is described as 'an important achievement in terms of utilizing Japan's semiconductor technology and economic security.'

There are already many large-scale language models specialized for Japanese, but most of them were built by additionally training overseas models on Japanese data. In contrast, Fugaku-LLM is unique in that it was trained from scratch on original training data collected by CyberAgent. 60% of the training data is Japanese content, with the rest including English, mathematics, and code, for a total of approximately 400 billion tokens.
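The figures above imply a rough split of the training data by token count. This quick Python check uses only the article's rounded numbers (about 400 billion tokens, 60% Japanese). The article does not say how the remaining 40% divides among English, mathematics, and code, so the sketch lumps them together; the helper name `split_tokens` is illustrative.

```python
TOTAL_TOKENS = 400e9    # "approximately 400 billion" tokens, per the article
JAPANESE_SHARE = 0.60   # 60% of the training data is Japanese content


def split_tokens(total: float, japanese_share: float) -> tuple[float, float]:
    """Return (Japanese tokens, all other tokens) for a given Japanese share."""
    japanese = total * japanese_share
    return japanese, total - japanese


if __name__ == "__main__":
    ja, other = split_tokens(TOTAL_TOKENS, JAPANESE_SHARE)
    print(f"Japanese: ~{ja / 1e9:.0f}B tokens")             # ~240B
    print(f"English, math, code: ~{other / 1e9:.0f}B tokens")  # ~160B
```

Because the inputs are rounded, the results are approximate: roughly 240 billion Japanese tokens and 160 billion tokens of everything else.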

Additionally, while many large-scale language models specialized for Japanese have 7 billion parameters, Fugaku-LLM has 13 billion, making it larger than those models. The research team explained its choice of 13 billion parameters as follows: 'Even larger models have been developed overseas, but large-scale language models require large computational resources to use, so models with too many parameters are difficult to use. In light of the computing environment in 2024, we chose 13 billion parameters for Fugaku-LLM as a well-balanced size that still delivers high performance.'
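To make the point about computational resources concrete, here is a back-of-the-envelope Python estimate of the memory occupied by the weights alone at 7 billion versus 13 billion parameters. The bytes-per-parameter values are the standard sizes of the listed numeric formats, not figures from the article, and the article does not say which precision Fugaku-LLM is distributed in. The estimate ignores activations, the key-value cache, and framework overhead, so actual requirements are higher. The names `weight_memory_gb` and `FORMATS` are illustrative.

```python
# Bytes per parameter for common storage formats (assumed, not from the article).
FORMATS = {"fp32": 4, "bf16/fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes activations, KV cache and runtime overhead.
    """
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in (("7B model", 7e9), ("Fugaku-LLM 13B", 13e9)):
        sizes = ", ".join(
            f"{fmt} ~ {weight_memory_gb(params, b):.0f} GB"
            for fmt, b in FORMATS.items()
        )
        print(f"{name}: {sizes}")
    # 7B model: fp32 ~ 28 GB, bf16/fp16 ~ 14 GB, int8 ~ 7 GB
    # Fugaku-LLM 13B: fp32 ~ 52 GB, bf16/fp16 ~ 26 GB, int8 ~ 13 GB
```

At 16-bit precision this gives roughly 14 GB for a 7B model and 26 GB for a 13B model. The 26 GB figure already exceeds the memory of many single consumer GPUs before any runtime overhead is counted, which illustrates why the team avoided going much larger.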

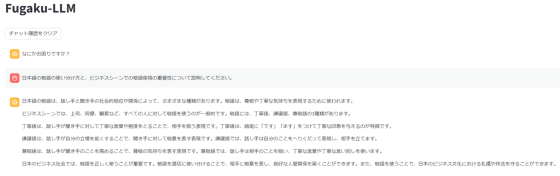

Fugaku-LLM achieved an average score of 5.5 on the Japanese MT-Bench, a benchmark that measures the Japanese-language performance of large-scale language models. This is reportedly the highest score among open models developed in Japan and trained on original data. In particular, it scored 9.18 on humanities and social science tasks, and is expected to hold natural conversations that reflect features of Japanese such as honorific language.

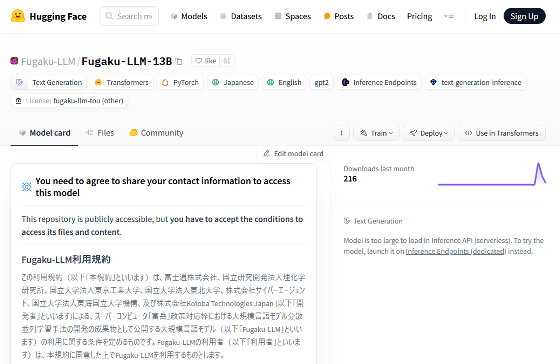

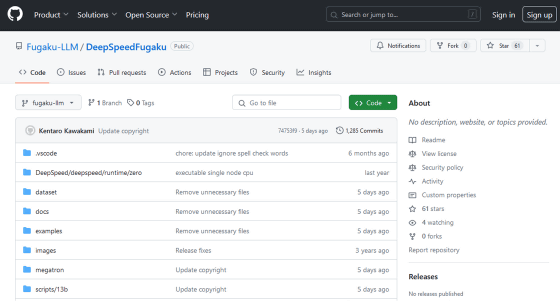

Fugaku-LLM is publicly available on Hugging Face and GitHub and can be used for research and commercial purposes under the terms of its license. It is also available on the Fujitsu Research Portal, Fujitsu's environment for trying out advanced technologies.

Fugaku-LLM/Fugaku-LLM-13B Hugging Face

https://huggingface.co/Fugaku-LLM/Fugaku-LLM-13B

GitHub - Fugaku-LLM/DeepSpeedFugaku

https://github.com/Fugaku-LLM/DeepSpeedFugaku


in Software, Posted by log1o_hf