CyberAgent releases models based on distilled 'DeepSeek-R1' models with additional training on Japanese data

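On January 27, 2025, CyberAgent announced that it had released large language models based on DeepSeek-R1-Distill-Qwen-14B/32B that were additionally trained on Japanese data.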

[Model release announcement]

— CyberAgent Public Relations & IR (@CyberAgent_PR) January 27, 2025

We have released an LLM that was trained using Japanese data based on DeepSeek-R1-Distill-Qwen-14B/32B. We will continue to contribute to the development of natural language processing technology in Japan through model release and industry-academia collaboration. https://t.co/Oi0l2ITzhh

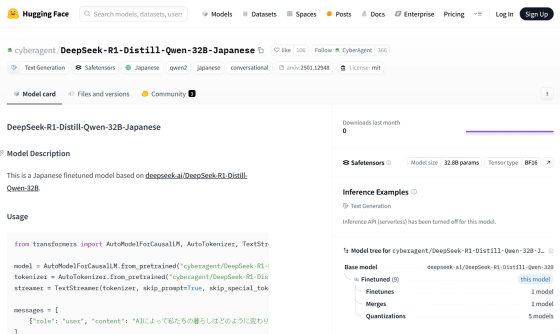

cyberagent/DeepSeek-R1-Distill-Qwen-32B-Japanese · Hugging Face

https://huggingface.co/cyberagent/DeepSeek-R1-Distill-Qwen-32B-Japanese

cyberagent/DeepSeek-R1-Distill-Qwen-14B-Japanese · Hugging Face

https://huggingface.co/cyberagent/DeepSeek-R1-Distill-Qwen-14B-Japanese

DeepSeek, a Chinese AI development company, attracted a lot of attention in November 2024 by announcing 'DeepSeek-R1-Lite-Preview', a large language model specialized for reasoning. In December, it announced 'DeepSeek-V3', a large language model comparable to OpenAI's GPT-4o, and in January 2025 it released the reasoning models 'DeepSeek-R1-Zero' and 'DeepSeek-R1', which were trained on top of DeepSeek-V3, as open source.

DeepSeek's rapid succession of big announcements has made it the center of attention in the tech industry, and its app 'DeepSeek - AI' has reached number one in the free app rankings on the US App Store. At the time of writing, it remains at the top of the list.

Chinese AI development company 'DeepSeek' is rapidly emerging as a hot topic in the technology industry, and has also ranked first in the App Store's free app rankings - GIGAZINE

CyberAgent has now released the large language models 'DeepSeek-R1-Distill-Qwen-32B-Japanese' and 'DeepSeek-R1-Distill-Qwen-14B-Japanese' on the AI development platform Hugging Face. Both are based on 'DeepSeek-R1-Distill-Qwen-14B/32B' and have been additionally trained on Japanese data.

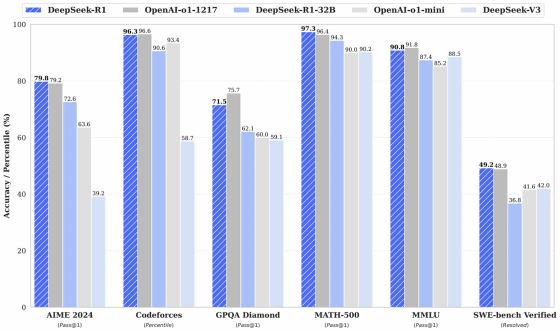

The graph below compares the performance of the original 'DeepSeek-R1-Distill-Qwen-32B' (light blue) with 'DeepSeek-R1' (blue stripes), 'OpenAI-o1-1217' (dark gray), 'OpenAI-o1-mini' (light gray), and 'DeepSeek-V3' (light blue). It can be seen that 'DeepSeek-R1-Distill-Qwen-32B' outperforms 'OpenAI-o1-mini' in multiple benchmarks.

'DeepSeek-R1' is a reasoning model that works through a chain of thought. In response to user input, it writes out its intermediate 'thoughts' before the final answer, so the user can see the process by which the answer was reached.
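As a rough illustration of how an application might separate the displayed thought process from the final answer, here is a minimal sketch. It assumes the model wraps its reasoning in `<think>...</think>` tags, which is the convention commonly seen in DeepSeek-R1-style output but is not stated in this article. The function name `split_reasoning` and the sample string are hypothetical.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think> tags.
# This convention is not described in the article itself.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model response.

    If no <think> block is found, the reasoning is an empty string and
    the whole response is treated as the answer.
    """
    match = THINK_PATTERN.search(raw_output)
    if match is None:
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    # Everything after the closing tag is treated as the final answer.
    answer = raw_output[match.end():].strip()
    return reasoning, answer


# Hypothetical sample response with a Japanese thought process.
sample = "<think>\nまず 2 と 3 を足す。\n</think>\n答えは 5 です。"
reasoning, answer = split_reasoning(sample)
print("reasoning:", reasoning)
print("answer:", answer)
```

Keeping the two parts separate lets an interface show or hide the reasoning independently of the answer. Some runtimes may emit only the closing tag or strip the tags entirely, so a real integration would need to check the actual output format.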

Users who have actually tried 'DeepSeek-R1-Distill-Qwen-32B-Japanese' have reported that the model's thought process is written in Japanese.

The thinking is in Japanese! That's great! pic.twitter.com/d2N0bVcPLx

— Azunyan1111 (@Azunyan1111_) January 27, 2025

It has been reported that DeepSeek-R1 complies with Chinese government censorship and does not give clear answers on sensitive topics such as Tiananmen Square, Taiwan, and the treatment of the Uighurs.

DeepSeek's AI model 'DeepSeek-R1' complies with Chinese government restrictions on sensitive topics such as Tiananmen Square, Taiwan, and the treatment of the Uighurs - GIGAZINE


in Software, Web Service, Posted by log1h_ik