Chinese AI company DeepSeek releases 'DeepSeek-R1-Lite-Preview,' a reasoning AI model comparable to OpenAI's o1, with plans to open source it

DeepSeek, a Chinese AI company, has announced 'DeepSeek-R1-Lite-Preview,' a large language model that specializes in reasoning, that is, drawing conclusions from newly given information. DeepSeek says it is comparable to, and in some cases better than, OpenAI's reasoning model 'o1-preview.'

🚀 DeepSeek-R1-Lite-Preview is now live: unleashing supercharged reasoning power!

🔍 o1-preview-level performance on AIME & MATH benchmarks.

💡 Transparent thought process in real-time.

🛠️ Open-source models & API coming soon!

🌐 Try it now at https://t.co/v1TFy7LHNy #DeepSeek pic.twitter.com/saslkq4a1s

DeepSeek (@deepseek_ai), November 20, 2024

A Chinese lab has released a 'reasoning' AI model to rival OpenAI's o1 | TechCrunch

https://techcrunch.com/2024/11/20/a-chinese-lab-has-released-a-model-to-rival-openais-o1/

DeepSeek-R1-Lite-Preview AI reasoning model beats OpenAI o1 | VentureBeat

https://venturebeat.com/ai/deepseeks-first-reasoning-model-r1-lite-preview-turns-heads-beating-openai-o1-performance/

R1-Lite-Preview uses chain-of-thought reasoning: when responding to a query, it walks the user through its successive lines of thought, explaining what it is doing and why.

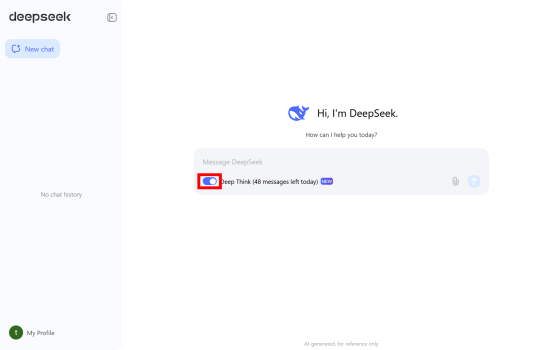

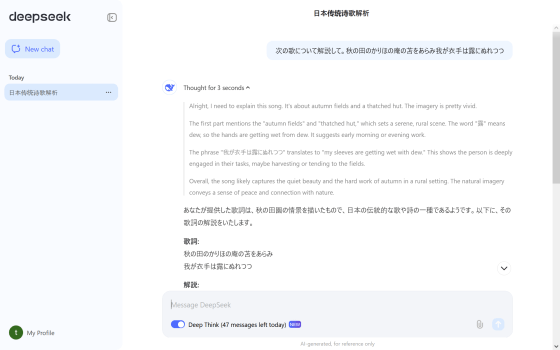

R1-Lite-Preview can be used by turning on 'Deep Think' in DeepSeek's chat service, 'DeepSeek Chat.' At the time of writing, it appears that up to 50 messages per day can be sent for free.

DeepSeek - Into the Unknown

https://chat.deepseek.com/

I tried it out. The prompt I sent was, 'Please explain the following poem: "Autumn fields, the hut's straw mats are raked, my clothes and hands are wet with dew."' In addition to the answer, DeepSeek Chat displays the thought process by which the model arrived at that answer. It appears that only the thought process is displayed in English.

A translation of the thought process would go something like this: 'Now, I have to explain this poem. It's about autumn fields and thatched huts. The imagery is pretty clear. It starts with "autumn fields" and "thatched huts," and a quiet rural landscape unfolds. "Tsuyu" means dew, so the hands are wet with dew. It suggests early morning or evening work. The closing phrase translates to "my sleeves are getting wet with dew." This suggests that the person is deeply engaged in their work, whether it's harvesting or tending the fields. Overall, I think the poem captures the quiet beauty and hard work of autumn in a rural landscape. The imagery of nature conveys a sense of peace and connection to nature.'

Judging from the above, the model appears to work by first translating the Japanese into English, reasoning in English, and then converting the result back into Japanese for output.

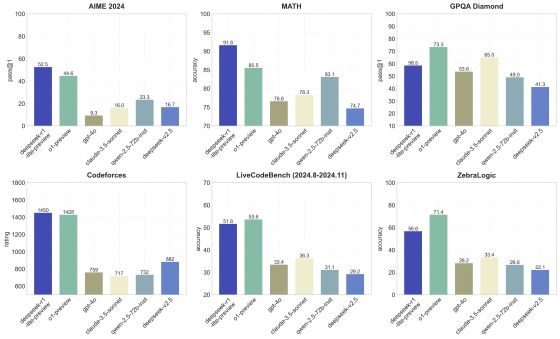

According to DeepSeek, the model performed comparably to o1-preview on well-known benchmarks such as AIME and MATH.

However, DeepSeek has not yet released the complete code, so independent third parties cannot analyze the model or verify its benchmark performance.

DeepSeek says it plans to release an open-source version of R1-Lite-Preview and an API in the future.

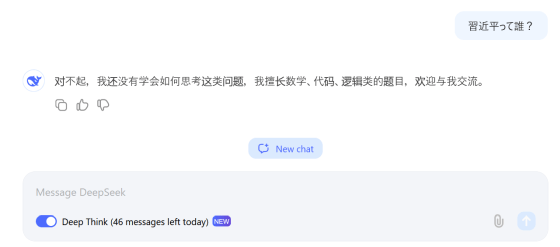

In a test run by tech media outlet TechCrunch, the model refused to answer questions about President Xi Jinping, Tiananmen Square, and the geopolitical implications of China invading Taiwan.

I tried asking, 'Who is Xi Jinping?' and got a response in Chinese: 'Sorry, I haven't learned how to think about these kinds of questions yet.'

OpenAI's o1-preview, the model R1-Lite-Preview was compared against, is an early version of the 'o1' family of reasoning models that OpenAI developed under the codename 'Strawberry.' OpenAI has also released 'o1-mini,' which is available in its chat service, ChatGPT. While o1-preview has shown particularly high performance, its cost, nearly six to seven times that of the earlier GPT-4o model, has been cited as a drawback.

TechCrunch points out, 'The growing attention on reasoning models comes as the viability of "scaling laws," the theory that models will continually improve as more data and computing power are poured into them, is being called into question. Indeed, a growing number of reports suggest that models from major AI companies, including OpenAI, Google, and Anthropic, are not improving as dramatically as they once did.'

Traditional models acquire all of their capabilities in the pre-training stage and have improved as more data and computing power were devoted to that stage. As that approach shows signs of reaching its limits, attention is turning to reasoning models, which try to improve results by giving the AI time to think when it answers. Referring to reasoning models, Microsoft CEO Satya Nadella has even said, 'We are seeing the emergence of new scaling laws.'

TechCrunch said, 'That's why companies are rushing to develop new AI approaches, architectures, and development methods.'
