Meta develops “V-JEPA”, an architecture that learns by “watching” videos

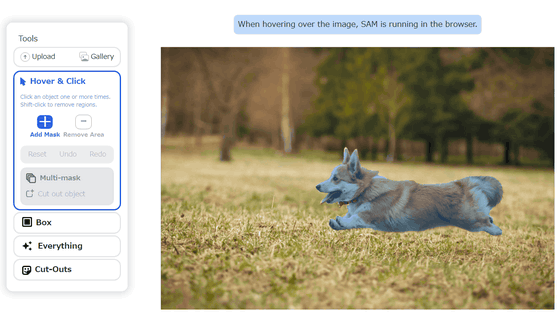

Meta has developed V-JEPA, an architecture that learns by predicting the masked or missing parts of videos. The architecture itself cannot generate video, but Meta says it may serve as an approach for developing new models.

Revisiting Feature Prediction for Learning Visual Representations from Video | Research - AI at Meta

V-JEPA learns to predict what is happening in a video, and is said to be particularly good at distinguishing fine-grained interactions between objects and fine-grained changes that unfold over time. For example, when part of a video is masked and the model must infer which action is actually occurring, such as whether someone is putting down a pen, picking up a pen, or pretending to put down a pen, V-JEPA is said to perform considerably better than existing methods.

Training with V-JEPA consists of showing the model a video with most of it masked and asking it to fill in the parts it cannot see. This lets the machine learning model learn to infer how a scene varies across time and space.
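As a rough illustration of the masking step, the sketch below treats a video as a grid of patches (frames x height x width) and hides one rectangular block at the same position in every frame, leaving only a minority of patches visible. The function names, grid size, and block size are all hypothetical choices for this example and are not taken from Meta's code.

```python
import random

def make_tube_mask(frames, height, width, block_h, block_w, seed=0):
    """Return a frames x height x width grid of booleans; True marks a hidden patch.

    One rectangular block is chosen at random and hidden in every frame,
    so the model cannot simply copy the region from a neighboring frame.
    """
    rng = random.Random(seed)
    top = rng.randrange(height - block_h + 1)
    left = rng.randrange(width - block_w + 1)
    return [
        [
            [top <= y < top + block_h and left <= x < left + block_w
             for x in range(width)]
            for y in range(height)
        ]
        for _ in range(frames)
    ]

def split_patches(mask):
    """Split patch coordinates (t, y, x) into visible and hidden lists."""
    visible, hidden = [], []
    for t, frame in enumerate(mask):
        for y, row in enumerate(frame):
            for x, is_hidden in enumerate(row):
                (hidden if is_hidden else visible).append((t, y, x))
    return visible, hidden

# Hypothetical sizes: 4 frames of 6x6 patches, with a 5x5 block hidden.
mask = make_tube_mask(frames=4, height=6, width=6, block_h=5, block_w=5)
visible, hidden = split_patches(mask)
masked_ratio = len(hidden) / (len(visible) + len(hidden))
print(f"visible={len(visible)} hidden={len(hidden)} ratio={masked_ratio:.2f}")
```

During training, the model would see only the `visible` patches and be asked to predict something about the `hidden` ones.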

Unlike generative approaches that try to fill in all masked or missing parts, V-JEPA has the flexibility to discard unpredictable information, which is said to improve training and sample efficiency by 1.5 to 6 times. For example, if a tree appears in the video, V-JEPA may leave the small movements of individual leaves unpredicted. Meta explains that these characteristics could help in developing models that generate videos.
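The tree example can be made concrete with a toy comparison between a loss computed on raw pixels and a loss computed on abstract features. In the sketch below, `toy_encoder` is a hypothetical stand-in for a learned encoder (it merely average-pools pixel values), and the L1 distance is an assumption made for this example. The point is only that a smooth prediction is heavily penalized in pixel space for missing the leaf flutter, while in feature space the flutter has already been discarded.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def toy_encoder(patch_pixels, pool=4):
    """Hypothetical stand-in for a learned encoder: average-pool groups of pixels.

    Pooling throws away high-frequency detail, which plays the role of
    'unpredictable information' in this example.
    """
    return [sum(patch_pixels[i:i + pool]) / pool
            for i in range(0, len(patch_pixels), pool)]

# A hidden patch of leaves: average brightness 0.5 plus rapid flutter of +/-0.2.
target_pixels = [0.5 + (0.2 if i % 2 == 0 else -0.2) for i in range(16)]

# A generative model must guess every pixel; a smooth guess misses the flutter.
predicted_pixels = [0.5] * 16
pixel_loss = l1_distance(predicted_pixels, target_pixels)

# A feature-predicting model outputs features directly and is compared
# against the encoded target, where the flutter has been averaged away.
predicted_features = [0.5] * 4
feature_loss = l1_distance(predicted_features, toy_encoder(target_pixels))

print(f"pixel-space loss:   {pixel_loss:.3f}")
print(f"feature-space loss: {feature_loss:.3f}")
```

Here the pixel-space loss is about 0.2 while the feature-space loss is essentially zero, so the feature-space objective does not spend effort on the flutter.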

In addition, V-JEPA is not specialized for a particular task. It is described as the first video model that does well at "frozen evaluation", in which the pretrained model is left unchanged and only a small task-specific layer is trained on top of it, which makes it versatile. Meta aims to support predictions over longer videos, to extend the current video-only approach, and to incorporate multimodal inputs. V-JEPA is published under the CC BY-NC license.
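Frozen evaluation can be sketched in a few lines. In the toy example below, `frozen_encoder` is a hypothetical stand-in for a pretrained model whose parameters are never updated, and a small perceptron-style linear probe is the only part that is trained. The clips, labels, and feature design are invented for illustration and have nothing to do with Meta's actual benchmarks.

```python
FROZEN_WEIGHTS = (1.0, -1.0)  # hypothetical "pretrained" parameters, never updated

def frozen_encoder(clip):
    """Map a clip (object height per frame) to a 2-d feature vector."""
    w_last, w_first = FROZEN_WEIGHTS
    net_motion = w_last * clip[-1] + w_first * clip[0]
    mean_height = sum(clip) / len(clip)
    return [net_motion, mean_height]

def train_probe(features, labels, epochs=20, lr=0.1):
    """Train a perceptron-style linear probe; only these weights change."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            score = sum(w * v for w, v in zip(weights, x)) + bias
            error = y - (1 if score > 0 else 0)
            weights = [w + lr * error * v for w, v in zip(weights, x)]
            bias += lr * error
    return weights, bias

def predict(probe, clip):
    """Classify a clip using the frozen encoder plus the trained probe."""
    weights, bias = probe
    x = frozen_encoder(clip)
    return 1 if sum(w * v for w, v in zip(weights, x)) + bias > 0 else 0

# Toy task: 1 = picking up a pen (height rises), 0 = putting it down.
clips = [[0.0, 0.5, 1.0], [0.2, 0.6, 0.9], [1.0, 0.5, 0.0], [0.9, 0.4, 0.1]]
labels = [1, 1, 0, 0]

features = [frozen_encoder(c) for c in clips]  # encoder is only called, not trained
probe = train_probe(features, labels)
accuracy = sum(predict(probe, c) == y for c, y in zip(clips, labels)) / len(clips)
print(f"probe accuracy on the toy clips: {accuracy:.2f}")
```

Because the encoder is reused as-is, adapting to a different task in this setup means training only another small probe rather than retraining the whole model.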

GitHub - facebookresearch/jepa: PyTorch code and models for V-JEPA self-supervised learning from video.

https://github.com/facebookresearch/jepa

in Software, Posted by log1p_kr