Meta releases 'Segment Anything Model 2 (SAM 2),' an AI model that can accurately identify objects in images and videos in real time

Meta has announced Segment Anything Model 2 (SAM 2), a unified AI model for images and video that can accurately identify which pixels in an image or video belong to which object. SAM 2 can segment any object and track it consistently across every frame of a video in real time, which could make it a transformative tool for video editing and mixed reality.

Our New AI Model Can Segment Anything – Even Video | Meta

Introducing SAM 2: The next generation of Meta Segment Anything Model for videos and images

https://ai.meta.com/blog/segment-anything-2/

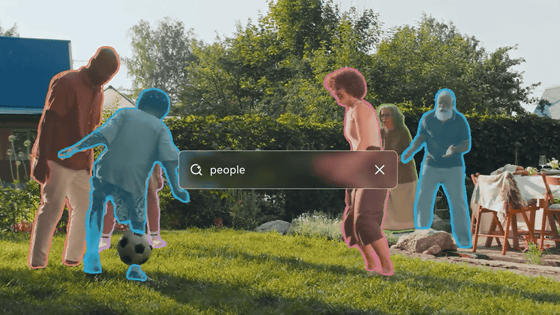

Correctly identifying which pixels belong to which object is called 'segmentation,' and it is useful for tasks such as scientific image analysis and photo editing. Meta had already developed its own segmentation AI model, the Segment Anything Model (SAM), which powers Instagram's AI features 'Backdrop' and 'Cutouts.'
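To make the idea concrete, a segmentation result can be thought of as a per-pixel mask that marks which pixels belong to an object. The toy Python sketch below is only an illustration, not SAM's actual output format or API: it assumes a tiny grayscale image stored as nested lists and a hand-written boolean mask, and the helper names `cutout` and `mask_area` are hypothetical. Keeping only the masked pixels is roughly the kind of operation behind a cutout-style feature.

```python
from typing import List

Image = List[List[int]]   # toy grayscale image: rows of pixel intensities
Mask = List[List[bool]]   # True where the pixel belongs to the object


def cutout(image: Image, mask: Mask, fill: int = 0) -> Image:
    """Keep pixels inside the mask and replace every other pixel with `fill`."""
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [px if keep else fill for px, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def mask_area(mask: Mask) -> int:
    """Count how many pixels are assigned to the object (True counts as 1)."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    image = [
        [10, 20, 30],
        [40, 50, 60],
        [70, 80, 90],
    ]
    # Hypothetical mask: the object occupies the centre column.
    mask = [
        [False, True, False],
        [False, True, False],
        [False, True, False],
    ]
    print(cutout(image, mask))  # [[0, 20, 0], [0, 50, 0], [0, 80, 0]]
    print(mask_area(mask))      # 3
```

In a real model the mask is predicted rather than written by hand; the point here is only that segmentation assigns each pixel to an object or to the background.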

Beyond Instagram, SAM has also spurred a wide range of applications in science, medicine, and other industries: in marine science it has been used to segment sonar images to analyze coral reefs, in disaster relief to analyze satellite imagery, and in medicine to segment cell images to help detect skin cancer.

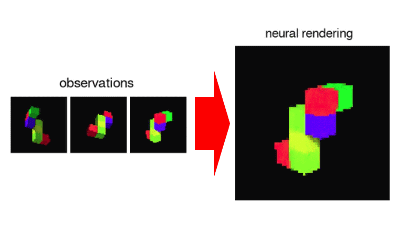

SAM 2 is the next-generation version of SAM. It extends SAM's segmentation capabilities to video, allowing you to segment any object in an image or video and track it consistently across every frame of the video in real time. Beyond adding video support, SAM 2 can also cut the required interaction time to as little as one-third.

Segmenting video is much harder than segmenting still images, and existing AI models have struggled with it: in video, objects can move quickly, change appearance, or be hidden by other objects or parts of the scene. According to Meta, SAM 2 overcomes many of these challenges.

To get a sense of how accurately SAM 2 can identify objects in a video, check out this video posted by Meta CEO Mark Zuckerberg:

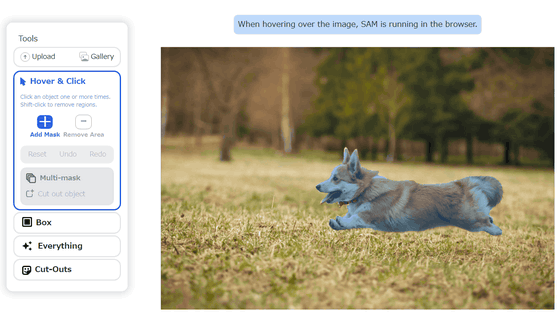

Meta has also released a demonstration web app for SAM 2, allowing you to see its segmentation capabilities in action.

SAM 2 Demo | By Meta FAIR

https://sam2.metademolab.com/

Click 'Try it now' to experience the demo.

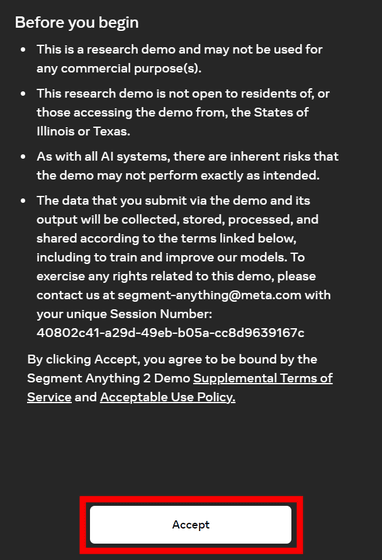

A notice will then appear. It states that the SAM 2 demo is for research purposes only and may not be used commercially, and that the demo is not available to residents of Illinois or Texas. It also warns that the demo may not work as intended, and that data submitted through the demo and its output will be collected, stored, processed, and shared in accordance with the linked terms and used to train and improve AI models. Click 'Accept' at the bottom of the screen.

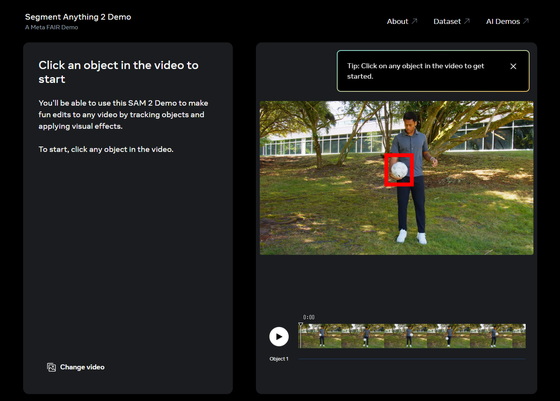

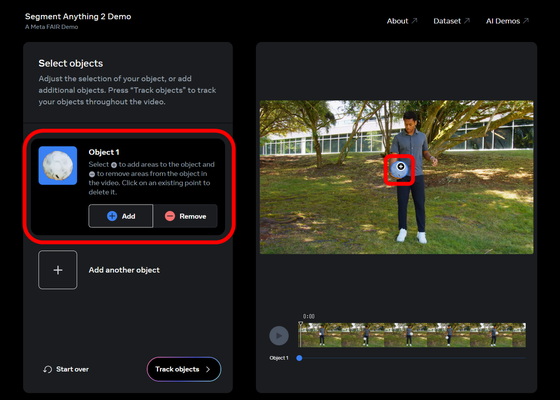

The demo screen looks like this. Click an object in the video.

SAM 2 then highlights the object you clicked:

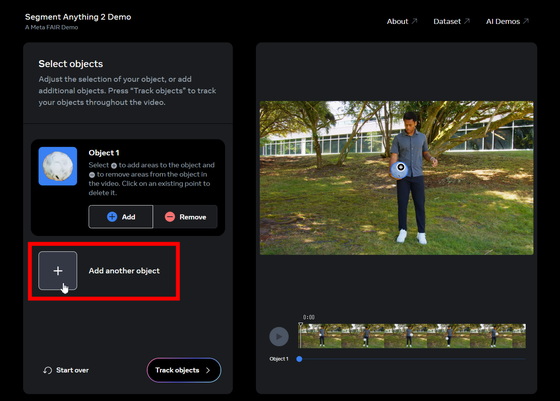

If you want to identify a new object, click 'Add another object.'

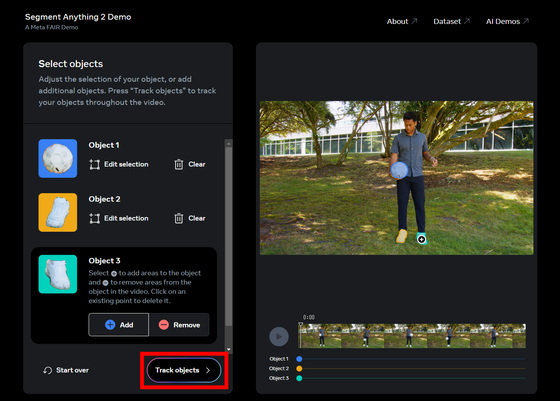

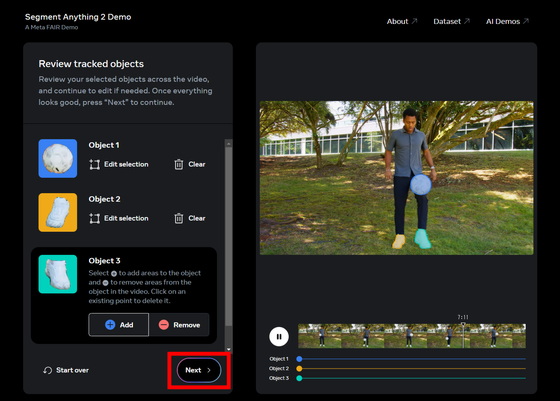

You can identify up to three objects at the same time. After selecting the objects, click 'Track objects'.

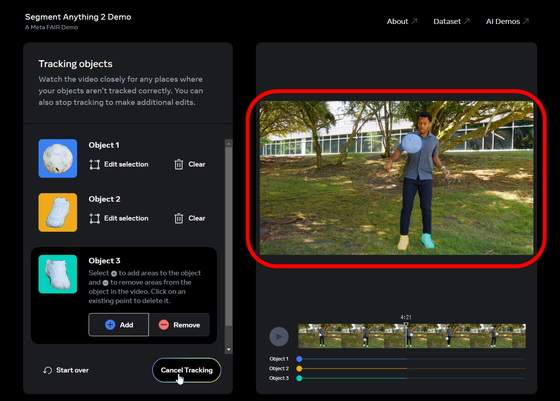

The video in the red frame then plays. The ball and the shoes are both white and frequently overlap, yet SAM 2 keeps each object accurately separated.

Click 'Next'.

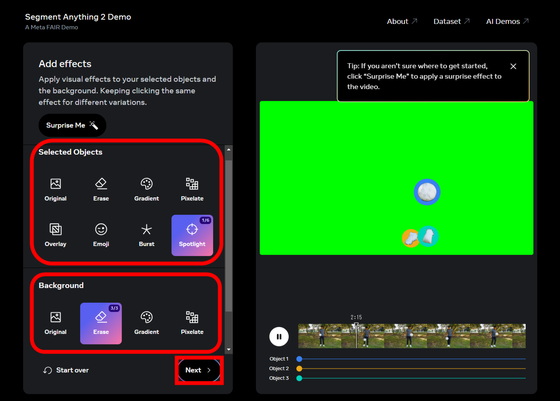

The options in the 'Selected Objects' section change how the selected objects are highlighted, while the options in the 'Background' section change how everything other than the selected objects is rendered. Click 'Next'.
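Conceptually, a background effect on a tracked video reuses per-frame masks: each frame has its own mask that follows the object as it moves, and pixels outside the mask are restyled. The toy Python sketch below dims the background frame by frame. It is a hypothetical illustration of the idea, not how Meta's demo is implemented; the function names, the nested-list frame format, and the dimming factor are all assumptions.

```python
from typing import List

Frame = List[List[int]]   # toy grayscale frame: rows of pixel intensities
Mask = List[List[bool]]   # True where the pixel belongs to a selected object


def dim_background(frame: Frame, mask: Mask, factor: float = 0.5) -> Frame:
    """Leave object pixels unchanged and scale background pixels by `factor`."""
    return [
        [px if keep else int(px * factor) for px, keep in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


def apply_background_effect(frames: List[Frame], masks: List[Mask],
                            factor: float = 0.5) -> List[Frame]:
    """Apply the effect frame by frame, using each frame's own (tracked) mask."""
    if len(frames) != len(masks):
        raise ValueError("need exactly one mask per frame")
    return [dim_background(f, m, factor) for f, m in zip(frames, masks)]


if __name__ == "__main__":
    # Two 1x3 frames; the hypothetical object moves one pixel to the right.
    frames = [[[100, 100, 100]], [[100, 100, 100]]]
    masks = [[[True, False, False]], [[False, True, False]]]
    print(apply_background_effect(frames, masks))
    # [[[100, 50, 50]], [[50, 100, 50]]]
```

Because the mask changes from frame to frame, the effect stays attached to the object as it moves, which is why consistent tracking matters for this kind of editing.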

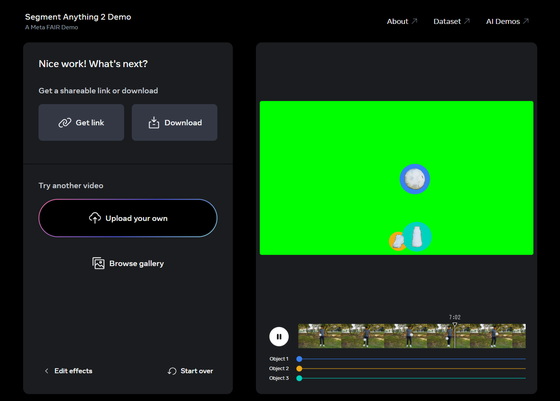

The segmented video can be downloaded, and you can also upload your own video and segment it using 'Upload your own.'

The following video shows a demo of SAM 2.

I tried out a demo of Meta's segmentation AI model 'Segment Anything Model 2 (SAM 2)' - YouTube

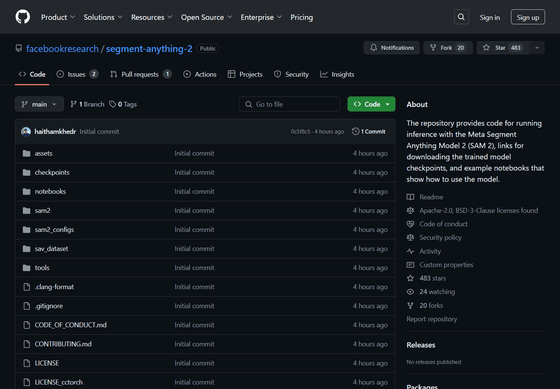

SAM 2 is open source under the Apache 2.0 license and is available on GitHub.

GitHub - facebookresearch/segment-anything-2: The repository provides code for running inference with the Meta Segment Anything Model 2 (SAM 2), links for downloading the trained model checkpoints, and example notebooks that show how to use the model.

https://github.com/facebookresearch/segment-anything-2

In addition, Meta has released the dataset used to train SAM 2, 'SA-V', under the CC BY 4.0 license.

SA-V | Meta AI Research

https://ai.meta.com/datasets/segment-anything-video/
