Google develops technology to accurately track hand movements in real time with a smartphone camera

Google's AI development team announced at CVPR 2019, the international conference on computer vision, that it will implement a machine learning system that tracks hand movements in real time in MediaPipe, an open-source framework provided by Google. Existing systems for tracking hand and finger movements have relied on high-performance desktop environments, but Google explains that its new approach runs in real time on a smartphone camera and can even track multiple hands.

Google AI Blog: On-Device, Real-Time Hand Tracking with MediaPipe

https://ai.googleblog.com/2019/08/on-device-real-time-hand-tracking-with.html

Perceiving the shape of hands and the movement of fingers can improve the user experience across a variety of technical fields and platforms, because it enables computers to understand sign language and hand gestures made in front of the screen. It could also make it possible to overlay digital content on the real world in augmented reality.

However, while humans naturally recognize the shape of a hand and the movement of its fingers by sight, this is a difficult task for computers. Hands often hide their own fingers, for example when making a fist or shaking hands, so fingers that were visible a moment ago disappear from view. In addition, unlike a face, which has distinct features such as eyes, nose and mouth, a hand has no such high-contrast parts, which the Google AI blog explains makes it relatively difficult to identify each part.

To address this, the Google AI development team built its hand and finger tracking system by combining multiple machine learning models. Google's system uses the following three models.

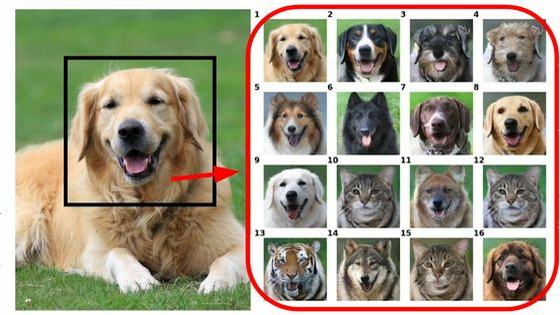

・ A palm detector model (BlazePalm) that identifies the orientation and bounding box of the palm

・ A hand landmark model that returns high-precision 3D keypoints of the hand within the region cropped by the palm detector

・ A gesture recognition model that classifies the keypoints detected by the hand landmark model into a discrete set of gestures

By combining these models, Google has made efficient real-time tracking of hands and fingers possible. Because the palm to be tracked is first cropped accurately out of the image, the amount of data the subsequent model has to process is greatly reduced, and the Google AI team says this lets the system devote its computing resources to improving accuracy, for example in coordinate prediction.
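The detect-then-crop cascade can be sketched in Python as follows. This is a minimal, hypothetical illustration: `detect_palm` and `predict_landmarks` are stub stand-ins (not MediaPipe functions), and the 640×480 frame and 64×64 palm box are made-up sizes chosen only to show how much smaller the input to the second model becomes.

```python
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[int]]  # toy grayscale frame: rows of pixel values


@dataclass
class Box:
    x: int
    y: int
    w: int
    h: int


def detect_palm(frame: Image) -> Box:
    """Hypothetical stand-in for a palm detector such as BlazePalm."""
    height, width = len(frame), len(frame[0])
    # Pretend the palm occupies a 64x64 region at the centre of the frame.
    return Box(x=width // 2 - 32, y=height // 2 - 32, w=64, h=64)


def crop(frame: Image, box: Box) -> Image:
    """Cut the detected region out of the full frame."""
    return [row[box.x:box.x + box.w] for row in frame[box.y:box.y + box.h]]


def predict_landmarks(hand: Image) -> List[Tuple[float, float, float]]:
    """Hypothetical stand-in for the landmark model: 21 (x, y, z) keypoints."""
    return [(0.0, 0.0, 0.0)] * 21


def run_pipeline(frame: Image):
    box = detect_palm(frame)          # stage 1: runs on the whole frame
    hand = crop(frame, box)           # only the palm region is kept
    landmarks = predict_landmarks(hand)  # stage 2: runs on the crop only
    return hand, landmarks


frame = [[0] * 640 for _ in range(480)]
hand, landmarks = run_pipeline(frame)
full_pixels = 640 * 480
crop_pixels = len(hand) * len(hand[0])
print(crop_pixels, full_pixels, f"{crop_pixels / full_pixels:.1%}")  # 4096 307200 1.3%
```

A real tracker would presumably also use the palm orientation reported by the detector when cropping; the stub uses an axis-aligned box for simplicity.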

BlazePalm, the palm detector model, must be able to detect hands of widely varying sizes anywhere in the image, as well as hands whose fingers and other patterns are hidden because they are making a fist or shaking hands. And because a hand lacks high-contrast features like a face's eyes and nose, BlazePalm uses additional context such as the arm and body to pin down the hand's exact position. The Google AI team reports that BlazePalm detects palms with an average precision of 95.7%.

The hand landmark model that runs next breaks the cropped hand region down into 21 keypoints, such as the bases and joints of the fingers, and returns an accurate 3D coordinate for each. Thanks to machine learning training, it can estimate coordinates accurately even for hands that are only partially visible or clenched into a fist. To improve accuracy, the Google AI team appears to train on real-world images combined with renderings of a high-quality synthetic hand model.
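One way to hold the 21 keypoints in code is a flat list indexed by joint. The sketch below assumes the ordering used in MediaPipe's published hand model (index 0 is the wrist, followed by four points per finger from base to fingertip); the helper names `landmark_index` and `finger_points` are hypothetical.

```python
from typing import Dict, List, Tuple

Point3D = Tuple[float, float, float]

# Assumed layout: 0 = wrist, then 4 points per finger, base to tip.
FINGERS = ["thumb", "index", "middle", "ring", "pinky"]


def landmark_index() -> Dict[str, List[int]]:
    """Map each finger name to its four landmark indices, base to tip."""
    return {name: list(range(1 + 4 * i, 5 + 4 * i)) for i, name in enumerate(FINGERS)}


def finger_points(landmarks: List[Point3D], finger: str) -> List[Point3D]:
    """Return the wrist followed by the four landmarks of one finger."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks")
    return [landmarks[0]] + [landmarks[i] for i in landmark_index()[finger]]


layout = landmark_index()
print(layout["index"])  # [5, 6, 7, 8]
print(layout["pinky"])  # [17, 18, 19, 20]
```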

Finally, the gesture recognition model derives a matching gesture from the 3D coordinates of the hand. Each finger's state (bent or straight) is determined from the accumulated angles of its joints, and the resulting set of finger states is mapped against a predefined set of gestures. At the time of writing, the Google AI team had built a gesture set drawing on multiple cultures, including the United States, Europe and China, and the model can identify gestures such as "Thumbs Up," "Fist," "OK Sign," "Corna" (the rock-and-roll horns sign) and "Peace Sign." The GIFs below show the model identifying rapidly changing hand gestures.
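The finger-state step can be sketched as follows. This is a minimal illustration, not Google's implementation: it assumes the 21 landmarks are ordered wrist first and then four points per finger from base to tip, sums the turning angle at each joint along the wrist-to-fingertip chain, and calls a finger straight when that sum is small. The 60° threshold, the gesture table and `synthetic_hand` (a toy data generator) are all hypothetical; gestures such as the OK sign, which depend on fingertip contact, are left out.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]
FINGERS = ["thumb", "index", "middle", "ring", "pinky"]
BENT_THRESHOLD_DEG = 60.0  # hypothetical cut-off, not Google's value


def _sub(a: Point3D, b: Point3D) -> Point3D:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _angle_deg(u: Point3D, v: Point3D) -> float:
    """Angle between two direction vectors, in degrees."""
    dot = sum(p * q for p, q in zip(u, v))
    norm = math.sqrt(sum(p * p for p in u)) * math.sqrt(sum(q * q for q in v))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def cumulative_bend(chain: Sequence[Point3D]) -> float:
    """Sum of turning angles along a joint chain (0 = perfectly straight)."""
    segments = [_sub(b, a) for a, b in zip(chain, chain[1:])]
    return sum(_angle_deg(s, t) for s, t in zip(segments, segments[1:]))


def finger_states(landmarks: List[Point3D]) -> Tuple[bool, ...]:
    """True = straight, False = bent, for thumb..pinky."""
    states = []
    for i in range(len(FINGERS)):
        chain = [landmarks[0]] + landmarks[1 + 4 * i:5 + 4 * i]
        states.append(cumulative_bend(chain) < BENT_THRESHOLD_DEG)
    return tuple(states)


# Hypothetical gesture table keyed by (thumb, index, middle, ring, pinky).
GESTURES: Dict[Tuple[bool, ...], str] = {
    (True, False, False, False, False): "Thumbs Up",
    (False, False, False, False, False): "Fist",
    (False, True, True, False, False): "Peace Sign",
    (False, True, False, False, True): "Corna",
}


def classify(landmarks: List[Point3D]) -> Optional[str]:
    """Return the matching gesture name, or None if no entry fits."""
    return GESTURES.get(finger_states(landmarks))


def synthetic_hand(straight: Tuple[bool, ...]) -> List[Point3D]:
    """Toy 21-point hand: wrist at origin, each finger along its own ray."""
    hand: List[Point3D] = [(0.0, 0.0, 0.0)]
    for i, is_straight in enumerate(straight):
        dx, dy = float(i - 2), 1.0  # spread the fingers in the x-y plane
        if is_straight:
            hand.extend((k * dx, k * dy, 0.0) for k in (1, 2, 3, 4))
        else:  # curl the last two segments out of the plane and back
            hand.extend([(dx, dy, 0.0), (2 * dx, 2 * dy, 0.0),
                         (2 * dx, 2 * dy, 1.0), (dx, dy, 1.0)])
    return hand


print(classify(synthetic_hand((False, True, True, False, False))))  # Peace Sign
```

Looking up a tuple of finger states in a table keeps the rule set easy to extend, at the cost of ignoring hand orientation and finger contact.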

![]()

The machine learning models developed by the Google AI team are implemented in MediaPipe, Google's open-source framework for building machine learning pipelines for tasks such as image detection, so developers are free to use the hand tracking system in their own applications. Google plans to keep extending the hand tracking functionality with more robust and stable tracking, and says it is looking forward to the creative ideas and applications the research and developer community will build with it.
