Meta announces next-generation AI architecture ``Megabyte'' that enables content generation of over 1 million tokens, far exceeding the token limit of existing generation AI

Meta's AI research team has proposed the `` Megabyte ''

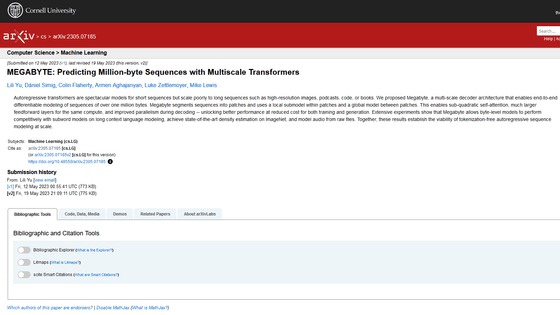

[2305.07185] MEGABYTE: Predicting Million-byte Sequences with Multiscale Transformers

https://doi.org/10.48550/arXiv.2305.07185

Meta AI Unleashes Megabyte, a Revolutionary Scalable Model Architecture - Artisana

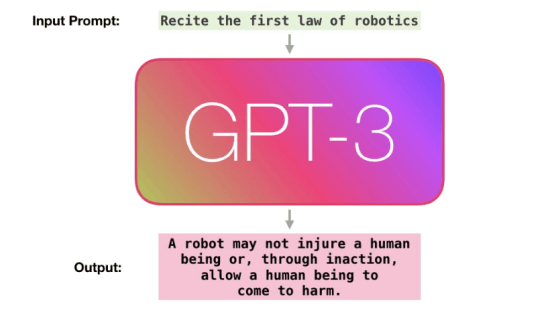

High-performance generative AI models such as GPT-4 developed by OpenAI are based on the Transformer architecture, which helps understand complex user input and generate long sentences. However, Meta's AI research team points out that 'the Transformer architecture has two limitations.' One of the limitations the AI research team points out is that as user input and AI model output become longer, the Transformer architecture becomes more computationally intensive, 'making it difficult to process token-heavy sequences efficiently.' The point is that

Another limitation is that the '

Due to these factors, it has been pointed out that the current Transformer architecture may have reached its limit in terms of efficiently processing input and output with a large amount of tokens. Meta's AI research team therefore developed an AI architecture focused on addressing these limitations and unlocking new possibilities for content generation.

Developed by Meta, Megabyte employs a unique system that splits the sequences associated with inputs and outputs into 'patches' rather than individual tokens. Each patch is processed by a local AI model, and then a global AI model merges all the patches to produce the final output.

Megabyte's approach is purported to address the challenges faced by current AI models, where a single feed-forward neural network works on patches containing multiple tokens in parallel to It is believed that these challenges can be overcome.

By building a patch-based system instead of tokens, Megabyte is able to perform computations in parallel, unlike traditional Transformer architectures that perform computations serially. Parallel processing can achieve significant efficiency improvements over AI models based on the Transformer architecture, even with a large number of parameters for Megabyte-powered AI models.

Experiments conducted by the research team showed that Megabyte, which had 1.5 billion parameters, could generate sequences about 40% faster than the Transformer model, which had 350 million parameters.

In addition, GPT-4 was limited to 32,000 tokens, and Anthropic's text generation AI ' Claude ' was limited to 100,000 tokens, while the Megabyte model was able to process sequences of over 1.2 million tokens. I was. Capable of processing 1.2 million tokens, the Megabyte model is expected to open up new possibilities for content generation and become an architecture that exceeds the limits of current AI models.

``Megabyte is promising in that it can abolish tokenization in large-scale language models,'' said Andrei Karpathy, lead AI engineer at OpenAI, to Megabyte. Furthermore, ``ChatGPT is excellent at tasks such as creative writing and summarization, but is not good at tasks such as restoring summarized sentences because of tokenization.''

Promising. Everyone should hope that we can throw away tokenization in LLMs. Doing so naively creates (byte-level) sequences that are too long, so the devil is in the details.

—Andrej Karpathy (@karpathy) May 15, 2023

Tokenization means that LLMs are not actually fully end-to-end. There is a whole separate stage with… https://t.co/t240ZPxPm7

Meta's AI research team acknowledges that the Megabyte architecture is a breakthrough technology, but suggests that there may be other avenues for optimization. Technology news media Artisana said, ``We have extended the capabilities of the traditional Transformer architecture in areas such as a more efficient encoder model that employs patch techniques and a decoding model to decompose the sequence into smaller blocks. We may be able to accommodate next-generation models.'

Related Posts:

in Software, Posted by log1r_ut