What is the 'strawberry problem' that trips up large language models such as GPT-4 and Claude?

While AI based on

The 'strawberry' problem: How to overcome AI's limitations | VentureBeat

https://venturebeat.com/ai/the-strawberrry-problem-how-to-overcome-ais-limitations/

Generative AI such as ChatGPT and Stable Diffusion makes its advanced capabilities easy for anyone to see, whether that means writing sophisticated text and code or producing illustrations and realistic images. However, image generation AI, for example, has a weakness known as the 'one banana problem.' When Daniel Hook, CEO of the research technology company Digital Science, generated an image with the prompt 'A single banana,' the output showed 'two bananas in one bunch.' According to Hook, the AI has learned a bias from its dataset along the lines of 'there are two bananas in the photo,' and because it does not actually understand what the prompt specifies, it is thought to be pulled toward that learned bias.

What is the 'one banana problem' that highlights the problems faced by generative AI? - GIGAZINE

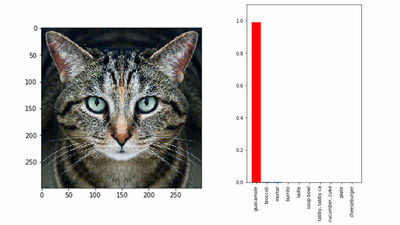

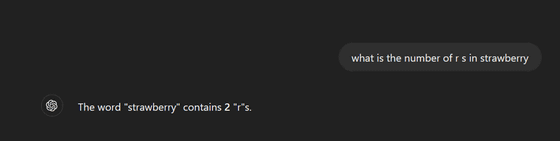

A similar problem that Jog raises for text-based LLMs is the 'strawberry problem.' In the image below, Jog asks ChatGPT, 'what is the number of rs in strawberry?' As a glance at the word 'strawberry' shows, it contains three 'r's in total: the third, eighth, and ninth letters. However, in the image below, ChatGPT answers, "The word 'strawberry' contains 2 'r's."

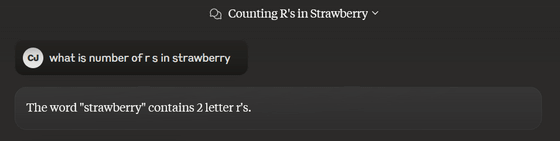

In the example below, we asked

In other examples, LLM-based AI can also miscount the 'm's in 'mammal' and the 'p's in 'hippopotamus.' According to Jog, the strawberry problem stems from the way LLMs process text.

Nearly all high-performance LLMs are built on the Transformer, a deep learning architecture published by Google researchers and others. A Transformer does not take in raw text directly; instead, the text is first 'tokenized,' that is, split into tokens that are converted into a numerical representation. A token can be a complete word or part of a word. For example, if the vocabulary contains a token for the word 'strawberry,' the word is read as a single token, but depending on the word, the input may instead be split into a combination of two tokens, such as 'straw' and 'berry.' Breaking the input down into tokens lets the model more accurately predict which token will come next in the sentence.
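To make the 'strawberry' versus 'straw' + 'berry' example concrete, here is a minimal sketch of a greedy longest-match subword tokenizer. It is a deliberate simplification: the two vocabularies and their integer IDs are hypothetical, and real LLM tokenizers (for example byte-pair encoding) learn much larger vocabularies with different splitting rules, so this is not how GPT-4's or Claude's tokenizers actually split the word.

```python
# Hypothetical vocabularies for illustration only; not any real model's tokenizer.
FULL_VOCAB = {"strawberry": 1, "straw": 2, "berry": 3}  # has a whole-word token
SUBWORD_VOCAB = {"straw": 2, "berry": 3}                # only has the pieces
UNK_ID = 0  # hypothetical ID for anything not in the vocabulary


def tokenize(word: str, vocab: dict[str, int]) -> list[str]:
    """Split a word into the longest vocabulary pieces, scanning left to right."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest remaining substring first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No vocabulary entry matched: fall back to a single character.
            pieces.append(word[i])
            i += 1
    return pieces


def encode(word: str, vocab: dict[str, int]) -> list[int]:
    """Map each piece to its integer ID; this is what the model actually receives."""
    return [vocab.get(piece, UNK_ID) for piece in tokenize(word, vocab)]


print(tokenize("strawberry", FULL_VOCAB))     # ['strawberry']
print(encode("strawberry", FULL_VOCAB))       # [1]
print(tokenize("strawberry", SUBWORD_VOCAB))  # ['straw', 'berry']
print(encode("strawberry", SUBWORD_VOCAB))    # [2, 3]
```

Either way, the model sees one or two integers rather than ten letters.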

So while token-based LLMs are good at predicting the content of a sentence from context, they have difficulty breaking words down into individual letters. 'This problem may not arise in model architectures that can look at individual characters directly without tokenizing them, but with the current Transformer architecture, this is not possible,' says Jog.
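The difference between a character-level view and a token-level view can be sketched as follows. This is an illustrative analogy under stated assumptions, not a description of any model's internals: the token IDs and the `ID_TO_TEXT` spelling table are hypothetical. With characters, counting 'r' is a direct scan; with token IDs, the letters are simply not present, so the count is only recoverable if the spelling of each token is already known.

```python
# Hypothetical token IDs and spellings, for illustration only.
ID_TO_TEXT = {2: "straw", 3: "berry"}


def count_in_chars(chars: list[str], letter: str) -> int:
    """Character-level view: every element is one letter, so just scan."""
    return sum(1 for c in chars if c == letter)


def count_in_token_ids(token_ids: list[int], letter: str) -> int:
    """Token-level view: IDs carry no letters, so a spelling lookup is required."""
    return sum(ID_TO_TEXT[t].count(letter) for t in token_ids)


word = "strawberry"
token_ids = [2, 3]  # hypothetical encoding: 'straw' + 'berry'

print(count_in_chars(list(word), "r"))       # 3
print("r" in token_ids)                      # False: the IDs contain no letters
print(count_in_token_ids(token_ids, "r"))    # 3, but only via the lookup table
```

An LLM is not handed a table like `ID_TO_TEXT` at inference time; it can only rely on whatever it has implicitly learned about how each token is spelled, which is where miscounts creep in.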

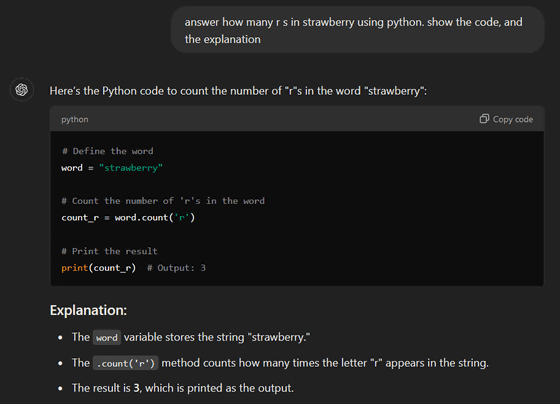

Taking these limitations of LLMs into account, Jog also explains how to work around the strawberry problem: instead of asking in ordinary sentences, ask the model to answer using programming-language code. In the scene below, Jog asks ChatGPT to 'answer how many rs in strawberry using python. show the code, and the explanation.' ChatGPT used Python's count function and answered 'Output = 3', the correct number of 'r's.
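The article does not reproduce the exact code ChatGPT produced, so the following is only a minimal sketch of the same idea. It uses Python's built-in `str.count` and adds a hypothetical helper, `letter_positions`, to also report where each letter occurs. Because the code operates on the actual characters of the string, it sidesteps tokenization entirely.

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of a letter, ignoring case, using str.count."""
    return word.lower().count(letter.lower())


def letter_positions(word: str, letter: str) -> list[int]:
    """Hypothetical helper: 1-based positions at which the letter appears."""
    return [i + 1 for i, c in enumerate(word.lower()) if c == letter.lower()]


for word, letter in [("strawberry", "r"), ("mammal", "m"), ("hippopotamus", "p")]:
    print(word, letter, count_letter(word, letter), letter_positions(word, letter))
# strawberry r 3 [3, 8, 9]
# mammal m 3 [1, 3, 4]
# hippopotamus p 3 [3, 4, 6]
```

The positions for 'strawberry' match the third, eighth, and ninth letters noted earlier.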

'A simple character-counting experiment reveals a fundamental limitation of LLMs. It shows that LLMs are token pattern-matching prediction algorithms, not an "intelligence" capable of understanding or reasoning. However, knowing in advance which prompts work well can help mitigate the problem to some extent. As AI becomes more integrated into our lives, it is important to be aware of its limitations in order to use it responsibly and keep our expectations realistic,' Jog concludes.

in Software, Posted by log1e_dh