Apple abandons plans to implement "a system that checks iCloud Photos for child sexual abuse images"

Apple announced plans to scan photos that users store in iCloud to detect child sexual abuse material (CSAM), but the plan drew widespread criticism. Apple has now reportedly scrapped plans to introduce this CSAM detection system.

Apple Kills Its Plan to Scan Your Photos for CSAM. Here's What's Next | WIRED

https://www.wired.com/story/apple-photo-scanning-csam-communication-safety-messages/

Apple Abandons Controversial Plans to Detect Known CSAM in iCloud Photos - MacRumors

https://www.macrumors.com/2022/12/07/apple-abandons-icloud-csam-detection/

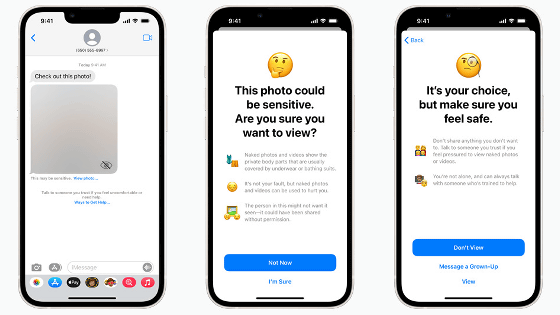

In August 2021, Apple announced that it would introduce a system to detect CSAM stored in iCloud Photos.

Apple's "efforts to scan iPhone photos and messages" to prevent child sexual exploitation will be carried out in accordance with each country's laws - GIGAZINE

The CSAM detection system compares the hashes of images in iCloud Photos against hashes registered in known CSAM databases, and Apple said it could check for CSAM this way without compromising user privacy. However, the method was shown to be imperfect and was criticized by many, including organizations such as the Electronic Frontier Foundation, human rights groups, politicians, and Apple's own employees.
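The hash-matching idea described above can be sketched roughly as follows. This is a minimal, hypothetical Python illustration, not Apple's NeuralHash-based system: it omits the cryptography Apple's design layered on top, and the 64-bit hash values, the `max_distance` bit tolerance, and the match `threshold` are all made-up assumptions. Each photo hash is compared against a set of known hashes, and an account is flagged only after several near matches.

```python
# Hypothetical sketch only: NOT Apple's implementation.
# Hashes are made-up 64-bit integers standing in for perceptual hashes.

def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two non-negative integer hashes."""
    return bin(a ^ b).count("1")


def count_matches(photo_hashes, known_hashes, max_distance=4):
    """Count photos whose hash lies within max_distance bits of any known hash."""
    matches = 0
    for h in photo_hashes:
        if any(hamming_distance(h, k) <= max_distance for k in known_hashes):
            matches += 1
    return matches


def should_flag(photo_hashes, known_hashes, threshold=3, max_distance=4):
    """Flag only when the number of matches reaches a (hypothetical) threshold."""
    return count_matches(photo_hashes, known_hashes, max_distance) >= threshold


if __name__ == "__main__":
    known = {0x0F0F0F0F0F0F0F0F, 0x123456789ABCDEF0}
    photos = [0x0F0F0F0F0F0F0F0E, 0xFFFFFFFFFFFFFFFF, 0x123456789ABCDEF0]
    print(count_matches(photos, known))  # one near match plus one exact match
    print(should_flag(photos, known))    # below the threshold of 3
```

Because near matches count, two unrelated images whose hashes happen to fall within the tolerance would also match, which illustrates why hash-based matching of this kind can produce false positives.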

It turns out there is a big hole in Apple's "iPhone child pornography detection system," which faces fierce opposition from around the world - GIGAZINE

Apple initially said it would implement the CSAM detection system in iOS 15 and iPadOS 15 by the end of 2021, but in response to the criticism it announced that the rollout would be postponed "based on feedback from customers, advocacy groups, researchers, and others."

Apple announces postponement of "function to detect child pornographic images on iPhone" - GIGAZINE

Apple had said nothing about the CSAM detection system for more than a year after announcing the postponement, but it told the news site WIRED: "After extensive consultation with experts to gather feedback on the child protection initiatives we proposed in 2021, we have decided to deepen our investment in the Communication Safety feature that we first made available in December 2021. We have also decided not to move forward with the previously proposed CSAM detection tool for iCloud Photos."

"We will continue working with governments, child advocacy groups, and other companies to help protect young people, protect their right to privacy, and make the internet a safer place for children and for all of us," Apple said.


in Web Service, Smartphone, Posted by log1i_yk