Apple announces postponement of 'feature to detect child pornography images on iPhone'

On August 5, 2021, Apple announced a new safety feature designed to prevent the spread of sexually abusive content involving children: it would scan images of 'Child Sexual Abuse Material (CSAM)' stored in users' iCloud Photos and report them to the relevant agency. Apple has now announced that it will postpone the release of this feature, following a huge backlash claiming that it 'damages user privacy.'

Child Safety - Apple

https://www.apple.com/child-safety/

Apple delays controversial child protection features after privacy outcry - The Verge

https://www.theverge.com/2021/9/3/22655644/apple-delays-controversial-child-protection-features-csam-privacy

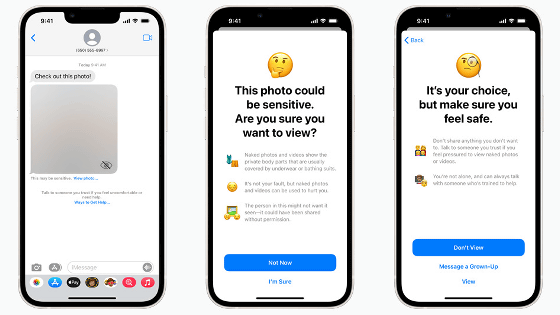

On August 5th, Apple announced two main safety features. The first warns parents when their child sends or receives sexually explicit photos, and blurs the image. The second 'scans CSAM images stored in the user's iCloud Photos and reports them to Apple's moderators, who then report them to the National Center for Missing and Exploited Children (NCMEC).' The second feature uses a machine learning algorithm called 'NeuralHash' to detect CSAM by matching the hash value of each photo against a database, but critics both inside and outside the company have pushed back strongly, saying it 'damages user privacy.'
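The matching step described above can be illustrated with a minimal sketch. NeuralHash itself is a neural-network-based perceptual hash, so the code below substitutes a simple 'average hash' over a tiny grayscale pixel grid as a hypothetical stand-in; the `KNOWN_HASHES` set is likewise a made-up placeholder for the database, not real data.

```python
# Toy stand-in for NeuralHash (hypothetical): an "average hash" that emits
# one bit per pixel, 1 if the pixel is brighter than the image's mean.
def average_hash(pixels):
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)

# Hypothetical database of known hashes (placeholder, not real data).
KNOWN_HASHES = {"1001"}

def is_flagged(pixels, known=KNOWN_HASHES):
    # A photo is flagged when its hash appears in the database.
    return average_hash(pixels) in known

photo = [[200, 10], [20, 220]]   # 2x2 grayscale "image"
print(average_hash(photo))       # 1001
print(is_flagged(photo))         # True
```

Only the hash is compared, never the image itself, which is why Apple argued the approach preserves privacy.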

Apple announced that it will scan iPhone photos and messages to prevent sexual exploitation of children, and the Electronic Frontier Foundation and others protest that 'it will impair user security and privacy' - GIGAZINE

Apple explained in its FAQ that 'detection of child sexual abuse material does not compromise privacy.' However, critics pointed out that NeuralHash, the algorithm used to detect CSAM, has a flaw known as a 'hash collision,' in which different images produce the same hash. This creates the possibility of false positives, and makes it possible to deliberately craft 'an image that looks harmless but is recognized as a child sexual abuse image.' There have also been reports of hash collisions occurring by chance between unrelated images that were never designed to trigger false positives.
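A hash collision is easy to reproduce with a toy hash. The sketch below uses a simple 'average hash' as a hypothetical stand-in for NeuralHash (it is not Apple's algorithm) and shows two different pixel grids that produce the same hash, which is exactly the situation that can cause a false positive.

```python
# Toy "average hash" (hypothetical stand-in for NeuralHash): one bit per
# pixel, 1 if the pixel is brighter than the image's mean brightness.
def average_hash(pixels):
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)

image_a = [[200, 10], [20, 220]]   # mean 112.5 -> bits 1,0,0,1
image_b = [[130, 0], [100, 255]]   # mean 121.25 -> bits 1,0,0,1

print(image_a != image_b)                              # True: different pixels
print(average_hash(image_a) == average_hash(image_b))  # True: same hash
```

Real perceptual hashes use many more bits than this four-bit toy, which makes accidental collisions rarer, but the reported NeuralHash collisions show they are not impossible.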

Apple's 'Child Pornography Detection System in iPhone' Turns Out to Have a Big Hole - GIGAZINE

In response to the backlash, Apple updated its 'Child Safety' page on September 3, 2021. The safety features had originally been planned for release in 2021, but Apple announced that it would postpone them, stating: 'Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.' At the time of writing, it is unclear when the postponed features will be released.
