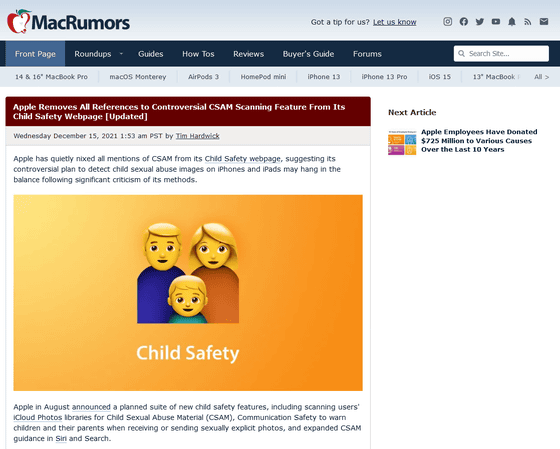

Apple removes mention of child sexual abuse material (CSAM) detection

Apple Removes All References to Controversial CSAM Scanning Feature From Its Child Safety Webpage [Updated] - MacRumors

https://www.macrumors.com/2021/12/15/apple-nixes-csam-references-website/

Apple scrubs controversial CSAM detection feature from webpage but says plans haven't changed - The Verge

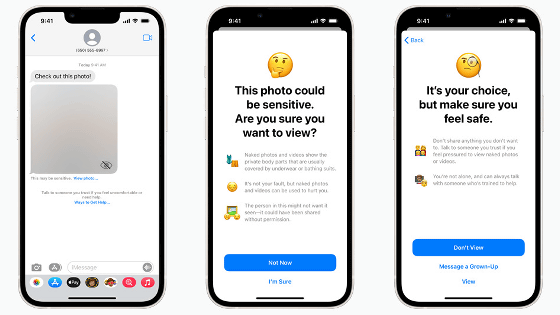

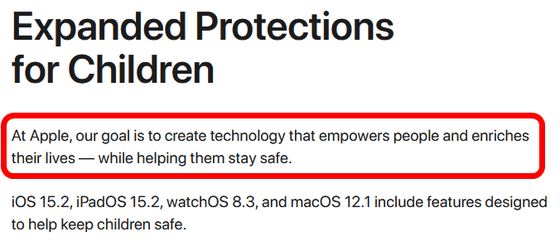

The change was pointed out by the news site MacRumors. The page states that Apple's goal is to 'create technology that empowers people and enriches their lives, helping people stay safe,' and explains that the messaging app has a feature that issues a warning when children send or receive nude photos.

Child Safety - Apple

https://www.apple.com/child-safety/

Until December 10, 2021, in addition to the goal described above, the page also said that Apple wanted to help protect children from predators who use communication tools to reach them, and to help curb the spread of child sexual abuse material (CSAM). That wording has since disappeared from the page.

The Internet Archive has preserved the page as it appeared on December 10:

Child Safety - Apple

https://web.archive.org/web/20211210163051/https://www.apple.com/child-safety/

Given the removal of the mention, MacRumors speculates that Apple may have abandoned its plans for CSAM scanning.

However, when the news site The Verge contacted Apple, spokesperson Shane Bauer said that Apple's position has not changed since September 2021, when it announced that it was postponing the feature. Apple therefore does not appear to have given up on it.

in Note, Posted by logc_nt