Why did Apple give up on developing a child pornography detection tool?

In August 2021, Apple announced plans to implement a feature that scans photos and messages on the iPhone to detect child sexual abuse material (CSAM). The plan was criticized on the grounds that scanning data on the iPhone compromises user privacy, and it was reported in December 2022 that Apple had abandoned the feature. Responding to questions from a child protection organization, Apple has now revealed why it abandoned the data scanning function.

Apple's Decision to Kill Its CSAM Photo-Scanning Tool Sparks Fresh Controversy | WIRED

https://www.wired.com/story/apple-csam-scanning-heat-initiative-letter/

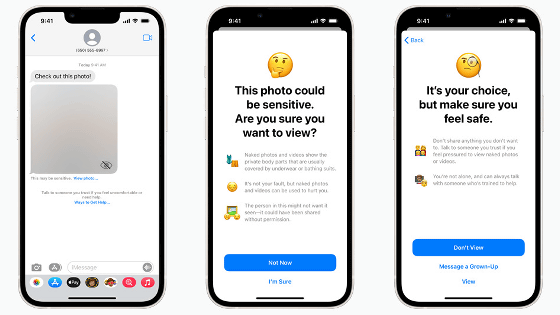

The child protection features Apple planned included a feature in the standard iOS Messages app that blurs sexually explicit images and warns the user before sending one, a feature that checks whether photos saved to iCloud Photos are CSAM, and a feature that uses Siri and Search to warn users who search for CSAM.

Apple announces that it will scan iPhone photos and messages to prevent child sexual exploitation, and the Electronic Frontier Foundation and others protest that it "undermines user security and privacy" - GIGAZINE

Apple had planned to roll out the CSAM detection tool by the end of 2021, but immediately after the announcement the Electronic Frontier Foundation and others complained that it would "compromise user privacy," and in September 2021 Apple announced a postponement.

Apple announces postponement of "feature to detect child pornography images on iPhone" - GIGAZINE

In December 2022, Apple announced: "After extensive consultation with experts to gather feedback on the child protection initiatives we proposed in 2021, we are deepening our investment in the Communication Safety feature that we first made available in December 2021. We have further decided not to move forward with our previously proposed CSAM detection tool for iCloud Photos." This made clear that the CSAM detection tool had been abandoned. However, as of December 2022, Apple had not disclosed why it abandoned the tool.

Apple abandons implementation of "system to check iCloud Photos for images of sexual child abuse" - GIGAZINE

Apple has now explained why it gave up on the CSAM detection tool, in response to a question from the child protection organization Heat Initiative: "Why did you give up on implementing a feature that is extremely important for child protection?"

According to Apple's response, the iCloud data scanning that the CSAM detection tool would require could create a new capability that attackers might find and exploit. Apple also said it was concerned that "once CSAM scanning begins, its scope will gradually expand, leading to widespread scanning of encrypted messages," and these concerns led it to abandon the CSAM detection tool.

In 2021, Apple was considering scanning encrypted messages as part of CSAM detection, but at the time of writing it has shifted its focus to protecting encrypted messages. For example, when a bill was proposed in the United Kingdom that would give the Home Office the power to force companies to disable end-to-end (E2E) encryption, Apple issued a statement saying the bill should be rejected in order to protect security.

Apple opposes the UK surveillance law amendment bill, saying it is extraterritorial and poses a security risk and should be rejected - GIGAZINE

in Smartphone, Posted by log1o_hf