Apple Says Its 'Efforts to Scan iPhone Photos and Messages' to Prevent Child Sexual Exploitation Will Follow Each Country's Laws

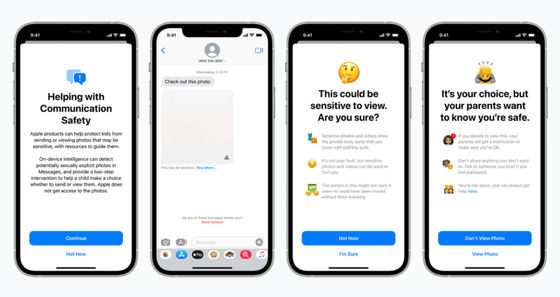

On August 5, 2021, Apple announced that it will scan the messages and photos of Apple device users in the United States for content related to 'Child Sexual Abuse Material (CSAM)' in an effort to prevent its spread. The initiative has drawn considerable criticism, including the objection that 'it is an invasion of privacy.' Apple has now made clear that any rollout outside the United States will be carried out on a country-by-country basis in accordance with local laws and regulations.

Apple says any expansion of CSAM detection outside of the US will occur on a per-country basis ―― 9to5Mac

The measures Apple announced on August 5 to prevent the spread of CSAM cover the 'Messages app,' 'iCloud Photos,' and 'Siri and search functions,' and Apple says they check content without compromising user privacy. For example, they warn a user who searches for CSAM and notify parents when a child sends CSAM. Details of each feature Apple plans to implement are summarized in the following article.

Apple announces that it will scan iPhone photos and messages to prevent sexual exploitation of children, drawing protests from the Electronic Frontier Foundation and others that 'it will compromise user security and privacy' --GIGAZINE

Organizations such as the Electronic Frontier Foundation and the Center for Democracy and Technology have criticized Apple's announced CSAM spread prevention system as compromising privacy. Apple, however, emphasizes that the system is necessary to protect children and that all of it is designed with privacy in mind.

Beyond the privacy concerns, some say that Apple's CSAM spread prevention system is 'like installing a backdoor.' Specifically, they worry about what would happen if government agencies tried to use the system for other purposes.

The Electronic Frontier Foundation said, 'The system that Apple is building is like a narrow backdoor, and all that is needed to open this backdoor is an expansion of the machine learning parameters to scan a wider range of content than just CSAM, or a fine-tuning of the configuration flags to scan all accounts, not just children's.' It warns that simply adjusting the CSAM spread prevention system could turn it into a dangerous system for monitoring users.

In addition, 9to5Mac, a media outlet that covers Apple, asked Apple, 'How will CSAM content be detected outside the United States?' Apple did not reveal details about when it will expand its efforts outside the United States, but said that it would expand the system in accordance with the laws and regulations of each country and region.

in Smartphone, Posted by logu_ii