Concerns are being voiced inside Apple about the 'iPhone photo and message scanning' the company has announced.

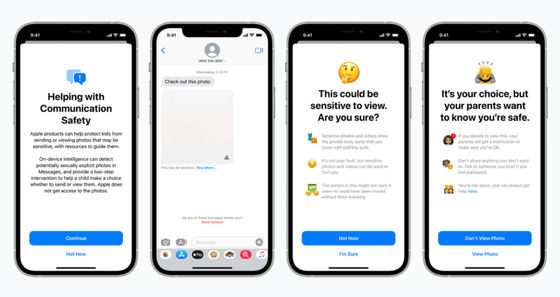

On August 5, 2021, Apple announced measures to prevent the spread of child sexual abuse material (CSAM) by scanning iPhone photos and messages for such content. In response, some groups have protested that 'it will impair the security and privacy of users', and Reuters now reports that new concerns are being raised within Apple itself.

Exclusive: Apple's child protection features spark concern within its own ranks -sources | Reuters

https://www.reuters.com/technology/exclusive-apples-child-protection-features-spark-concern-within-its-own-ranks-2021-08-12/

Apple employees express concerns about new CSAM scanning --9to5Mac

https://9to5mac.com/2021/08/12/apple-employees-express-concerns-about-new-csam-scanning/

The measures Apple announced to prevent the spread of CSAM apply mainly to the Messages app, iCloud Photos, and Siri and Search. Specifically, they check content in a way that is said not to compromise privacy, warn users who search for CSAM, and notify parents when a child sends CSAM. Details of each function are summarized in the following article.

Apple announced that it will scan iPhone photos and messages to prevent sexual exploitation of children, and protests from the Electronic Frontier Foundation and others that 'it will impair user security and privacy' --GIGAZINE

Apple's CSAM prevention measures have been criticized by organizations such as the Electronic Frontier Foundation and the Center for Democracy and Technology on the grounds that 'privacy is compromised,' and according to Reuters, employees are also raising concerns in the internal Slack channel that Apple employees use.

According to an anonymous Apple employee who provided information to Reuters, more than 800 messages have been posted to the company's internal Slack channel since Apple announced the CSAM prevention system on August 5, debating the pros and cons of the system. Many employees worry that oppressive governments could abuse the system to censor or arrest people.

Apple employees later created a dedicated thread to discuss the CSAM prevention system, though some employees reportedly said that 'Slack is not the best place for this kind of discussion'. There are also voices within the company that support the system, saying that it will lead to a crackdown on illegal content.

Slack came into wider use inside Apple when the COVID-19 pandemic forced the company to close offices around the world. Apple is also known for having formed a top-secret team of former FBI and Secret Service personnel to thoroughly prevent leaks of confidential information such as new products. However, Apple-focused media outlet 9to5Mac points out that the spread of Slack within the company has 'created a place to discuss what employees think about their products and services'.

In addition, Apple employees have used Slack to discuss denied requests to work from home during the COVID-19 pandemic and to conduct a survey on equal pay for equal work, and communication within the company appears to be accelerating.

Apple, for its part, has told employees not to discuss sensitive topics such as labor issues on Slack, but even so, the discussion does not appear to have been completely suppressed.

in Hardware, Smartphone, Posted by logu_ii