90 human rights groups issue open letter protesting 'scanning of iPhone photos and messages', fearing that children's rights will be violated

Ninety groups from around the world, including one from Japan, have released an open letter opposing Apple's plan to detect and report child pornography by scanning photos and messages on Apple devices such as the iPhone and in iCloud.

International Coalition Calls on Apple to Abandon Plan to Build Surveillance Capabilities into iPhones, iPads, and other Products - Center for Democracy and Technology

Apple photo-scanning plan faces global backlash from 90 rights groups | Ars Technica

https://arstechnica.com/tech-policy/2021/08/apple-photo-scanning-plan-faces-global-backlash-from-90-rights-groups/

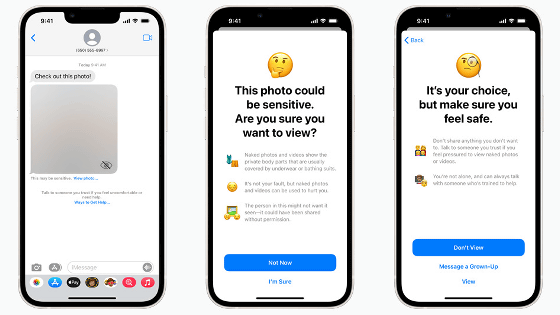

Apple's plan to detect 'Child Sexual Abuse Material (CSAM)' on iPhones and in iCloud, announced on August 5, 2021, has been criticized for the danger that it could lead to increased surveillance and censorship and for the risk of false positives. Concerns are being voiced both inside and outside Apple.

Apple's 'Child Pornography Detection System in iPhone' Turns Out to Have a Big Hole - GIGAZINE

On August 19, 2021, 90 organizations from around the world jointly issued an open letter (PDF file) calling on Apple to abandon its plan to scan images and messages. The letter was signed by organizations from countries including the United States, Canada, Germany, United Kingdom, Hong Kong, Spain, Argentina, Belgium, Brazil, Colombia, Dominica, Ghana, Guatemala, Honduras, India, Kenya, Mexico, Nepal, Netherlands, Nigeria, Pakistan, Panama, Paraguay, Peru, Senegal, and Tanzania. From Japan, JCA-NET, an NGO working in the field of telecommunications, is participating.

The letter expresses serious concern that the scanning could be used to censor speech and would threaten the privacy and security of Apple product users around the world. It also points out that algorithms for detecting sexually explicit content are unreliable, and that children's iPhone accounts may be managed by parents who are abusing them or who lack an understanding of LGBT+ issues. 'We support efforts to protect children and are categorically opposed to the spread of CSAM,' the letter says, but it argues that the measures announced by Apple could instead endanger children and other users.

Sharon Bradford Franklin, co-head at the Center for Democracy & Technology, the American non-profit organization that compiled the joint letter, said in a statement, 'We anticipate that governments will abuse the surveillance features Apple is building into devices such as iPhones and iPads. Such governments will ask Apple to scan for and block content that should be protected as free expression,' pointing out concerns about the suppression of speech.

in Web Service, Security, Posted by log1l_ks