What exactly is 'CFG (classifier-free guidance)' that determines how much prompt / spell instructions are followed in the image generation AI 'Stable Diffusion'?

AI '

CLASSIFIER-FREE DIFFUSION GUIDANCE.pdf

https://arxiv.org/pdf/2207.12598.pdf

[Paper commentary] Understanding OpenAI ``GLIDE'' | A fun introduction to AI and machine learning

https://data-analytics.fun/2022/02/13/openai-glide/#toc5

The Road to Realistic Full-Body Deepfakes - Metaphysic.ai

https://metaphysic.ai/the-road-to-realistic-full-body-deepfakes/

When generating images and sounds with AI, rather than simply inputting labeled data and allowing it to learn, a separate classification model (classifier) is prepared at the time of sampling . There is a method called CFG (classifier-free guidance) is an improvement to this method, in which a diffusion model and a classification model are trained simultaneously instead of preparing a separate classification model.

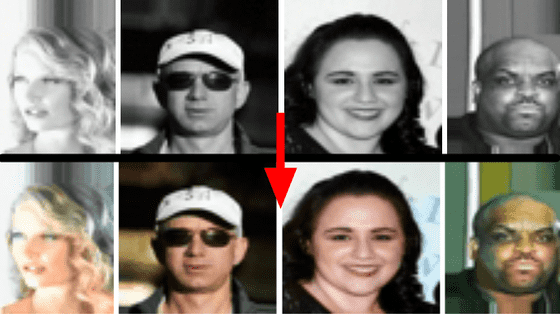

By introducing CFG, it is possible to improve the quality while reducing the sample diversity in the diffusion model. The following images released by Google researchers who announced CFG are 'images generated without using CFG' on the left and 'images generated using CFG' on the right. You can see that the images generated using CFG are very similar in composition and objects, but also in high quality.

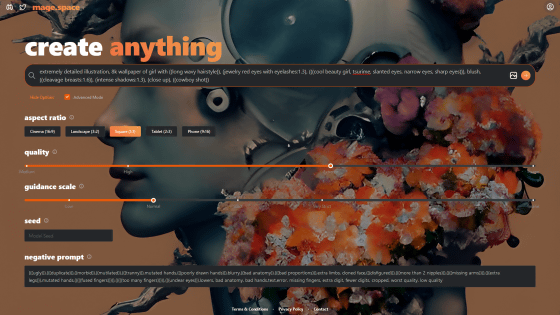

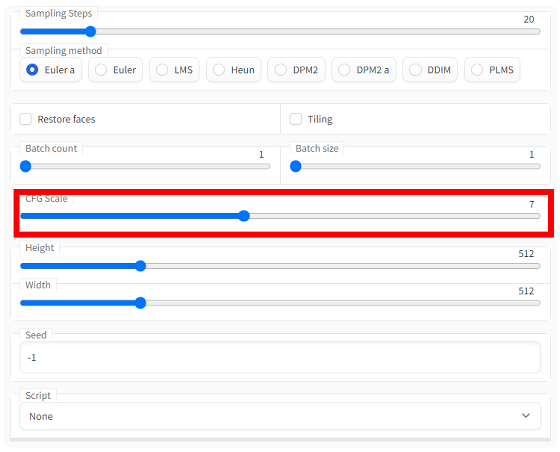

CFG is also one of the main parameters in Stable Diffusion, and the larger the CFG scale, the more likely it is that a new image can be generated according to the image input by the prompt or '

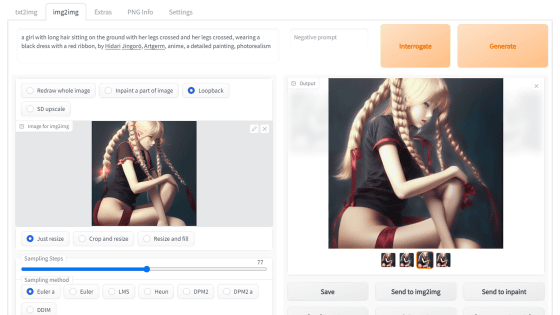

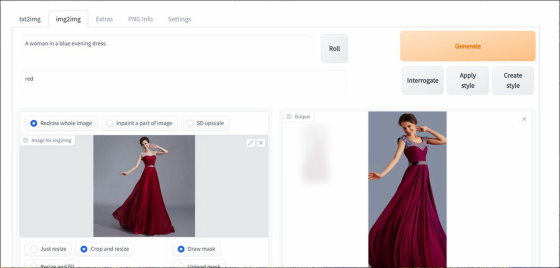

One of the things that Stable Diffusion is not good at in image generation using img2img is 'changing the color of clothes'. Below is Metaphysic using Stable Diffusion, inputting a picture of a woman in a red dress with img2img, and based on that, following the prompt 'A woman in a blue evening dress' This is the screen where I tried to generate the image. The CFG scale is set to ``13.5'', which is slightly higher than the general recommended value (about 7 to 11), and despite the inclusion of ``red'' in the prohibited word, the women's evening dress remains red. It is

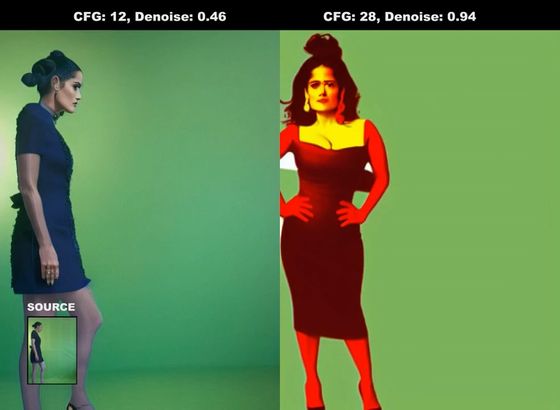

Also, below is the video that Metaphysic tried with Stable Diffusion to ``change the color of the clothes worn by the performer from dark blue to red and make the face

Hayek CFG comparison-YouTube

The left side was generated with standard parameters of 'CFG: 12, noise reduction: 0.46', and the right side was generated with parameters close to the maximum value of 'CFG: 28, noise reduction: 0.94'. The movement of the female performer, which is the input data, is shown in the lower left frame. The texture and pose are clearly different between the left and right side, and you can see that the left side is much more accurate as an image, but only the dress color on the right side is more in line with the prompt. .

The left side has almost the same movement as the performer, but the right side sometimes poses completely different from the performer.

If the CFG scale is too large, the quality will suffer and the image will be completely different from moment to moment. Certainly, the instructions to 'change the color of the dress to red' were faithfully followed, but other parts were greatly damaged.

Metaphysic suggests using materials that are a little closer to what you want to generate in the end as a way to improve these problems.

Related Posts:

in Software, Web Service, Video, Posted by log1h_ik