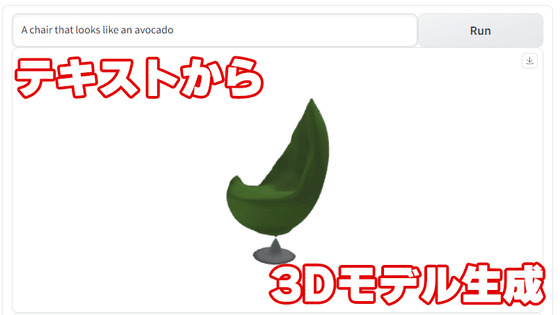

OpenAI announces open-source AI "Shap-E" that generates 3D models from text and images

OpenAI, the developer of the large language model GPT-4 and the chatbot ChatGPT, has announced "Shap-E", an AI that automatically generates 3D models from text or image input. Shap-E is open source and free to use.

GitHub - openai/shap-e: Generate 3D objects conditioned on text or images

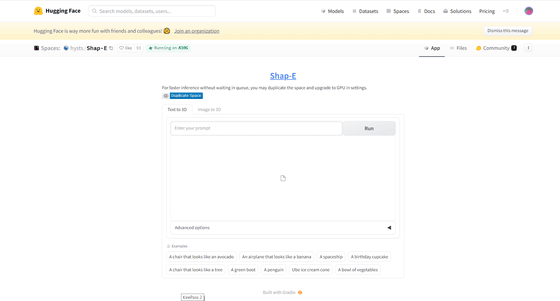

Machine learning engineer hysts has published a demo of Shap-E on Hugging Face, a platform for hosting and sharing AI models, so you can easily see what Shap-E can do right from your browser.

Shap-E - a Hugging Face Space by hysts

https://huggingface.co/spaces/hysts/Shap-E

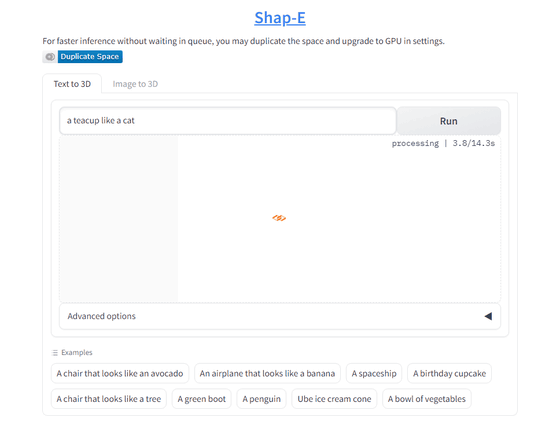

When you access the demo site, it looks like this.

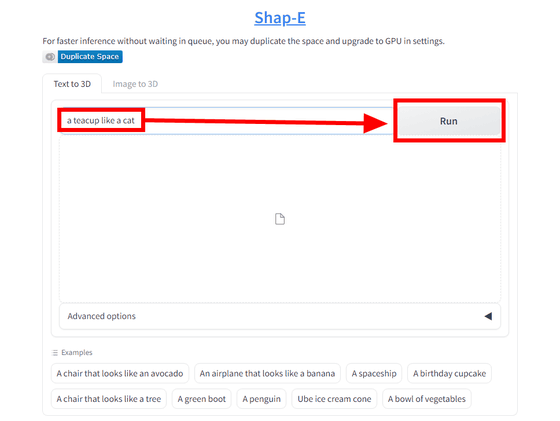

Enter a prompt in the text field and click 'Run'. For this test, I entered 'a teacup like a cat'.

Processing takes a few tens of seconds.

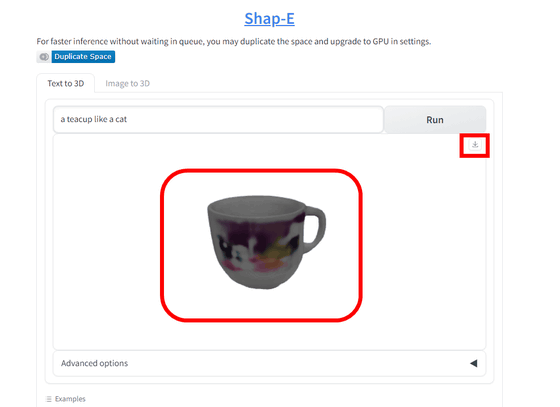

When processing finishes, the generated 3D model is displayed at the bottom of the page, and you can drag it to view it from different angles. Click the '↓' icon in the upper right to download the model in glTF (GL Transmission Format).
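The downloaded file can be inspected with a few lines of code. Assuming the download is the binary variant of glTF 2.0 (a .glb file; the demo's exact output format is an assumption here), the minimal sketch below follows the GLB layout from the glTF 2.0 specification: a 12-byte header (magic "glTF", container version, total length) followed by length-prefixed chunks, the first of which holds the JSON scene description. The helpers `parse_glb` and `make_glb` are hypothetical names, not part of Shap-E or the demo.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON"
CHUNK_BIN = 0x004E4942   # b"BIN\x00"


def parse_glb(data: bytes) -> tuple[dict, bytes]:
    """Split a GLB (binary glTF 2.0) blob into its JSON document and BIN payload."""
    if len(data) < 12:
        raise ValueError("too short to be a GLB file")
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    if version != 2:
        raise ValueError(f"unsupported glTF container version {version}")
    if length > len(data):
        raise ValueError("file is truncated")

    gltf_json, bin_payload = None, b""
    offset = 12
    # Each chunk: uint32 byte length, uint32 chunk type, then the 4-byte-padded data.
    while offset + 8 <= length:
        chunk_len, chunk_type = struct.unpack_from("<II", data, offset)
        chunk = data[offset + 8 : offset + 8 + chunk_len]
        if chunk_type == CHUNK_JSON:
            gltf_json = json.loads(chunk.decode("utf-8"))
        elif chunk_type == CHUNK_BIN:
            bin_payload = chunk
        offset += 8 + chunk_len
    if gltf_json is None:
        raise ValueError("GLB has no JSON chunk")
    return gltf_json, bin_payload


def make_glb(doc: dict) -> bytes:
    """Build a minimal JSON-only GLB in memory (hypothetical helper for testing)."""
    payload = json.dumps(doc).encode("utf-8")
    payload += b" " * (-len(payload) % 4)  # the JSON chunk is space-padded to 4 bytes
    chunk = struct.pack("<II", len(payload), CHUNK_JSON) + payload
    return struct.pack("<III", GLB_MAGIC, 2, 12 + len(chunk)) + chunk


doc, binary = parse_glb(make_glb({"asset": {"version": "2.0"}, "meshes": []}))
print(doc["asset"]["version"], len(doc["meshes"]), len(binary))  # 2.0 0 0
```

To inspect a real download, pass the file's bytes instead, e.g. `parse_glb(open(path, "rb").read())`, where `path` is wherever you saved the model; the returned JSON lists the meshes, materials, and buffers that make up the model.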

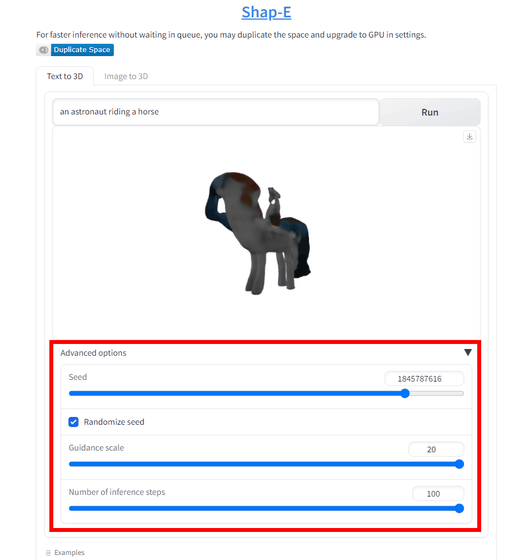

Clicking 'Advanced options' below the 3D model viewer lets you change the Seed, Guidance scale, and Number of inference steps. I set the guidance scale to 20 and the number of steps to 100, then generated 'an astronaut riding a horse', shown below. Perhaps because of these settings, the horse and the astronaut merged into a mysterious life form.
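The guidance scale is most likely the weight used in classifier-free guidance, which diffusion samplers such as Shap-E's commonly use to combine a prompt-conditioned prediction with an unconditional one: ε = ε_uncond + s·(ε_cond − ε_uncond). The number of inference steps is, roughly, how many denoising iterations the sampler runs, each applying this combination once. The toy sketch below shows only the guidance formula on plain Python lists rather than Shap-E's tensors; the function name `guided_prediction` and the numbers are hypothetical.

```python
def guided_prediction(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: move from the unconditional prediction
    toward (and, for scales above 1, past) the prompt-conditioned one."""
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same shape")
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


uncond = [0.0, 1.0]  # toy output of the model with the prompt dropped
cond = [1.0, 1.0]    # toy output of the model given the prompt

print(guided_prediction(uncond, cond, 1.0))   # [1.0, 1.0]  plain conditional output
print(guided_prediction(uncond, cond, 20.0))  # [20.0, 1.0] difference amplified 20x
```

At a scale of 1 the result is simply the conditional prediction; larger values follow the prompt more aggressively, but an extreme value like 20 amplifies the difference so much that samples can drift away from anything the model learned, which may be one reason high settings produce strange shapes.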

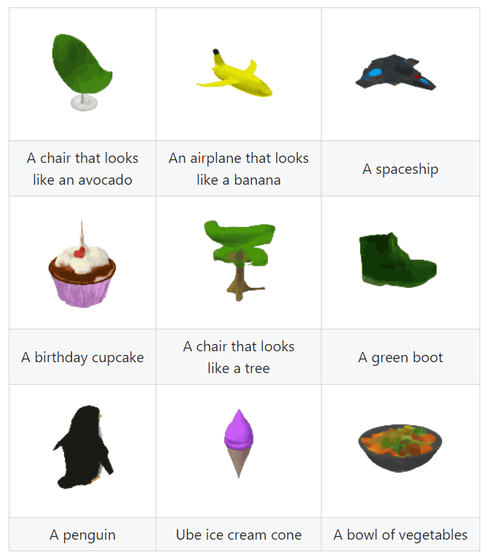

The GitHub repository also includes a collection of prompts and sample outputs.

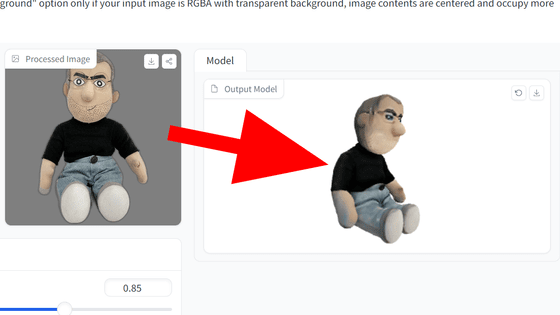

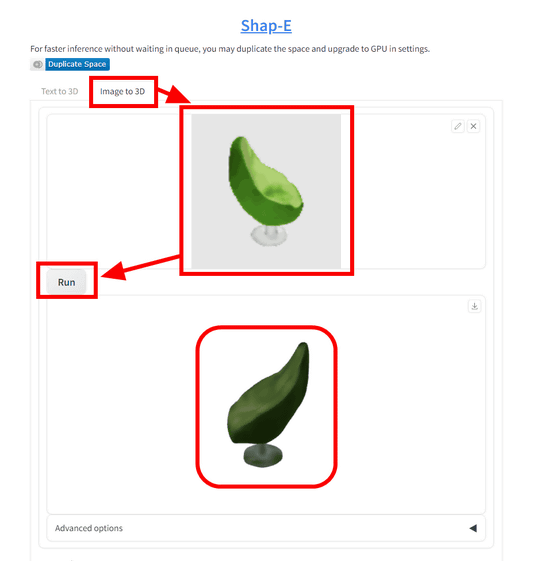

Shap-E also has an 'Image to 3D' mode that generates a 3D model from an image. Click the 'Image to 3D' tab, load the image you want to convert, and click 'Run'. When I loaded a still image of the 'A chair that looks like an avocado' example from the GitHub samples, the result was close to the original 3D model.

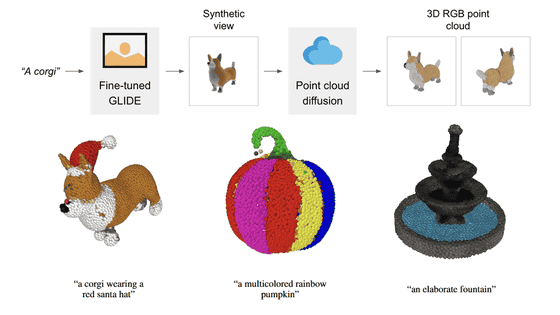

OpenAI had previously developed 'Point-E', another AI that generates 3D models. Point-E was characterized by building its 3D models out of clouds of colored points.

3D model generation AI "Point-E" is open-sourced by OpenAI so that anyone can download it, generating and displaying 3D objects from prompts 600 times faster than before - GIGAZINE

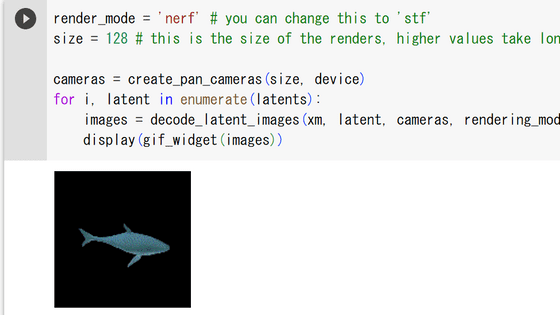

Unlike Point-E, Shap-E generates the parameters of implicit functions that can be rendered both as textured meshes and as NeRFs (Neural Radiance Fields, a technique originally used to reconstruct 3D scenes from photographs taken from various angles), which allows more flexible expression than before. However, Shap-E has limitations: it struggles to bind multiple attributes to different objects and to reliably produce the requested number of objects, and it seems it may require more computing power than Point-E. OpenAI attributes these limitations to a shortage of training data and says that training on larger datasets should improve performance.
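Because Shap-E's outputs can be rendered as NeRFs, it may help to see how a NeRF turns learned density and color into pixels. The sketch below implements the standard NeRF volume-rendering quadrature for a single camera ray, C = Σ_i T_i (1 − exp(−σ_i δ_i)) c_i with T_i = Π_{j<i} exp(−σ_j δ_j), in plain Python. It is a generic illustration of the technique, not Shap-E's own renderer; the function `render_ray` and the sample values are hypothetical.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite density/color samples along one camera ray, front to back.

    sigmas: volume density at each sample, colors: (r, g, b) at each sample,
    deltas: distance between consecutive samples.
    Returns the pixel color and the leftover transmittance (1.0 = ray hit nothing).
    """
    transmittance = 1.0  # fraction of light that has survived so far
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light blocked by this segment
    return rgb, transmittance


# A faint red haze in front of a solid green surface.
pixel, leftover = render_ray(
    sigmas=[0.1, 50.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[1.0, 1.0],
)
print([round(c, 3) for c in pixel], round(leftover, 3))  # [0.095, 0.905, 0.0] 0.0
```

A full renderer repeats this for one ray per pixel; a mesh renderer instead rasterizes triangles, which is why being able to output both representations gives Shap-E more flexibility.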


in Review, Software, Web Application, Art, Posted by log1i_yk