How can you spot a fake video call by exploiting a weakness of deepfakes?

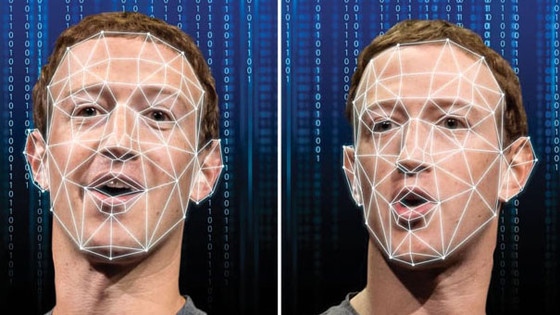

Deepfakes, which use technologies such as artificial intelligence (AI) and machine learning to generate sophisticated fake content, have harmless, entertaining uses: they can create "photos of fictional people who do not exist in this world," and there is even a service that lets anyone appear in a movie trailer. At the same time, the U.S. Federal Bureau of Investigation (FBI) has warned of scams that use deepfakes in job-application images and video calls. Meanwhile, the technology media outlet Metaphysic.ai has pointed out a weakness of deepfakes and explained "how to tell whether the person on the other end of a video call is using a deepfake."

To Uncover a Deepfake Video Call, Ask the Caller to Turn Sideways - Metaphysic.ai

https://metaphysic.ai/to-uncover-a-deepfake-video-call-ask-the-caller-to-turn-sideways/

In July-August 2022, the Better Business Bureau, a Canadian-American non-profit organization that aims to promote trust in advertising and the marketplace, announced that "fraudsters are using deepfakes to deceive consumers by making it appear that celebrities are endorsing their products or by falsely claiming that diet products produce dramatic results." As a countermeasure, the Better Business Bureau's Amy Mitchell advises: "If you look closely at the image or video, you may notice that parts of the screen are blurry. Look carefully for such spots."

Unlike stored images and videos, however, video calls deliver images in real time, so some jerky movement and blurring tend to occur anyway because of the limits of network connections and cameras. According to Metaphysic.ai, real-time deepfakes in video calls went largely unnoticed until very recently, so their weakness was overlooked; yet deepfakes have a fatal weakness that makes them easy to spot. That weakness is that deepfakes are poor at generating profile (side-on) views of a face. Therefore, if you suspect that the other party on a video call is using a deepfake, it is a good idea to ask them to "turn sideways."

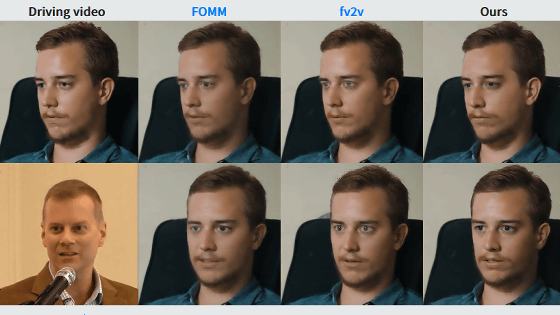

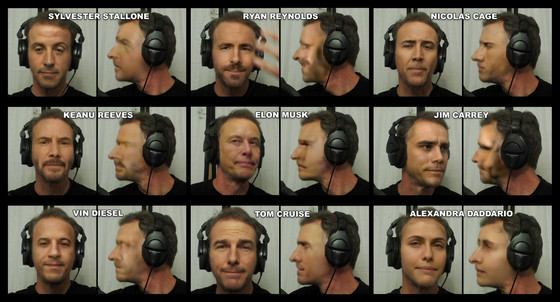

The images below show Bob Doyle, who took part in the test, using deepfakes to take on the appearance of various celebrities during video calls. They include famous actors such as Sylvester Stallone and Ryan Reynolds, business figures like Elon Musk, and the actress Alexandra Daddario, and all of them are convincing. However, in the side-view photo to the right of each, you can see at a glance that the deepfake-generated face has problems: the surface of the face collapses badly and the eyes are not rendered.

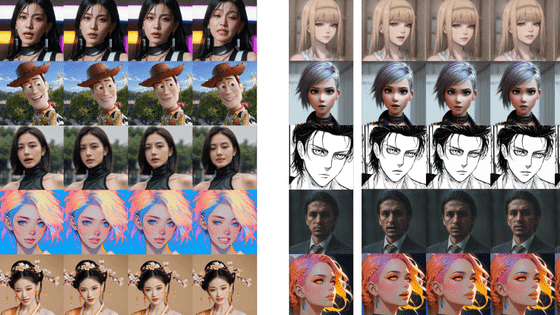

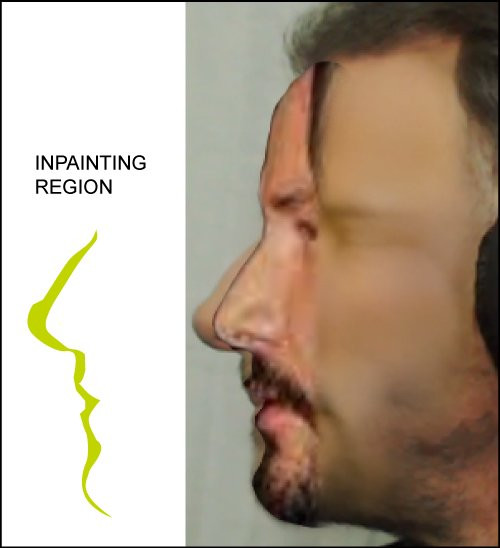

Metaphysic.ai attributes the severe collapse of deepfake profiles to the fact that "the contour and profile information of a face turned sideways is not sufficiently trained." As the images below show, the deepfake model captures only the area near the front of the face and has too little data covering the region from the temples to the cheeks, so it is essentially "inventing" the shape of the face there.

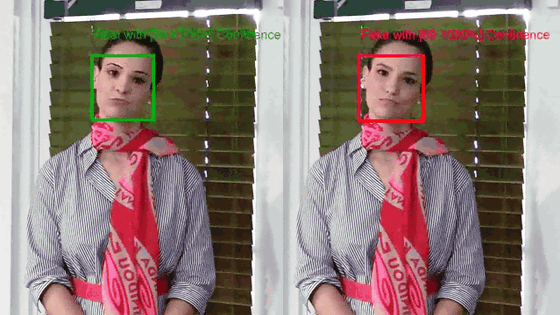

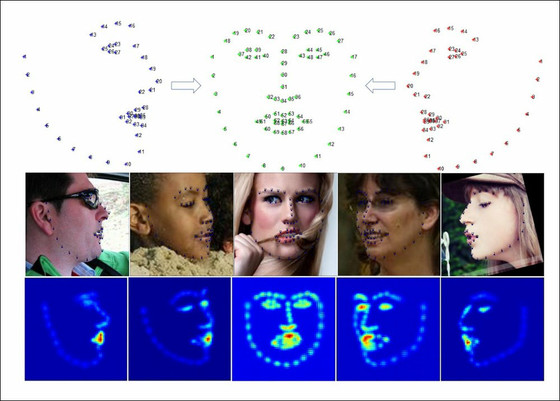
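The claim that profiles are "not sufficiently trained" is ultimately about how head angles are distributed in the training data. The following is a minimal Python sketch, not Metaphysic.ai's method, that measures that distribution. It assumes each training frame already has a head-yaw angle in degrees from some head-pose estimator (the article does not name one), with 0 meaning a frontal face and ±90 a full profile; the function names, the 15° bin width, the 60° profile threshold, and the sample angles are all hypothetical choices for illustration.

```python
from collections import Counter
from typing import Dict, Iterable


def yaw_histogram(yaws_deg: Iterable[float], bin_width: int = 15) -> Dict[int, int]:
    """Count frames per |yaw| bin; keys are bin start angles (0, 15, ..., 75)."""
    counts: Counter = Counter()
    for yaw in yaws_deg:
        # Fold left and right turns together: only how far the head is turned matters.
        magnitude = min(abs(yaw), 90.0)
        # Put exactly 90 degrees into the last bin instead of opening a new one.
        bin_start = min(int(magnitude // bin_width) * bin_width, 90 - bin_width)
        counts[bin_start] += 1
    return dict(sorted(counts.items()))


def profile_fraction(yaws_deg: Iterable[float], threshold_deg: float = 60.0) -> float:
    """Fraction of frames turned at least `threshold_deg` away from the camera."""
    yaws = list(yaws_deg)
    if not yaws:
        return 0.0
    return sum(1 for y in yaws if abs(y) >= threshold_deg) / len(yaws)


if __name__ == "__main__":
    # Hypothetical yaw angles standing in for a frontal-heavy dataset (illustrative only).
    sample = [0, 3, -5, 8, -12, 10, 2, -1, 20, -25, 35, 70]
    print(yaw_histogram(sample))               # {0: 8, 15: 2, 30: 1, 60: 1}
    print(round(profile_fraction(sample), 3))  # 0.083
```

A dataset built mostly from people facing a camera would show most of its mass in the lowest bins, which is the situation Metaphysic.ai describes: the model has very few examples to learn the side of the face from.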

Metaphysic.ai explains why deepfakes have so little profile data as follows. The face-detection software used to build deepfakes relies on landmark points to determine face orientation and on alignment algorithms. In that process, as you can see in the image below published in a

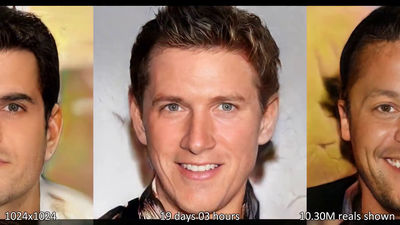
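The landmark-and-alignment mechanism described in that paragraph can be illustrated with a toy filter. The Python sketch below is hypothetical and is not the code of any real deepfake tool: it assumes a landmark detector that returns 2D points for the eyes and nose tip, or None for a point it cannot find (as can happen with the far eye in a side view). Frames missing a point are discarded before alignment, and a crude yaw estimate based on where the nose sits between the eyes rejects near-profile frames, so side views rarely make it into the training set. The yaw formula, the landmark names, and the 60° cutoff are illustrative assumptions, not a calibrated head-pose estimator.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical landmark output: name -> (x, y) in pixels, or None if not detected.
Landmarks = Dict[str, Optional[Point]]

REQUIRED = ("left_eye", "right_eye", "nose_tip")


def rough_yaw_deg(lm: Landmarks) -> Optional[float]:
    """Crude yaw estimate from where the nose tip sits between the two eyes.

    Returns None if a required landmark is missing or the eyes coincide.
    0 means the nose is midway between the eyes (frontal); the value
    approaches +/-90 as the nose moves toward one eye (toward a profile).
    """
    if any(lm.get(name) is None for name in REQUIRED):
        return None
    (lx, _), (rx, _), (nx, _) = lm["left_eye"], lm["right_eye"], lm["nose_tip"]
    span = rx - lx
    if span == 0:
        return None
    # Relative nose position: 0.0 at the left eye, 0.5 midway, 1.0 at the right eye.
    r = (nx - lx) / span
    # Map [0, 1] to [-1, 1], clamp, and convert to an angle in degrees.
    return math.degrees(math.asin(max(-1.0, min(1.0, 2.0 * r - 1.0))))


def keep_for_training(frames: List[Landmarks], max_yaw_deg: float = 60.0) -> List[Landmarks]:
    """Keep only frames a landmark-based aligner could handle."""
    kept = []
    for lm in frames:
        yaw = rough_yaw_deg(lm)
        # Missing landmarks (None) and strongly turned faces are both dropped.
        if yaw is not None and abs(yaw) <= max_yaw_deg:
            kept.append(lm)
    return kept


if __name__ == "__main__":
    frontal = {"left_eye": (100, 120), "right_eye": (160, 120), "nose_tip": (130, 150)}
    turned = {"left_eye": (100, 120), "right_eye": (130, 120), "nose_tip": (129, 150)}
    profile = {"left_eye": None, "right_eye": (130, 120), "nose_tip": (150, 150)}
    print(len(keep_for_training([frontal, turned, profile])))  # 1
```

Only the frontal frame survives; the turned and profile frames are filtered out before training ever sees them, which is one plausible way a pipeline ends up with the profile gap Metaphysic.ai describes.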

Hollywood actors and TV personalities, on the other hand, appear in a great deal of video footage, so quite a few profile views can be detected and used as data, and videos made with fairly advanced deepfakes that render profiles have also been released. In the following video, a fairly tense scene of the movie '

Jerry Seinfeld in Pulp Fiction [DeepFake]-YouTube

Giorgio Patrini, head of the AI security company Sensity, supported the effectiveness of the "turn sideways" method in comments to Metaphysic.ai: "As a countermeasure against deepfakes in video conference calls, having participants submit a profile view in advance as an identity check and confirming it during the call is a practical defense. Put simply, most of the latest deepfake software fails to render the face when it moves or turns sideways." Siwei Lyu, a professor of engineering and applied sciences at the State University of New York at Buffalo and an expert on deepfakes, also endorses the "have them look sideways" countermeasure: "It's a big problem for deepfake technology. Deepfakes work very well on frontal faces, but they don't work very well on side faces, and the AI is more or less guessing."

Siwei Lyu also believes that a new generation of 3D landmark-location systems may improve the performance of deepfake networks. However, this would only make it easier to acquire and use profile data; it does not solve the underlying problem that "unless you are a celebrity with a lot of video exposure, there is generally little profile data available."

However, a paper (PDF file) published by Taipei University presents samples in which fairly natural-looking profile views are generated from frontal photos where the profile can hardly be seen.

According to Metaphysic.ai, movements such as touching your face or waving a hand in front of it can also degrade the quality of a deepfake, but techniques for cleanly handling these "deepfake face occlusion" problems are becoming more sophisticated, and Metaphysic.ai emphasizes that, as things currently stand, the profile is the area that has improved least. On Hacker News, however, a person who says they work on deepfakes posted: "Lack of data is a problem for small-time scammers, but it is hardly reassuring against powerful, malicious ones. It is only a matter of time before deep learning techniques such as NeRF mature. Will turning sideways still be a valid test for cracking deepfakes five years from now?" Metaphysic.ai itself also repeats that the profile is protected from deepfakes "only in the current situation."

Finally, Metaphysic.ai said: "The most threatening aspect of deepfake spoofing and fraud is that we don't expect it. Being aware that it can happen is an important part of countering deepfakes."
