Google reports that sparse inference has achieved faster neural networks

Google reported that it has significantly improved inference speed by incorporating additional

Google AI Blog: Accelerating Neural Networks on Mobile and Web with Sparse Inference

https://ai.googleblog.com/2021/03/accelerating-neural-networks-on-mobile.html

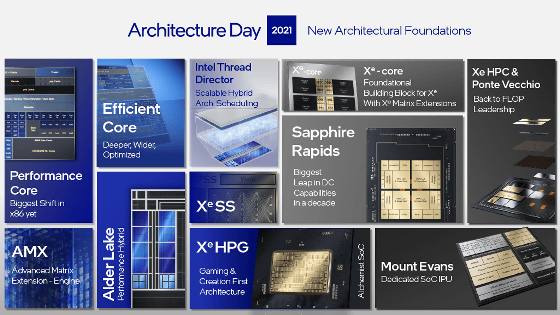

'Sparse' is an English word that means 'sparse'. In the analysis of big data, it is often the case that 'the whole data is large, but only a small part of the meaningful data'. Sparse modeling is a methodology that selects data with these properties and analyzes only meaningful data, and is used for improving the resolution of MRI and X-ray CT, and for increasing the speed and accuracy of three-dimensional structure calculation. I will.

Google has newly announced that it has updated TensorFlow Lite, a software library for machine learning for mobile, and XNNPACK, a neural network inference optimization library, to achieve further sparse optimization. With this new update, it will be possible to detect whether the model to be analyzed is sparse or not, and it will be possible to achieve a significant improvement in inference speed.

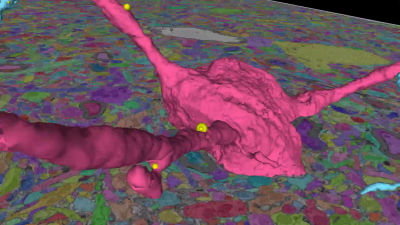

In the real-time processing of the video conferencing application Google Meet , you can see that the sparse model (right side) realizes lower processing time and higher FPS than the old model (left side) as shown in the image below. According to Google, it succeeded in increasing the processing speed by 30% by making it 70% sparse while maintaining the image quality quality of the subject coming in the foreground.

Google says sparsification is a simple and powerful way to improve neural network CPU inference and will continue to do this kind of research.

Related Posts:

in Software, Posted by darkhorse_log