Introducing PixArt-δ, an open-source image generation model that spits out high-resolution AI images in 0.5 seconds

Researchers from Huawei Noah's Ark Lab, Dalian University of Technology, Hugging Face, and others have announced PixArt-δ, a framework that generates images from text.

[2401.05252] PIXART-δ: Fast and Controllable Image Generation with Latent Consistency Models

Meet PIXART-δ: The Next-Generation AI Framework in Text-to-Image Synthesis with Unparalleled Speed and Quality - QAT Global

Open-source PixArt-δ image generator spits out high-resolution AI images in 0.5 seconds

https://the-decoder.com/open-source-pixart-%CE%B4-image-generator-spits-out-high-resolution-ai-images-in-0-5-seconds/

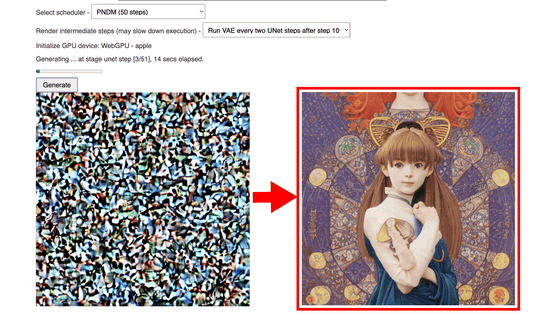

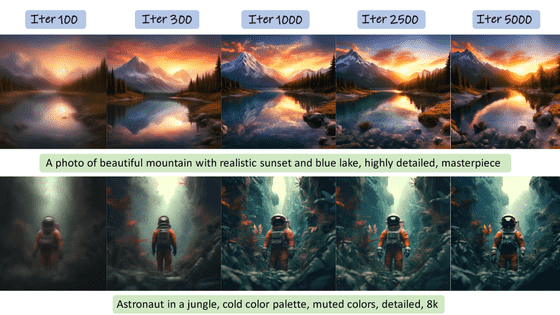

PixArt-δ is a major upgrade to the existing 'PixArt-α' model and can quickly generate images at a resolution of 1024 x 1024 pixels. With just 2 to 4 steps, it can produce an image in as little as 0.5 seconds, 7 times faster than PixArt-α.
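PixArt-δ gets its few-step speed from the Latent Consistency Model (LCM) approach. A consistency model learns a function that jumps from a noisy input straight to a clean estimate. Multistep sampling then re-noises that estimate to a smaller timestep and jumps again, so 2 to 4 network calls are enough. The sketch below shows only that sampling loop, and it rests on several assumptions. It works on a single number instead of an image latent and uses a cosine noise schedule. In place of the distilled network, it uses the exact consistency function of a toy 1-D Gaussian dataset (hypothetical `MU`, `S`). It is not PixArt-δ's implementation.

```python
import math
import random
from typing import List

# Toy data distribution N(MU, S^2) standing in for image latents (assumption).
MU, S = 3.0, 0.5


def alpha(t: float) -> float:
    """Signal scale of a cosine noise schedule, t in [0, 1] (assumed schedule)."""
    return math.cos(math.pi * t / 2)


def sigma(t: float) -> float:
    """Noise scale of the same schedule, so alpha^2 + sigma^2 = 1."""
    return math.sin(math.pi * t / 2)


def consistency_fn(x_t: float, t: float) -> float:
    """Exact consistency function for the toy data: maps x_t at time t straight
    to the x_0 on the same probability-flow ODE path. In 1-D that path is the
    monotone map between two Gaussian marginals, so it has a closed form.
    A real LCM learns this mapping with a distilled network instead."""
    a, s_t = alpha(t), sigma(t)
    marginal_std = math.sqrt(a * a * S * S + s_t * s_t)
    return MU + S * (x_t - a * MU) / marginal_std


def lcm_multistep_sample(timesteps: List[float], rng: random.Random) -> float:
    """Consistency-model multistep sampling over decreasing timesteps:
    jump to a clean estimate, re-noise it to the next timestep, jump again."""
    x = rng.gauss(0.0, 1.0)  # start from pure noise; alpha(1) is ~0
    x0_hat = consistency_fn(x, timesteps[0])
    for t in timesteps[1:]:
        x = alpha(t) * x0_hat + sigma(t) * rng.gauss(0.0, 1.0)
        x0_hat = consistency_fn(x, t)
    return x0_hat


# One 4-step sample; each step is a single call to the consistency function.
sample = lcm_multistep_sample([1.0, 0.75, 0.5, 0.25], random.Random(0))
```

Because this toy function is exact, a 2-step schedule such as `[1.0, 0.5]` and the 4-step one both return samples from N(3, 0.5²). With a learned network, the extra steps give the model more chances to correct its own errors.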

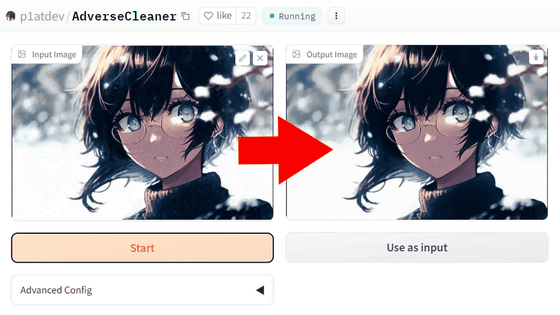

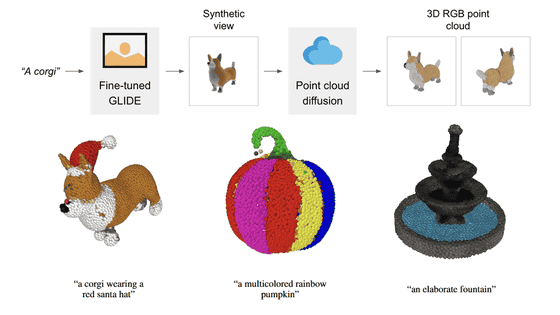

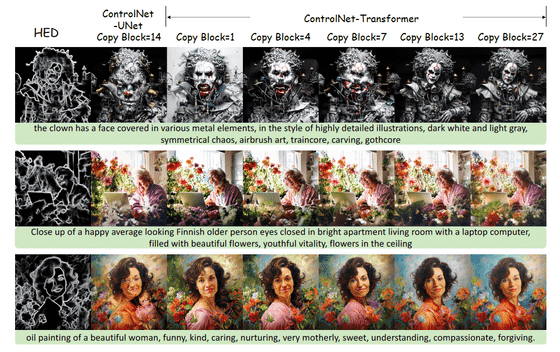

PixArt-α came in two compatible variants. One supports the 'Latent Consistency Model (LCM)', which speeds up image generation. The other supports 'ControlNet', which gives more control over generated images by supplying additional information such as pose and depth. The δ model uses an architecture called 'ControlNet-Transformer' to integrate the two while preserving the effectiveness of each.
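ControlNet's general recipe is to run a trainable copy of the base network's blocks on the control signal. The copy's output is added back through a layer initialised to zero, so training starts from exactly the unchanged base model. The toy below shows that zero-initialised injection for a single block using plain Python lists. The block function, the dimensions and the way the condition is mixed in are all hypothetical, and the ControlNet-Transformer wiring in PixArt-δ may differ in detail.

```python
from typing import List

Vector = List[float]


def base_block(x: Vector) -> Vector:
    """Stand-in for a frozen transformer block (hypothetical toy: h = 2x + 1)."""
    return [2.0 * v + 1.0 for v in x]


class ZeroLinear:
    """Linear layer whose weight and bias start at zero, like ControlNet's zero layers."""

    def __init__(self, dim: int) -> None:
        self.weight = [[0.0] * dim for _ in range(dim)]
        self.bias = [0.0] * dim

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * v for w, v in zip(row, x)) + b
                for row, b in zip(self.weight, self.bias)]


def controlled_block(x: Vector, cond: Vector, zero: ZeroLinear) -> Vector:
    """Frozen block output plus control features injected through the zero layer."""
    frozen_out = base_block(x)
    # The control branch would be a trainable copy of the block's weights;
    # here it simply reuses base_block and sees the input plus the condition.
    control_out = base_block([v + c for v, c in zip(x, cond)])
    return [h + d for h, d in zip(frozen_out, zero(control_out))]


zero = ZeroLinear(2)
print(controlled_block([1.0, -2.0], [5.0, 5.0], zero))  # [3.0, -3.0], same as base_block
zero.weight = [[0.5, 0.0], [0.0, 0.5]]  # pretend training moved the weights
print(controlled_block([1.0, -2.0], [5.0, 5.0], zero))  # [9.5, 0.5]
```

While the zero layer is untouched, the condition has no effect on the output. Once its weights move away from zero (set by hand here to mimic training), the control branch starts to shape the result.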

At the time of writing, only the technical report for PixArt-δ had been published, and no demo had been released yet. However, the LCM and ControlNet variants of PixArt-α, which have been available for some time, can be accessed via the links below.

PixArt LCM - a Hugging Face Space by PixArt-alpha

https://huggingface.co/spaces/PixArt-alpha/PixArt-LCM

PixArt-alpha/PixArt-ControlNet · Hugging Face

https://huggingface.co/PixArt-alpha/PixArt-ControlNet

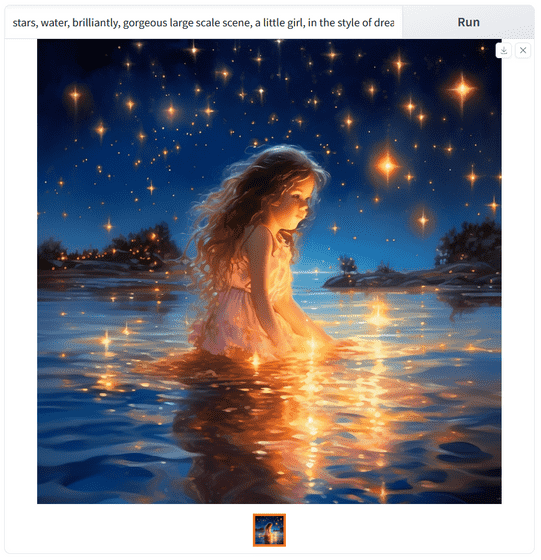

The LCM variant generates images from a text prompt entered in English.

With long prompts, generation can take more than 10 seconds.

Since the δ version needs as little as 0.5 seconds per image, it appears to be many times faster than the α version.

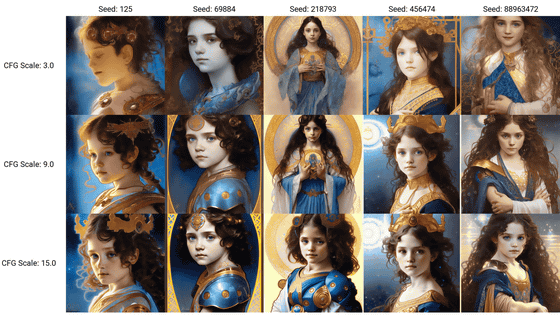

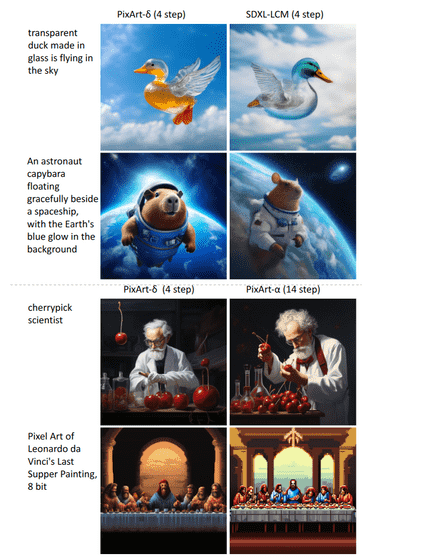

The report also includes an image comparing PixArt-δ (left) with 'LCM SDXL' (right), which can generate images in 2 to 8 steps. At first glance, PixArt-δ's output appears to have slightly higher resolution.

Training efficiency has also improved. The training process fits within a 32GB GPU memory budget, which makes it possible to train even on 'consumer-grade' GPUs. In addition, existing models suffered from lower quality as the number of weights (blocks) increased, and PixArt-δ, which uses ControlNet-Transformer, appears to have overcome this problem.
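A back-of-the-envelope estimate shows why a 32GB budget is plausible. It covers the model-state memory for consistency distillation, where a trainable student sits alongside a frozen teacher and an EMA target copy. Every figure here is an assumption rather than a number from the report:

- The 16 bytes per trainable parameter follow the common mixed-precision Adam accounting: fp16 weights and gradients, plus fp32 master weights and two Adam moments.
- The frozen copies are assumed to be stored in fp16.
- The 600M parameter count is illustrative.
- Activation memory, which depends on batch size and resolution, is ignored.

```python
GIB = 1024 ** 3

# Bytes per parameter (assumed, ZeRO-style mixed-precision Adam accounting).
TRAINABLE_ADAM_MIXED = 2 + 2 + 4 + 4 + 4  # fp16 weights, fp16 grads, fp32 master, Adam m, Adam v
FROZEN_FP16 = 2                            # weights only: no gradients or optimizer state


def lcm_distill_state_gib(n_params: int) -> float:
    """Hypothetical model-state footprint for consistency distillation:
    trainable student + frozen teacher + frozen EMA target of equal size.
    Activations, buffers and framework overhead are excluded."""
    student = TRAINABLE_ADAM_MIXED * n_params
    teacher = FROZEN_FP16 * n_params
    ema_target = FROZEN_FP16 * n_params
    return (student + teacher + ema_target) / GIB


n = 600_000_000  # illustrative parameter count (assumption, not from the report)
print(f"{lcm_distill_state_gib(n):.1f} GiB of model state")  # about 11.2 GiB
```

Under these assumptions, the model state leaves roughly two-thirds of a 32GB card for activations. In practice, the remaining headroom depends heavily on batch size and on techniques such as gradient checkpointing.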
