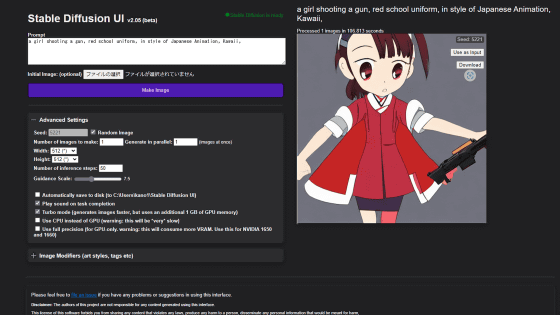

'Web Stable Diffusion', which runs the image generation AI Stable Diffusion directly in a browser without installing anything, is now available.

Running the image generation AI Stable Diffusion requires a sufficiently powerful GPU with enough VRAM, so you normally need a high-spec PC or workstation, or must rent computing resources on a GPU server. Machine Learning Compilation, which distributes machine learning lectures for engineers, has released 'Web Stable Diffusion', which runs Stable Diffusion in the browser without any server support.

WebSD | Home

A demo version of Web Stable Diffusion has been released, but at the time of writing it has only been confirmed to work on Macs with an M1 or M2 chip. This time, I ran the demo on an M1 iMac (8-core CPU, 8-core GPU, 256GB storage, 16GB RAM model).

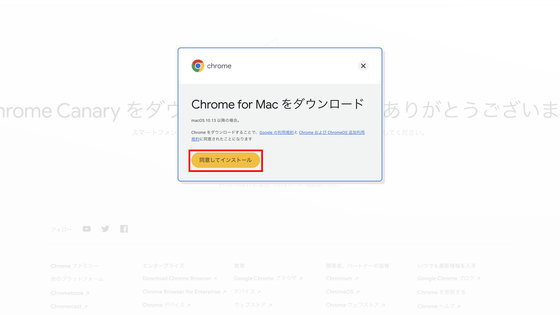

The demo version of Web Stable Diffusion is said to work with Chrome Canary, which was the experimental version of Google Chrome at the time of writing, so first go to the Chrome Canary distribution page and click 'Download Chrome Canary'.

Click 'Agree and Install' to download the installer in DMG format. The installer is 218.6MB.

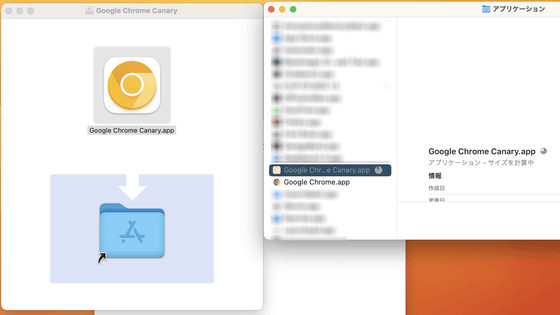

Launch the installer and install Chrome Canary.

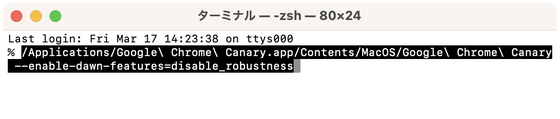

Next, start Chrome Canary by entering the following command in the terminal: `/Applications/Google\ Chrome\ Canary.app/Contents/MacOS/Google\ Chrome\ Canary --enable-dawn-features=disable_robustness`
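If you launch Canary this way often, a small helper can build the same command for you. The following is a minimal Python sketch, not part of the WebSD project; it assumes Chrome Canary is installed at the default /Applications path used in the command above, and the function name `build_launch_command` is hypothetical. Passing the arguments to `subprocess` as a list avoids having to escape the spaces in the path with backslashes.

```python
from __future__ import annotations

import os
import shlex
import subprocess

# Assumption: Chrome Canary was installed to the default location used in the article.
CANARY = "/Applications/Google Chrome Canary.app/Contents/MacOS/Google Chrome Canary"
# Flag taken from the article's launch command.
DAWN_FLAG = "--enable-dawn-features=disable_robustness"


def build_launch_command(binary: str = CANARY, extra_flags: list[str] | None = None) -> list[str]:
    """Hypothetical helper: return the argv list for starting Canary with the Dawn flag."""
    return [binary, DAWN_FLAG, *(extra_flags or [])]


if __name__ == "__main__":
    cmd = build_launch_command()
    # Print a shell-escaped version that can be pasted into Terminal.
    print(shlex.join(cmd))
    if os.path.exists(CANARY):
        # Start Canary in the background without waiting for it to exit.
        subprocess.Popen(cmd)
    else:
        print("Chrome Canary not found at", CANARY)
```

The printed command quotes the path instead of backslash-escaping each space, which the shell treats the same way.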

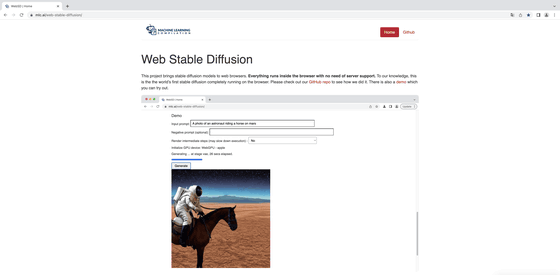

Visit the Web Stable Diffusion page.

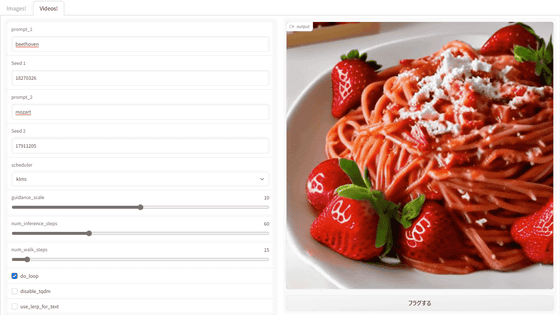

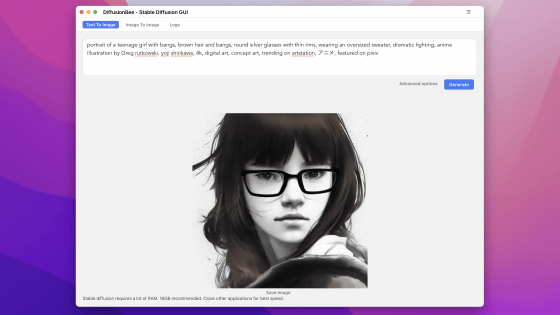

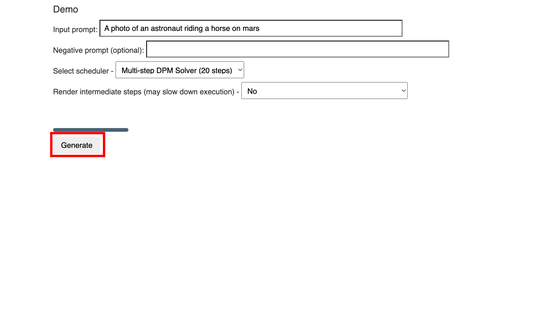

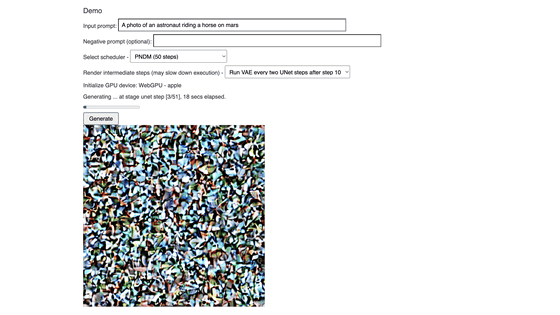

The Web Stable Diffusion demo is in the 'Demo' section at the bottom of the page. Enter a prompt in 'Input prompt' and, if you like, a negative prompt in 'Negative prompt (optional)'. This time, I kept the default prompt, 'A photo of an astronaut riding a horse on mars', and clicked 'Generate'.

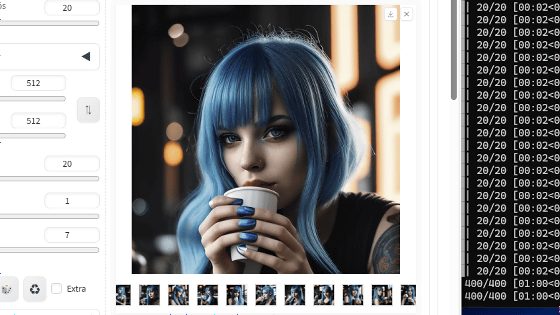

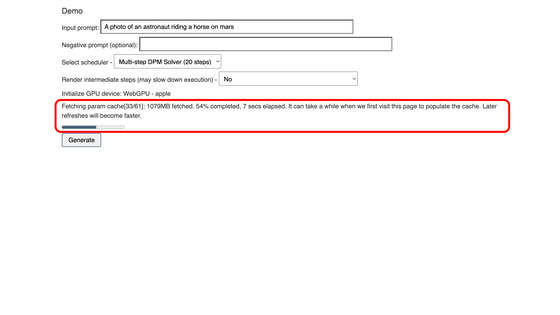

Image generation has started.

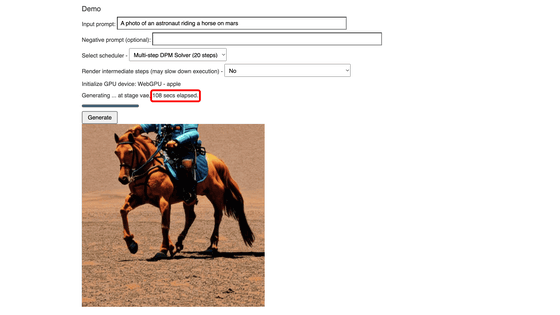

In about 2 minutes, the image below was generated.

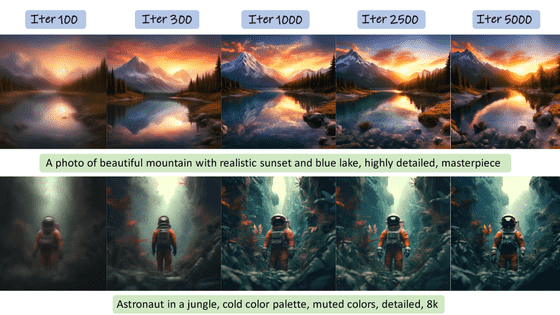

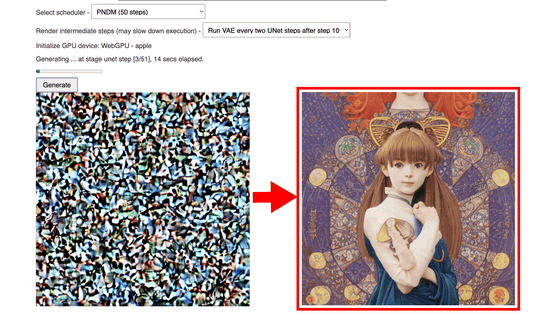

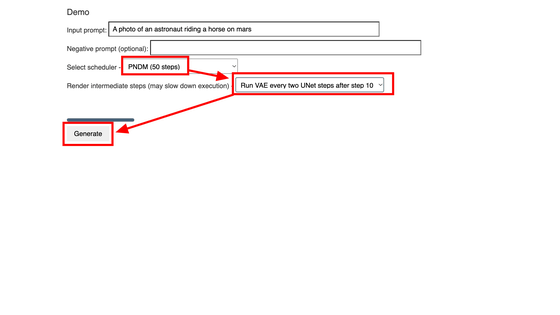

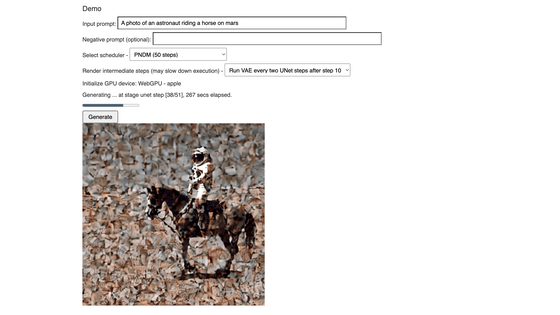

Next, I changed the number of scheduler steps with 'Select scheduler' and changed the 'Render intermediate steps' setting. The scheduler can run either 20 or 50 steps; 50 steps produces a more accurate image but takes longer. Rendering intermediate steps lets you watch the image emerge from the noise, but it also makes generation take longer.
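Why both options cost time can be illustrated with a toy model. The sketch below is not Stable Diffusion's scheduler or the WebSD code; it only mimics the shape of the process: start from random noise, refine it over a fixed number of steps, and optionally call a hypothetical `render` callback after every step. The work grows linearly with the number of steps, and rendering intermediates adds one extra call per step.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def toy_denoise(
    target: Vector,
    num_steps: int,
    render: Optional[Callable[[int, Vector], None]] = None,
    seed: int = 0,
) -> Vector:
    """Toy iterative refinement for illustration only, NOT a real diffusion scheduler."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise, like the first frame shown by the demo.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(num_steps):
        remaining = num_steps - step
        # Move 1/remaining of the way toward the target, so the final step reaches it.
        x = [xi + (ti - xi) / remaining for xi, ti in zip(x, target)]
        if render is not None:
            # Rendering an intermediate frame is extra work done on every step.
            render(step + 1, x)
    return x


if __name__ == "__main__":
    target = [0.2, -0.5, 0.9]
    for steps in (20, 50):
        frames = []
        result = toy_denoise(target, steps, render=lambda s, x: frames.append(s))
        print(f"{steps} steps -> {len(frames)} intermediate renders, result {result}")
```

In this toy, choosing 50 steps instead of 20 means 2.5 times as many refinement passes. In the real model each step involves a pass through the denoising network on the GPU, so the difference is far more noticeable.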

Once generation started, the image gradually emerged from the noise.

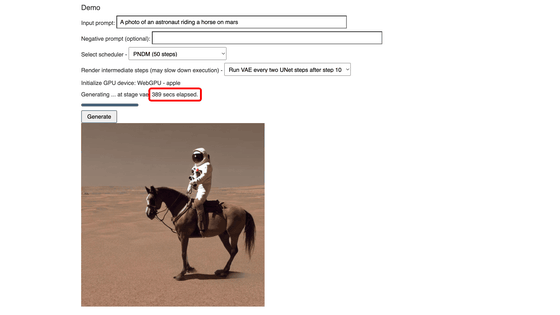

For the final generated image, the demo reported '389 secs elapsed', meaning that it took 389 seconds, or about 6 and a half minutes, to generate one image.
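Converting the demo's elapsed-seconds counter to minutes is simple arithmetic. The minimal Python sketch below (the function name `format_elapsed` is illustrative) also compares it with the roughly 2 minutes taken by the first run with default settings; treating that run as exactly 120 seconds is an approximation.

```python
def format_elapsed(seconds: int) -> str:
    """Convert a whole number of seconds into 'M min S s'."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes} min {secs} s"


elapsed = 389  # seconds reported by the demo for the second run
print(format_elapsed(elapsed))  # 6 min 29 s
print(round(elapsed / 60, 2))   # 6.48 (minutes, i.e. about 6.5)
# First run: "about 2 minutes", approximated here as 120 s.
print(round(elapsed / 120, 1))  # 3.2
```

So the second run took roughly three times as long as the first.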

The code for Web Stable Diffusion is published on GitHub.

GitHub - mlc-ai/web-stable-diffusion: Bringing stable diffusion models to web browsers. Everything runs inside the browser with no server support.

https://github.com/mlc-ai/web-stable-diffusion


in Software, Review, Web Application, Posted by log1i_yk