'MeshAnything' lets you build meshes for 3D models as if they were created by a human artist

There are AIs that can easily generate 3D models.

(PDF file) MeshAnything: Artist-Created Mesh Generation with Autoregressive Transformers

https://buaacyw.github.io/mesh-anything/MeshAnything.pdf

[2406.10163] MeshAnything: Artist-Created Mesh Generation with Autoregressive Transformers

https://arxiv.org/abs/2406.10163

MeshAnything

https://buaacyw.github.io/mesh-anything/

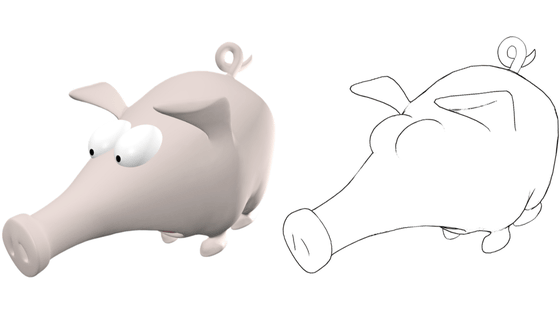

AI can now generate 3D models from text or by capturing and reconstructing real objects, and the quality is comparable to that of hand-made 3D models. However, problems remain. It has been pointed out, for example, that 3DCG struggles to draw contour lines because the distances between pixels are difficult to calculate mechanically. In addition, 3D assets must be converted into meshes before they can be used in various applications, and automatic mesh generation has not advanced as far as 3D model generation.

Why is it difficult to draw 'contour lines' in 3DCG?

According to a paper by Chen Yiwen, who researches AI content generation at Nanyang Technological University, and colleagues, conventional mesh extraction methods rely on dense faces and ignore geometric features, which leads to problems such as 'inefficiency,' 'the need for complex post-processing,' and 'degraded representation quality.'

To address these issues, MeshAnything was created as a model that generates artist-created meshes (meshes manually generated by humans) that fit a given shape by treating mesh extraction as a generative problem.
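Treating mesh extraction as a generative problem means producing the mesh as a sequence, one token at a time, with each step conditioned on the given shape and on everything generated so far. The minimal Python sketch below shows only that decoding loop, under stated assumptions: `toy_next_token_scores` is a hypothetical stand-in for a trained transformer, and the `face_N` tokens are invented placeholders, not MeshAnything's actual mesh representation.

```python
from typing import Callable, Dict, List, Sequence

EOS = "<eos>"  # hypothetical end-of-mesh token

# A scoring function takes the shape condition and the tokens generated so far.
ScoreFn = Callable[[Sequence[float], List[str]], Dict[str, float]]


def greedy_decode(shape_features: Sequence[float], score_fn: ScoreFn,
                  max_len: int = 16) -> List[str]:
    """Generate tokens one at a time, each conditioned on the shape and the prefix."""
    tokens: List[str] = []
    for _ in range(max_len):
        scores = score_fn(shape_features, tokens)
        best = max(scores, key=scores.get)  # greedy: take the highest-scoring token
        if best == EOS:
            break
        tokens.append(best)
    return tokens


def toy_next_token_scores(shape_features: Sequence[float],
                          prefix: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained transformer (not a real model).

    It reads a target face count from the first feature value and emits
    face_0, face_1, ... in order, then the end token.
    """
    target_faces = int(shape_features[0])
    if len(prefix) >= target_faces:
        return {EOS: 1.0, "face_x": 0.0}
    return {f"face_{len(prefix)}": 1.0, EOS: 0.0}


if __name__ == "__main__":
    print(greedy_decode([3.0, 0.5], toy_next_token_scores))
```

Greedy selection is used here only for simplicity; a trained model would score a learned vocabulary and could sample from that distribution instead of always taking the top token.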

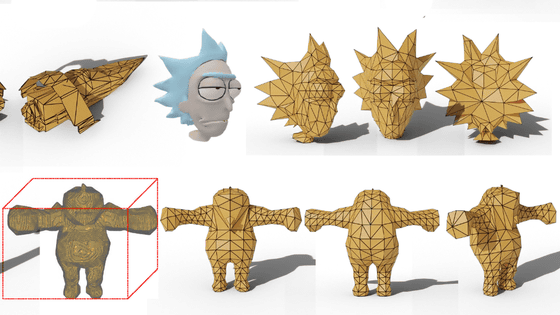

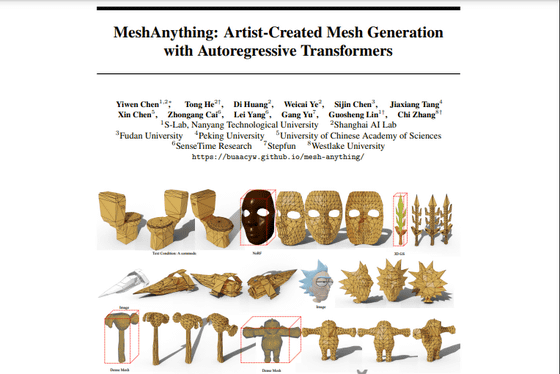

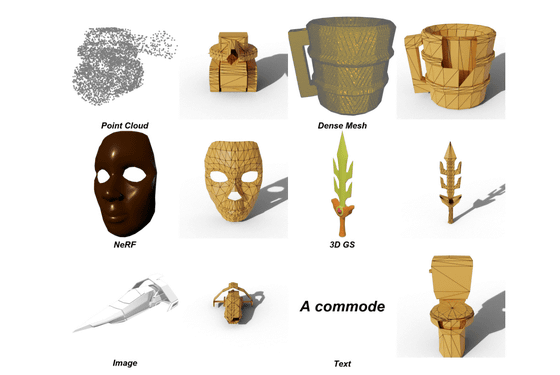

These images show examples of meshes created with MeshAnything. MeshAnything can produce meshes from a variety of inputs: point clouds, highly detailed machine-generated meshes, NeRF models based on deep learning, 3D Gaussian Splatting (3DGS) models, and 3D models generated from images and text. The resulting meshes are close to those created by hand and can be used directly for a wide range of purposes in the 3D industry.

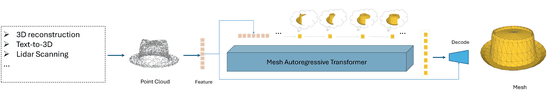

MeshAnything first samples a point cloud from the input 3D model and encodes it into features. These features are then fed to an autoregressive transformer, which generates a mesh conditioned on the shape.
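Sampling a point cloud from a mesh is a standard geometry step. The stdlib-only Python sketch below assumes the input is a triangle mesh given as vertex and face lists and samples points uniformly by surface area. It does not reproduce MeshAnything's own sampling code or its point-cloud encoder; `sample_surface` and `triangle_area` are hypothetical names chosen for illustration.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def triangle_area(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Half the length of the cross product of two edge vectors."""
    n = _cross(_sub(b, a), _sub(c, a))
    return 0.5 * math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)


def sample_surface(vertices: List[Vec3], faces: List[Tuple[int, int, int]],
                   n_points: int, seed: int = 0) -> List[Vec3]:
    """Sample points uniformly over the surface of a triangle mesh."""
    rng = random.Random(seed)
    tris = [(vertices[i], vertices[j], vertices[k]) for i, j, k in faces]
    areas = [triangle_area(*t) for t in tris]
    # Pick triangles with probability proportional to their area.
    chosen = rng.choices(tris, weights=areas, k=n_points)
    points: List[Vec3] = []
    for a, b, c in chosen:
        # Uniform point inside the triangle via the square-root barycentric trick.
        r1, r2 = rng.random(), rng.random()
        s = math.sqrt(r1)
        u, v, w = 1.0 - s, s * (1.0 - r2), s * r2
        points.append(tuple(u * a[d] + v * b[d] + w * c[d] for d in range(3)))
    return points


if __name__ == "__main__":
    # A unit square in the z = 0 plane, split into two triangles.
    square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    for p in sample_surface(square, [(0, 1, 2), (0, 2, 3)], 3):
        print(p)
```

Weighting by area keeps large faces from being undersampled, so the point density reflects the shape rather than how finely the input happened to be tessellated.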

Conventional techniques for generating meshes automatically generally either divide a 3D model into small cells to approximate its surface, or use a neural network to analyze the gradient of the surface and optimize the mesh. These approaches, however, tend to produce excessively dense, unnatural meshes, or meshes that merely follow the surface while ignoring the shape's geometric features. Because MeshAnything receives the shape as a condition, it does not need to learn to generate complex 3D shapes itself; it only has to learn how to build a mesh over a given shape. This significantly reduces the training burden and yields meshes closer to those made by artists.
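The first conventional approach, dividing the model into small cells, can be illustrated with a deliberately simplified stand-in for methods such as marching cubes: approximate a sphere on a cubic grid and keep the exposed face of every occupied cell. This Python sketch only illustrates why such meshes become dense; it is not a reimplementation of any specific extraction algorithm, and the function name and the sphere test shape are assumptions.

```python
from itertools import product


def voxel_surface_face_count(resolution: int, radius: float = 1.0) -> int:
    """Count exposed square faces when a sphere is approximated on a cubic grid."""
    step = 2.0 * radius / resolution

    def inside(i: int, j: int, k: int) -> bool:
        # Cells outside the grid count as empty.
        if not all(0 <= c < resolution for c in (i, j, k)):
            return False
        # Test the cell centre against the implicit sphere x^2 + y^2 + z^2 <= r^2.
        x, y, z = (-radius + (c + 0.5) * step for c in (i, j, k))
        return x * x + y * y + z * z <= radius * radius

    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    faces = 0
    for i, j, k in product(range(resolution), repeat=3):
        if inside(i, j, k):
            # An occupied cell contributes one face wherever its neighbour is empty.
            faces += sum(not inside(i + di, j + dj, k + dk) for di, dj, dk in neighbours)
    return faces


if __name__ == "__main__":
    for n in (8, 16, 32):
        quads = voxel_surface_face_count(n)
        print(f"resolution {n:>2}: {quads} quads ({2 * quads} triangles)")
```

Because the face count tracks surface area measured in cells, doubling the resolution roughly quadruples the number of faces whether or not the shape needs that much detail, which is the density problem described above.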

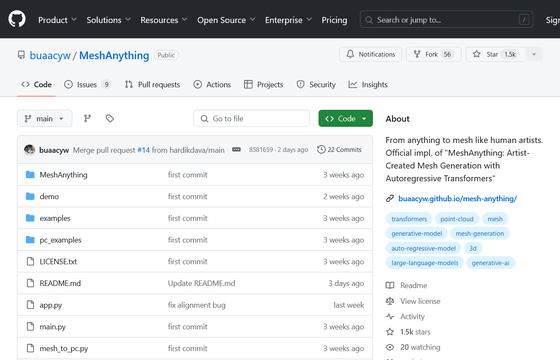

The MeshAnything project is described in a paper, and its code is available on GitHub.

GitHub - buaacyw/MeshAnything: From anything to mesh like human artists. Official impl. of 'MeshAnything: Artist-Created Mesh Generation with Autoregressive Transformers'

https://github.com/buaacyw/MeshAnything


in Software, Posted by log1e_dh