Stability AI announces 'Stable Virtual Camera,' a video generation AI that can generate immersive 3D videos with perspective just by inputting 2D images

Introducing Stable Virtual Camera: Multi-View Video Generation with 3D Camera Control — Stability AI

https://stability.ai/news/introducing-stable-virtual-camera-multi-view-video-generation-with-3d-camera-control

Stable Virtual Camera is a video generation AI that can convert 2D images into immersive 3D videos with realistic depth and perspective using a multi-view diffusion model, without the need for complex reconstruction or scene-specific optimization.

In 3DCG tools such as Blender, animators set the composition and movement of a shot by placing a virtual camera anywhere in 3D space. Stable Virtual Camera brings this virtual-camera concept to generative video AI. It combines the familiar controls of a conventional virtual camera with the power of a generative model, giving precise and intuitive control over the 3D video it outputs.
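For readers unfamiliar with 3DCG, the following minimal sketch shows what "placing a virtual camera" means numerically: a camera position and a point to look at are turned into a 4x4 camera-to-world pose matrix. It assumes NumPy and an OpenGL-style convention (the camera looks down its local -Z axis). The function name `look_at` is hypothetical, and the article does not say which pose convention Stable Virtual Camera itself uses.

```python
import numpy as np


def look_at(eye, target, up=(0.0, 1.0, 0.0)):
    """Return a 4x4 camera-to-world matrix for a camera at `eye` aimed at `target`.

    Assumed convention (OpenGL-style): +X is right, +Y is up, and the camera
    looks along its local -Z axis. `up` must not be parallel to the view direction.
    """
    eye = np.asarray(eye, dtype=float)
    target = np.asarray(target, dtype=float)
    up = np.asarray(up, dtype=float)

    forward = target - eye
    forward /= np.linalg.norm(forward)      # unit vector toward the target
    right = np.cross(forward, up)
    right /= np.linalg.norm(right)          # unit vector to the camera's right
    true_up = np.cross(right, forward)      # re-orthogonalized up vector

    c2w = np.eye(4)
    c2w[:3, 0] = right
    c2w[:3, 1] = true_up
    c2w[:3, 2] = -forward                   # camera looks along its -Z axis
    c2w[:3, 3] = eye                        # camera position in world space
    return c2w


# A camera 5 units in front of the origin, looking back at it.
pose = look_at(eye=(0.0, 0.0, 5.0), target=(0.0, 0.0, 0.0))
```

The resulting rotation block is orthonormal with determinant +1, so it can be inverted by a transpose when a world-to-camera matrix is needed.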

Unlike traditional 3D video models, which rely on large numbers of input images and complex preprocessing, Stable Virtual Camera can generate novel views of a scene from one or more input images at camera angles the user specifies. Its output is consistent and smooth, and it can render seamless video along dynamic camera paths.

The video below shows the kind of output Stable Virtual Camera produces from an input image.

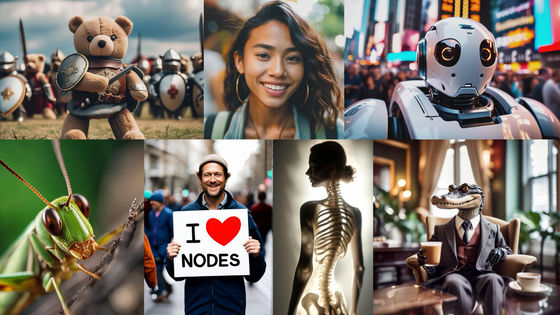

Introducing Stable Virtual Camera: This multi-view diffusion model transforms 2D images into immersive 3D videos with realistic depth and perspective—without complex reconstruction or scene-specific optimization. pic.twitter.com/pHPkYhaKH3

— Stability AI (@StabilityAI) March 18, 2025

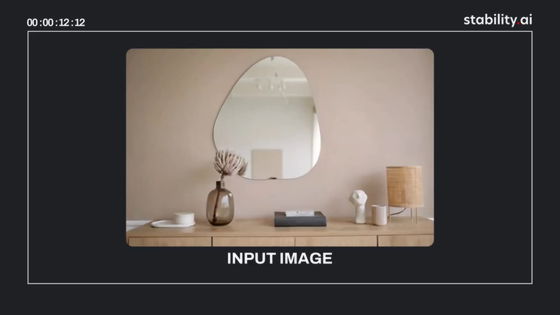

The input image is as follows:

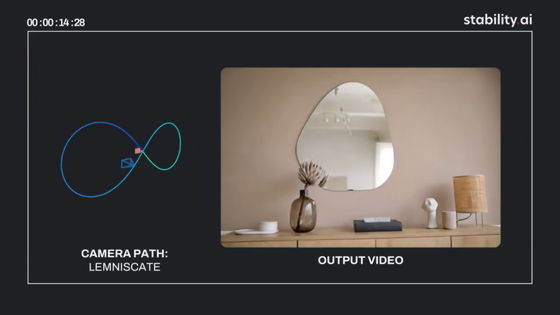

Specifying the path (trajectory) of the virtual camera, shown on the left, produces a 3D video that follows that path.
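A user-defined trajectory is often authored as a handful of keyframe positions that are then resampled into one camera position per output frame. The sketch below does this with NumPy, spacing frames evenly along the arc length of a piecewise-linear path so the camera moves at constant speed. The function name `interpolate_path` and the use of linear interpolation are illustrative assumptions; the article does not describe how Stable Virtual Camera's own tooling interpolates trajectories.

```python
import numpy as np


def interpolate_path(keyframes, num_frames):
    """Resample keyframe camera positions into `num_frames` evenly spaced positions.

    Frames are spaced by arc length along the piecewise-linear path through the
    keyframes, so the camera moves at constant speed no matter how unevenly the
    keyframes are placed. Consecutive keyframes are assumed to be distinct.
    """
    keys = np.asarray(keyframes, dtype=float)                    # shape (K, 3)
    seg_lengths = np.linalg.norm(np.diff(keys, axis=0), axis=1)  # length of each segment
    cum = np.concatenate([[0.0], np.cumsum(seg_lengths)])        # arc length at each keyframe
    samples = np.linspace(0.0, cum[-1], num_frames)              # evenly spaced arc lengths
    # Interpolate each coordinate independently as a function of arc length.
    return np.stack(
        [np.interp(samples, cum, keys[:, d]) for d in range(keys.shape[1])],
        axis=1,
    )


# Three keyframes resampled into four frames along an L-shaped path.
path = interpolate_path([(0, 0, 0), (1, 0, 0), (1, 2, 0)], num_frames=4)
```

Each resampled position could then be paired with an orientation (for example, by aiming the camera at the scene center) to form a full camera pose per frame.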

The four advanced features available with the Stable Virtual Camera are as follows:

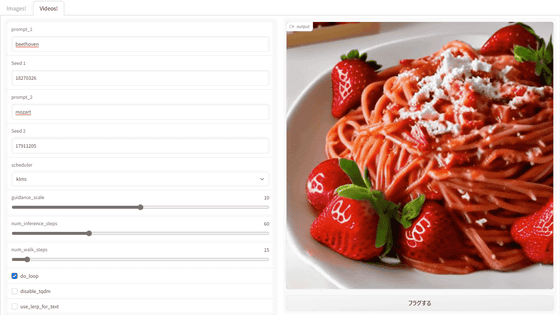

- Dynamic camera control

Supports user-defined camera trajectories as well as multiple preset dynamic camera paths: 360 degrees, lemniscate (a figure-eight, infinity-shaped path), spiral, dolly zoom in, dolly zoom out, zoom in, zoom out, forward, backward, pan up, pan down, pan left, pan right, and roll.

- Flexible input

Generates 3D videos from a single input image or from up to 32 input images.

- Multiple aspect ratios

Creates videos in square (1:1), portrait (9:16), landscape (16:9), and other custom aspect ratios without any additional training.

- Long video generation

Maintains 3D consistency in videos up to 1,000 frames long, enabling seamless loops and smooth transitions even when the camera revisits the same viewpoint.
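Several of the preset paths listed above have simple closed-form descriptions. The following sketch generates camera positions for a 360-degree orbit, a lemniscate (figure-eight), and a spiral, plus the focal-length schedule behind a dolly zoom. On-screen subject size is proportional to f / d (focal length over camera-to-subject distance), so a dolly zoom scales f in proportion to d. All function names and default parameters are hypothetical; these are textbook parametrizations, not the definitions used in Stable Virtual Camera's code. The looping paths use `endpoint=False` to omit a duplicate final frame so they can be played as seamless loops.

```python
import numpy as np


def orbit(num_frames, radius=2.0, height=0.0):
    """360-degree orbit in the XZ plane around the origin (loops seamlessly)."""
    t = np.linspace(0.0, 2 * np.pi, num_frames, endpoint=False)
    return np.stack([radius * np.sin(t), np.full_like(t, height), radius * np.cos(t)], axis=1)


def lemniscate(num_frames, a=1.0, distance=2.0):
    """Lemniscate of Bernoulli (figure-eight) traced in a plane at z = distance."""
    t = np.linspace(0.0, 2 * np.pi, num_frames, endpoint=False)
    denom = 1.0 + np.sin(t) ** 2
    x = a * np.cos(t) / denom
    y = a * np.sin(t) * np.cos(t) / denom
    return np.stack([x, y, np.full_like(t, distance)], axis=1)


def spiral(num_frames, radius=0.5, turns=2.0, z_start=3.0, z_end=1.5):
    """Circle around the viewing axis while moving from z_start to z_end."""
    t = np.linspace(0.0, 1.0, num_frames)
    angle = 2 * np.pi * turns * t
    z = z_start + (z_end - z_start) * t
    return np.stack([radius * np.cos(angle), radius * np.sin(angle), z], axis=1)


def dolly_zoom_focal(distances, f0, d0):
    """Focal lengths that keep the subject's on-screen size fixed (f / d constant)."""
    return f0 * np.asarray(distances, dtype=float) / d0


orbit_positions = orbit(4, radius=2.0)
lemniscate_positions = lemniscate(8)
spiral_positions = spiral(5)
focals = dolly_zoom_focal([2.0, 4.0], f0=50.0, d0=2.0)  # doubling distance doubles f
```

Pairing each position with a camera aimed at the scene center would turn these point sequences into full camera trajectories.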

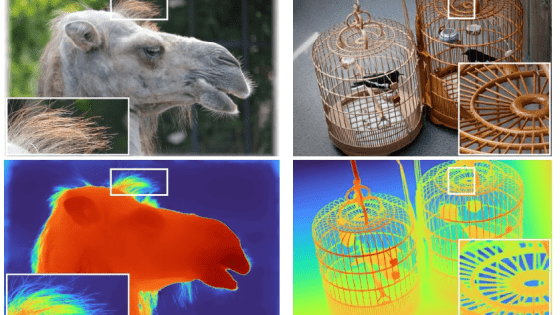

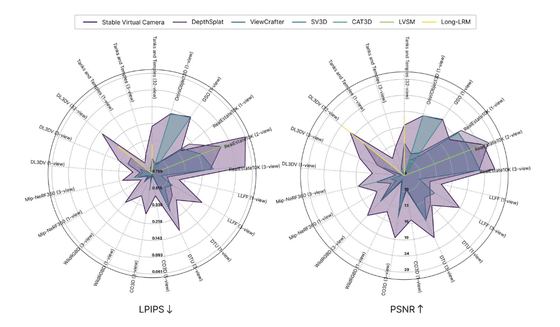

Stable Virtual Camera achieves state-of-the-art results on novel view synthesis (NVS) benchmarks, outperforming models such as ViewCrafter and CAT3D. The graph below compares scores on various benchmarks for perceptual similarity (LPIPS, where lower is better) and reconstruction accuracy (PSNR, where higher is better); the purple line shows Stable Virtual Camera. On most benchmarks, its LPIPS and PSNR scores are significantly better than those of competing AI models.
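PSNR is simple enough to compute by hand: it equals 10 * log10(MAX^2 / MSE) decibels, where MSE is the mean squared error between a generated view and the ground-truth image and MAX is the largest possible pixel value. A minimal NumPy sketch, assuming images scaled to [0, 1], is below; the function name `psnr` is illustrative. LPIPS, by contrast, compares features extracted by a pretrained neural network and is not reproduced here.

```python
import numpy as np


def psnr(reference, generated, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    Pixel values are assumed to lie in [0, max_value]. Identical images
    have zero error, which gives an infinite PSNR.
    """
    ref = np.asarray(reference, dtype=np.float64)
    gen = np.asarray(generated, dtype=np.float64)
    mse = np.mean((ref - gen) ** 2)   # mean squared error over all pixels and channels
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


# A uniform error of 0.1 gives MSE = 0.01, i.e. 10 * log10(1 / 0.01) = 20 dB.
ref = np.zeros((4, 4, 3))
gen = np.full((4, 4, 3), 0.1)
score = psnr(ref, gen)
```

Because PSNR only measures pixel-level error, it can penalize plausible but slightly shifted details, which is why benchmarks typically report it alongside a perceptual metric such as LPIPS.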

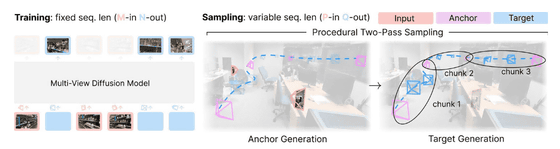

Stable Virtual Camera is trained as a multi-view diffusion model with a fixed sequence length, using a set number of input and target views (M-in, N-out). At sampling time it acts as a flexible generative renderer that accepts variable numbers of input and output views (P-in, Q-out). It achieves this through a procedural two-pass sampling process: it first generates anchor views, then renders the target views in chunks, which helps keep the results smooth and consistent.
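The two-pass idea can be illustrated with a small scheduling sketch. It assumes a hypothetical model that handles at most `window` views per forward pass. Pass 1 generates evenly spaced anchor frames along the target trajectory, conditioned on the inputs; pass 2 fills each gap between consecutive anchors in chunks, each conditioned on the two anchors that bracket it. The function name `plan_two_pass`, the even anchor spacing, and the choice of conditioning views are simplifying assumptions, not Stable Virtual Camera's actual sampling code.

```python
def plan_two_pass(num_inputs, num_targets, window, num_anchors):
    """Plan a hypothetical two-pass schedule for a fixed-window multi-view model.

    window: total views (conditioning + generated) one forward pass can handle.
    Returns (anchors, chunks), where anchors are target-frame indices generated
    in pass 1 and each chunk is a dict of conditioning anchors and target
    indices generated together in pass 2.
    """
    if window < 3 or num_anchors < 2 or num_targets < num_anchors:
        raise ValueError("need window >= 3, at least 2 anchors, and num_targets >= num_anchors")
    if num_inputs + num_anchors > window:
        raise ValueError("pass 1 must fit all inputs and anchors in one window")

    # Pass 1: evenly spaced anchors covering the first and last target frames.
    step = (num_targets - 1) / (num_anchors - 1)
    anchors = [round(i * step) for i in range(num_anchors)]

    # Pass 2: fill the gaps between consecutive anchors in window-sized chunks.
    chunk_size = window - 2  # two slots hold the bracketing anchors
    chunks = []
    for left, right in zip(anchors, anchors[1:]):
        gap = list(range(left + 1, right))
        for start in range(0, len(gap), chunk_size):
            chunks.append({"cond": [left, right], "targets": gap[start:start + chunk_size]})
    return anchors, chunks


anchors, chunks = plan_two_pass(num_inputs=1, num_targets=21, window=8, num_anchors=5)
```

With 1 input image, 21 target frames, a window of 8, and 5 anchors, pass 1 produces anchors at frames 0, 5, 10, 15, and 20, and pass 2 renders four chunks of four frames each. Sharing anchors between neighboring chunks is intended to keep those chunks consistent with each other.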

Because Stable Virtual Camera is a research preview at the time of writing, it may produce low-quality video in some scenarios. Input images featuring humans, animals, or dynamic textures such as water often yield degraded output. Highly ambiguous scenes, complex camera paths that intersect objects or surfaces, and irregularly shaped objects can also cause flickering artifacts, especially when the target viewpoint differs significantly from the input images.

Stable Virtual Camera is available for research use under a non-commercial license. The paper is publicly available, the model weights can be downloaded from Hugging Face, and the code is available on GitHub.

LICENSE · stabilityai/stable-virtual-camera at main

https://huggingface.co/stabilityai/stable-virtual-camera/blob/main/LICENSE

GitHub - Stability-AI/stable-virtual-camera: Stable Virtual Camera: Generative View Synthesis with Diffusion Models

https://github.com/Stability-AI/stable-virtual-camera
