Google's "Transformer" delivers higher-quality translation than RNNs and CNNs

Among neural networks, the recurrent neural network (RNN) is regarded as the main approach to language understanding tasks such as language modeling, machine translation, and question answering. Google, meanwhile, is developing "Transformer", a new neural network architecture that outperforms RNNs on language understanding tasks.

Research Blog: Transformer: A Novel Neural Network Architecture for Language Understanding

https://research.googleblog.com/2017/08/transformer-novel-neural-network.html

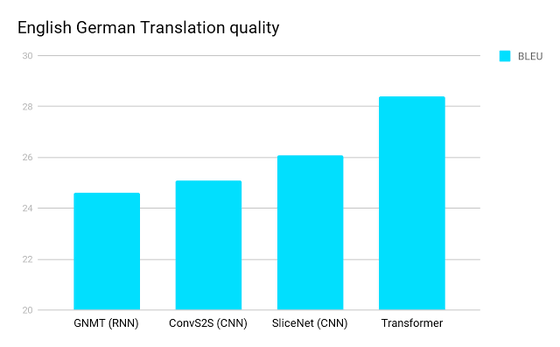

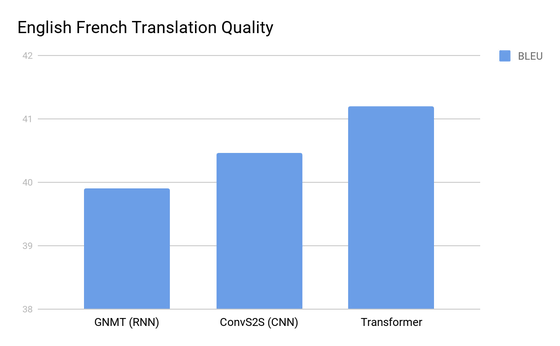

"Transformer" of the neural network architecture excelled in the language understanding task by Google benchmarks two kinds of translations from English to German and from English to French, both of which made use of RNN and convolution neural network (CNN) It is superior to the architecture. Because Transformer has overwhelmingly less computational complexity for learning than other neural networks, it is suitable for modern machine learning hardware and it will be able to provide higher quality translations.

"GNMT (Google Neural Machine Translation)" of a translation model using a neural network "ConvS 2 S"SliceNet"Transformer translated from English to German and scored the quality of translation is the graph below. A higher score indicates that the quality of translation is higher, and Transformer has succeeded in the highest quality translation.

Below is a similar benchmark when translating from English to French. Again Transformer showed excellent translation.

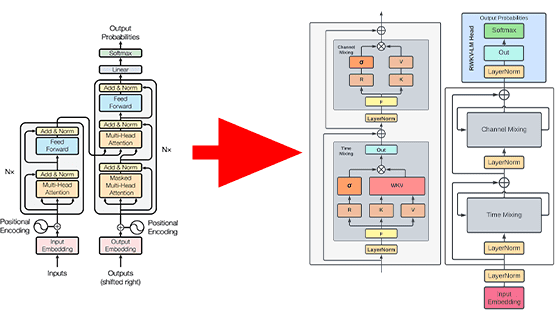

Neural networks typically process language by generating fixed-length or variable-length vector space representations. Specifically, they start with representations of individual words or pieces of words, aggregate information from the surrounding words, and determine the meaning of a given piece of language in context. An RNN, which has become the standard for translation in recent years, processes language sequentially, from left to right or from right to left. Because it reads one word at a time, an RNN has to perform multiple steps before it can relate words that are far apart. A CNN is less sequential than an RNN, but in CNN architectures such as ByteNet and ConvS2S, the number of steps needed to connect the meanings of distant words still grows with the distance between them.
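
The difference in sequential processing can be made concrete with a rough sketch. The functions below are simplified models written for illustration, not code from Google's post: they count how many sequential steps or layers are needed before information from two words `distance` positions apart can be combined. The function names and the default `kernel_width` are assumptions, and the two convolutional variants only loosely correspond to ConvS2S-style stacked convolutions and ByteNet-style dilated convolutions.

```python
import math


def rnn_steps(distance: int) -> int:
    """A unidirectional RNN reads one token per step, so information from a
    word `distance` positions back has passed through `distance` updates."""
    return distance


def stacked_conv_layers(distance: int, kernel_width: int = 3) -> int:
    """Plain stacked convolutions: each layer widens the receptive field by
    kernel_width - 1 positions, so the layer count grows linearly."""
    return math.ceil(distance / (kernel_width - 1))


def dilated_conv_layers(distance: int, kernel_width: int = 3) -> int:
    """Dilated convolutions whose dilation doubles at every layer: the
    receptive field grows exponentially, so the layer count grows roughly
    logarithmically with distance."""
    layers, span, dilation = 0, 0, 1
    while span < distance:
        span += (kernel_width - 1) * dilation
        dilation *= 2
        layers += 1
    return layers


def self_attention_steps(distance: int) -> int:
    """Self-attention compares every pair of positions directly, so a single
    step connects any two words regardless of how far apart they are."""
    return 1


if __name__ == "__main__":
    for d in (4, 32, 256):
        print(d, rnn_steps(d), stacked_conv_layers(d),
              dilated_conv_layers(d), self_attention_steps(d))
```

Under these assumptions the RNN and both convolutional counts keep growing as the two words move apart, while the self-attention count stays constant.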

In contrast, Transformer has the advantage of performing only a small, constant number of steps. In each step it applies a self-attention mechanism that directly models the relationships between all the words in a sentence, regardless of their positions. More specifically, each word is compared with every word in the sentence, the result of each comparison is an attention score for every word in the sentence, and these scores determine how much each of the other words contributes to the meaning assigned to the word.
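
The comparison step can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product self-attention, not Google's implementation: it assumes each word is already a small numeric vector, omits the learned query, key, and value projections and the multiple attention heads that the real architecture uses, and the toy vectors in the usage example are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Compare every word vector with every word vector in the sentence.

    Returns (outputs, weights): weights[i][j] is the attention that word i
    pays to word j, and outputs[i] is the weighted average of all word
    vectors using those weights.
    """
    dim = len(vectors[0])
    scale = math.sqrt(dim)
    outputs, all_weights = [], []
    for query in vectors:
        # One attention score per word in the sentence, including itself.
        scores = [dot(query, key) / scale for key in vectors]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Hypothetical 2-dimensional vectors for a three-word sentence.
    toy_vectors = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9]]
    _, weights = self_attention(toy_vectors)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of `weights` is the set of attention scores for one word. All rows are computed from the same inputs and do not depend on one another, which is why the comparison does not have to proceed word by word.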

The following GIF image is a simple illustration of the flow of machine translation by Transformer.

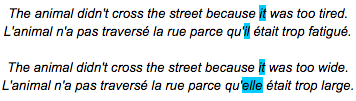

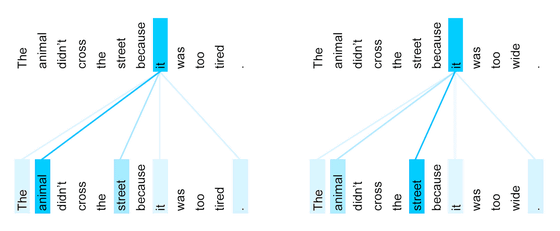

Beyond improved computational performance and translation accuracy, Transformer has another advantage: it is possible to visualize which other parts of a sentence the network attends to when processing or translating a given word, which gives insight into how information travels through the network. The following example sentences illustrate this. Each original English sentence is listed with its French translation.

In the first English sentence, "it" refers to "animal", and in the second English sentence, "it" refers to "street". When these sentences are translated into French, the translation of "it" depends on the gender of the noun it refers to. In French, "animal" and "street" have different genders, so "it" has to be translated differently in each sentence. Google Translate cannot accurately reflect this difference in the French translation, but Transformer translates "it" correctly as "il" and "elle" respectively.

The reason Transformer can translate correctly can be understood through the feature described above: by visualizing which other parts of the sentence the network attends to, one can see how the network decided what "it" refers to. The lines extending from "it" lead to the candidates for what "it" refers to, and the candidate with the highest score is marked in the darkest color. The network recognizes that "it" refers to a different word in each sentence, which is why it could translate both sentences into French correctly.

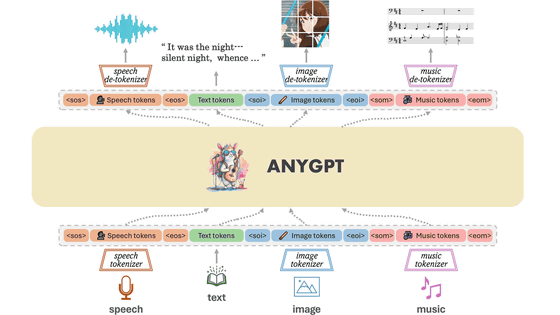

Google says it is excited about the potential of Transformer and has begun applying it not only to natural language but also to problems with very different inputs and outputs, such as images and video. Development of Transformer has also been accelerated considerably by Google's Tensor2Tensor library, and it appears that an environment is already in place in which a Transformer network can be trained just by downloading the library and invoking a few commands.

in Software, Posted by logu_ii