Google Brain team publishes "Looking Back on 2017" Part 1, reviewing the results of its 2017 machine learning research

The Google Brain team, which conducts AI research at Google, has published "The Google Brain Team - Looking Back on 2017 (Part 1 of 2)", a look back on its activities in 2017. Part 1 reports results on themes such as the open source software and hardware updates for machine learning that the team delivered in 2017.

Research Blog: The Google Brain Team - Looking Back on 2017 (Part 1 of 2)

https://research.googleblog.com/2018/01/the-google-brain-team-looking-back-on.html

Jeff Dean, a senior fellow on the Google Brain team, says that the team's emphasis is "research that improves understanding and ability to solve new problems in machine learning". To this end, the Google Brain team worked on the following activities in 2017.

◆ AutoML

According to Google Brain, building a truly intelligent system with machine learning requires technology that automatically solves new problems as they arise in machine learning. To that end, the team is developing an approach called "AutoML", which designs new neural network structures using both reinforcement learning and evolutionary algorithms.

AutoML has been used for image classification and object detection.
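To make the idea of an evolutionary algorithm searching over network structures more concrete, here is a minimal sketch. It is not Google's AutoML: the search space (`LAYER_CHOICES`, `WIDTH_CHOICES`), the scoring function `toy_fitness`, and all parameter values are hypothetical stand-ins chosen for illustration. A real system would train each candidate network and use its validation accuracy as the score.

```python
import random

# Hypothetical search space: an "architecture" is just (num_layers, width).
LAYER_CHOICES = [2, 4, 6, 8]
WIDTH_CHOICES = [32, 64, 128, 256]


def toy_fitness(arch):
    """Made-up stand-in for validation accuracy (higher is better)."""
    layers, width = arch
    # Arbitrary score that peaks at 6 layers and width 128.
    return -abs(layers - 6) - abs(width - 128) / 32


def mutate(arch, rng):
    """Re-sample one of the two architecture fields at random."""
    layers, width = arch
    if rng.random() < 0.5:
        layers = rng.choice(LAYER_CHOICES)
    else:
        width = rng.choice(WIDTH_CHOICES)
    return (layers, width)


def evolve(generations=50, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [
        (rng.choice(LAYER_CHOICES), rng.choice(WIDTH_CHOICES))
        for _ in range(population_size)
    ]
    for _ in range(generations):
        # Tournament selection: sample three candidates, mutate the best one.
        parent = max(rng.sample(population, 3), key=toy_fitness)
        child = mutate(parent, rng)
        # Keep the child only if it is at least as good as the weakest member,
        # so the best score in the population never gets worse.
        weakest = min(population, key=toy_fitness)
        if toy_fitness(child) >= toy_fitness(weakest):
            population.remove(weakest)
            population.append(child)
    return max(population, key=toy_fitness)


best = evolve()
print("best architecture:", best, "score:", toy_fitness(best))
```

The expensive part in practice is the fitness evaluation, since every candidate has to be trained before it can be scored; the selection and mutation loop itself stays this simple.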

◆ Understanding and generating speech

The ability to understand human language and generate words to hold a conversation is an important task in computing. Using speech recognition technology based on an end-to-end approach, Google reduced the relative word error rate of its speech recognition system by 16%.
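A "relative" reduction is measured against the previous error rate, not in absolute percentage points. The sketch below computes word error rate with the standard edit-distance definition and then shows what a 16% relative reduction means. The baseline rate of 10% is a hypothetical number for illustration only, since the article does not give the absolute rates, and the function names are likewise illustrative.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words.

    Assumes a non-empty reference transcript.
    """
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between the processed prefix of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline, improved):
    """Fraction by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline


# Hypothetical baseline; only the 16% relative figure comes from the article.
baseline_wer = 0.10
improved_wer = baseline_wer * (1 - 0.16)

print(f"improved WER:       {improved_wer:.3f}")
print(f"relative reduction: {relative_reduction(baseline_wer, improved_wer):.0%}")
print(f"absolute reduction: {baseline_wer - improved_wer:.3f}")
```

Under this assumed baseline, a 16% relative reduction corresponds to only 1.6 percentage points of absolute improvement, which is why the two figures should not be confused.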

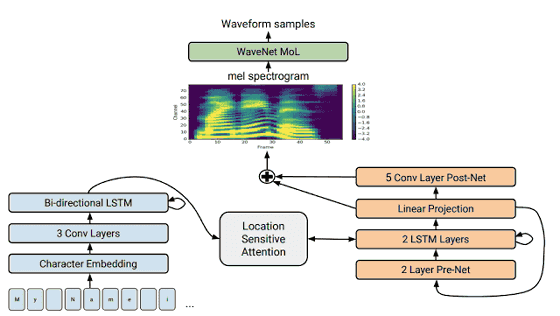

In collaboration with Google's Machine Perception team, the team also developed a text-to-speech approach that dramatically improves the quality of the generated speech. While the mean opinion score (MOS) of the existing speech generation system was 4.34, the newly developed model achieved a MOS of 4.53. Since the MOS of a professional narrator is 4.58, the new model comes one step closer to the way a professional human speaker sounds.

◆ New machine learning algorithms and approaches

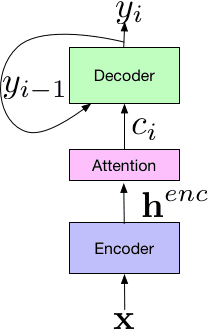

Google Brain continues to work on developing new algorithms and approaches in machine learning, including "Routing between Capsules", a new multimodal model, attention-based mechanisms, and several new reinforcement learning methods.

◆ Privacy and security

Google Brain is working to apply machine learning approaches to enhance privacy and security. It has published a paper on a method of applying machine learning techniques in a way that provides differential privacy guarantees.

◆ A deep understanding of machine learning

Google Brain considers it important to deepen the understanding of how machine learning works, and is actively conducting fundamental research as well. It has published a finding that the theoretical frameworks of current machine learning cannot explain the impressive results of deep learning, as well as a paper that analyzes random matrices to understand how training proceeds in deep architectures.

In addition, Google Brain participated in the launch of the machine learning journal "Distill". More research results will be announced in 2018.

◆ Open dataset for machine learning

Open datasets such as MNIST, CIFAR-10, ImageNet, SVHN, and WMT have greatly advanced machine learning.

Google Brain and Google Research have been open-sourcing datasets from the past year's research, as follows.

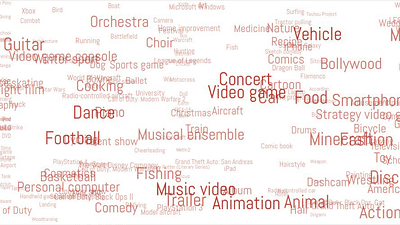

·YouTube-8M: More than 7 million YouTube videos annotated with 4,716 classes

·YouTube-Bounding Boxes: 5 million bounding boxes from 210,000 YouTube videos

·Speech Commands Dataset: Short voice commands spoken by thousands of speakers

·AudioSet: 2 million YouTube videos labeled with 527 sound events

·Atomic Visual Actions (AVA): 210,000 action labels across 55,000 video clips

·Open Images: 9 million Creative Commons licensed images labeled with 6,000 classes

·Open Images with Bounding Boxes: 1.2 million bounding boxes for 600 classes

◆ TensorFlow

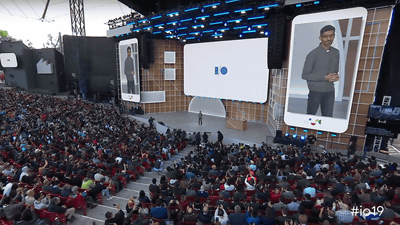

TensorFlow, the second-generation machine learning framework, was open-sourced in 2015. TensorFlow 1.0 was released in February 2017, and TensorFlow v1.4 followed in November. Also in February 2017, the first "TensorFlow Developer Summit" was held: 450 developers gathered at Google headquarters and 6,500 people watched the conference via live stream. Google Brain will hold another TensorFlow Developer Summit on March 30, 2018.

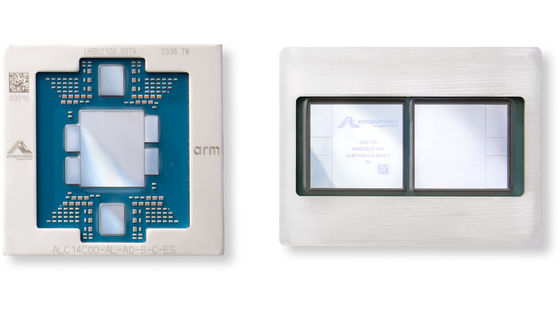

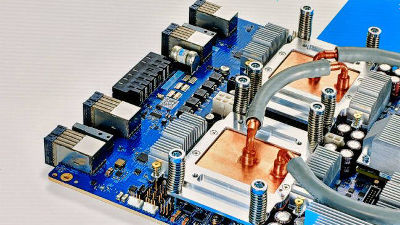

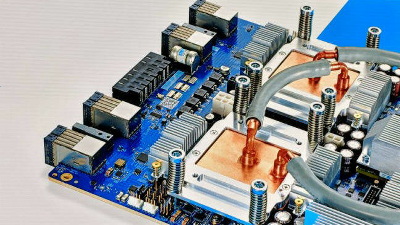

◆ TPU

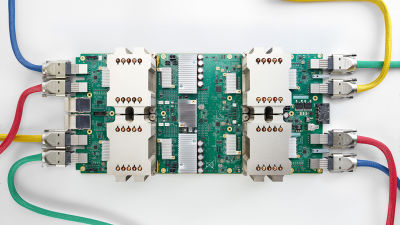

Five years ago, recognizing the need to dramatically change hardware architecture for deep learning, Google Brain began developing the Tensor Processing Unit (TPU), a machine equipped with a dedicated ASIC chip, and completed the first-generation TPU.

Then, in 2017, it announced the second-generation TPU. The team achieved a machine with performance per watt 30 times higher than GPUs and CPUs.

Google's second-generation machine learning machine "TPU": 180 TFLOPS per board, reaching 11.5 PFLOPS with 64 boards - GIGAZINE
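The two headline figures above are consistent with each other, as a quick calculation shows. This sketch reads "64" as the number of boards in one cluster, which is an assumption about the linked article's wording; the variable names are illustrative.

```python
TFLOPS_PER_BOARD = 180   # per-board figure quoted above
BOARDS_PER_CLUSTER = 64  # assumed meaning of "64" in the headline

cluster_tflops = TFLOPS_PER_BOARD * BOARDS_PER_CLUSTER
cluster_pflops = cluster_tflops / 1000  # 1 PFLOPS = 1,000 TFLOPS

print(f"{cluster_tflops} TFLOPS = {cluster_pflops:.2f} PFLOPS")
```

The product is 11,520 TFLOPS, or 11.52 PFLOPS, which matches the quoted 11.5 PFLOPS after rounding.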

It has also been announced (PDF file) that training the ResNet-50 ImageNet model, which took days on a conventional workstation, was completed in just 22 minutes using second-generation TPUs. Google also has a program to lend TPU clusters to researchers, and in 2018 many researchers and engineers are expected to use it to shorten the machine learning process and speed up their research.

Part 2 of "The Google Brain Team - Looking Back on 2017" is expected to cover the team's 2017 activities on applying machine learning in fields such as healthcare, robotics, science, and creativity.
