Meta discusses the current state and outlook for open hardware for AI


Meta's open AI hardware vision - Engineering at Meta

https://engineering.fb.com/2024/10/15/data-infrastructure/metas-open-ai-hardware-vision/

Demand for AI hardware is growing every year. Meta scaled up its AI training clusters 16-fold in 2023 and expects that growth to continue. Beyond adding computing power, the company also sees a need to increase network capacity by more than 10 times.

To keep pace with this expansion, Meta is actively investing in open hardware. Stating that 'building on the principles of openness is the most efficient and effective way' and that 'investing in open hardware will maximize the potential of AI and drive continuous innovation,' Meta announced three innovations: a 'cutting-edge open rack design,' a 'new AI platform,' and 'advanced network fabric and components.'

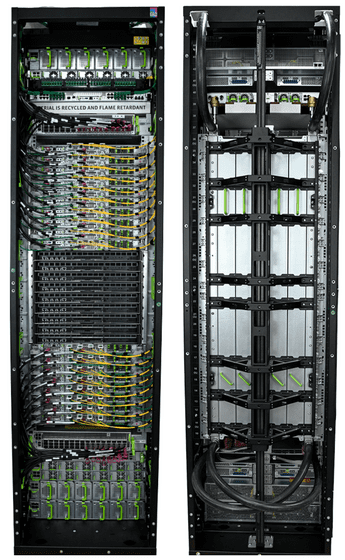

The first is 'Catalina,' Meta's high-performance rack designed for AI workloads. Catalina supports the NVIDIA GB200 Grace Blackwell superchip, the latest as of October 2024. With a power capacity of up to 140kW and features such as liquid cooling, it is said to 'reliably meet the ever-growing demands of AI infrastructure.' The design also emphasizes modularity, allowing other users to customize the rack for specific AI workloads.

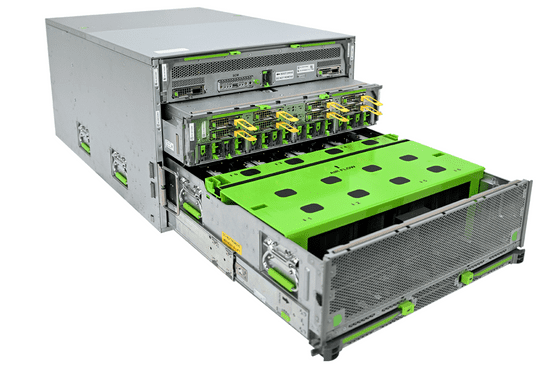

The second is an update to 'Grand Teton,' Meta's next-generation AI platform announced in 2022. Grand Teton is a single monolithic system that integrates power, control, compute, fabric interfaces, and more, which makes it easy to deploy, lets large-scale AI inference workloads scale quickly, and improves reliability. The update adds support for the new AMD Instinct MI300X and increases computing power, memory size, and network bandwidth, among other enhancements.

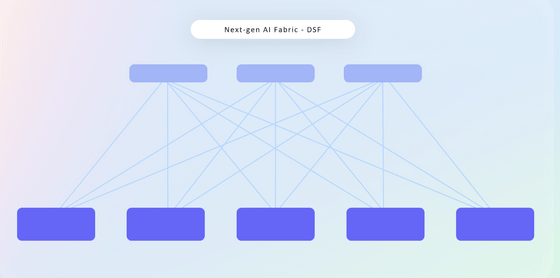

The third is an open, vendor-independent network backend. To provide scalable, high-performance networking for data-intensive applications such as AI and machine learning, Meta has built a new distributed network architecture called Disaggregated Scheduled Fabric (DSF). It has also developed a new 51T fabric switch based on Broadcom and Cisco ASICs, and a new NIC module called FBNIC.

Meta has also been promoting open innovation in collaboration with Microsoft, and as of October 2024 the two companies are working on a new disaggregated power rack called 'Mount Diablo.' Mount Diablo allows more AI accelerators to be installed per rack and is described as a 'significant advancement in AI infrastructure.'

Meta said that while 'it goes without saying that open software frameworks are important to realizing the full potential of AI,' 'open hardware frameworks are also important to provide the high-performance, cost-effective, and adaptable infrastructure needed to advance AI,' and called for participation in the Open Compute Project community.

in Hardware, Posted by log1d_ts