NVIDIA releases 'NVIDIA Dynamo,' an acceleration library for running reasoning AI at low cost and high efficiency, claiming it can speed up DeepSeek-R1 by 30 times

Semiconductor giant NVIDIA has released 'NVIDIA Dynamo,' a library for accelerating reasoning AI such as

Scale and Serve Generative AI | NVIDIA Dynamo

https://www.nvidia.com/en-us/ai/dynamo/

NVIDIA Dynamo Open-Source Library Accelerates and Scales AI Reasoning Models | NVIDIA Newsroom

https://nvidianews.nvidia.com/news/nvidia-dynamo-open-source-library-accelerates-and-scales-ai-reasoning-models?linkId=100000349576608

Reasoning AI models generate tens of thousands of tokens to 'think' through each prompt. Raising inference performance while steadily lowering inference cost therefore accelerates growth and expands revenue opportunities for service providers.

NVIDIA Dynamo is the successor to the NVIDIA Triton Inference Server. NVIDIA describes it as 'new AI inference serving software designed to maximize token revenue generation for AI factories that deploy reasoning AI models.'

NVIDIA Dynamo coordinates and accelerates inference communication across thousands of GPUs. It uses disaggregated serving to place the processing (prefill) and generation (decode) phases of large language models (LLMs) on different GPUs, so that each phase can be optimized independently for its own needs and GPU resources are used as fully as possible.
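The idea of splitting the two phases can be illustrated with a toy sketch. This is not Dynamo's API: the `Worker` class, the `prefill`, `decode` and `serve` functions, and the placeholder tokens are all hypothetical names invented for illustration. The sketch assumes the only thing passed between phases is a cache object built during prompt processing.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical GPU worker; role is either "prefill" or "decode"."""
    name: str
    role: str
    handled: list = field(default_factory=list)


def prefill(worker, prompt_tokens):
    """Process the whole prompt once and return a stand-in for the KV cache."""
    worker.handled.append("prefill")
    return {"kv": list(prompt_tokens)}  # toy cache: just the tokens seen so far


def decode(worker, cache, max_new_tokens):
    """Generate tokens one at a time, extending the cache handed over by prefill."""
    worker.handled.append("decode")
    output = []
    for i in range(max_new_tokens):
        token = f"tok{i}"  # placeholder for a real model step
        cache["kv"].append(token)
        output.append(token)
    return output


def serve(prompt_tokens, prefill_pool, decode_pool, max_new_tokens=3):
    """Send each phase to a worker from a different pool (first worker, for simplicity)."""
    cache = prefill(prefill_pool[0], prompt_tokens)
    return decode(decode_pool[0], cache, max_new_tokens)
```

Because the pools are separate, the number of prefill workers and decode workers could be tuned independently, which is the property the article attributes to this design. A real system would also have to transfer the cache between GPUs, which this sketch glosses over.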

With the same number of GPUs, NVIDIA Dynamo can double the performance and revenue of AI factories serving Llama models on the NVIDIA Hopper platform. When running the DeepSeek-R1 model on a large cluster of GB200 NVL72 racks, NVIDIA Dynamo's intelligent inference optimizations can increase the number of tokens generated by more than 30x per GPU.

To achieve these gains, NVIDIA Dynamo incorporates features that raise throughput and reduce cost. It can dynamically add, remove, and reallocate GPUs in response to changing request volumes and types. It can pinpoint the specific GPUs in a large cluster that can answer a query with the least computation and route the query to them. It can also lower inference costs by offloading inference data to more affordable memory and storage devices and retrieving it quickly when needed.
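The offloading feature can be pictured as a two-tier store. The following is a minimal sketch under stated assumptions, not Dynamo's implementation: `TieredKVStore` is a hypothetical class, the "GPU" tier is just a small least-recently-used dictionary, and the cheaper tier stands in for host memory or storage.

```python
from collections import OrderedDict


class TieredKVStore:
    """Hypothetical two-tier cache: a small fast tier plus a cheaper offload tier."""

    def __init__(self, gpu_capacity):
        self.gpu_capacity = gpu_capacity
        self.gpu = OrderedDict()  # fast, scarce tier, kept in LRU order
        self.offload = {}         # cheaper tier, e.g. host memory or disk

    def put(self, key, value):
        """Insert into the fast tier, spilling the least recently used entries."""
        self.gpu[key] = value
        self.gpu.move_to_end(key)
        while len(self.gpu) > self.gpu_capacity:
            old_key, old_value = self.gpu.popitem(last=False)
            self.offload[old_key] = old_value

    def get(self, key):
        """Return the entry, promoting it back to the fast tier if it was offloaded."""
        if key in self.gpu:
            self.gpu.move_to_end(key)
            return self.gpu[key]
        if key in self.offload:
            value = self.offload.pop(key)
            self.put(key, value)
            return value
        return None
```

The design choice here is that eviction moves data rather than discarding it, so a later request can reuse it without recomputation, at the price of a slower fetch.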

To improve inference performance, NVIDIA Dynamo tracks the 'KV cache,' the data that an inference system keeps in memory from previously served requests, across potentially thousands of GPUs. It then routes each new inference request to the GPU whose KV cache is the best match, avoiding costly recomputation and freeing other GPUs to respond to new incoming requests.
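One simple way to picture 'best matching KV cache' is longest shared token prefix. The sketch below assumes that interpretation; the function names and the dictionary mapping worker names to cached token sequences are hypothetical, and a production router would presumably also weigh current load on each GPU.

```python
def shared_prefix_len(a, b):
    """Count how many leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def route(request_tokens, cached_prefixes):
    """Pick the worker whose cached sequences overlap most with the request.

    cached_prefixes maps a worker name to a list of token sequences that the
    worker is assumed to hold in its KV cache. Ties go to the first worker seen.
    """
    best_worker, best_overlap = None, -1
    for worker, prefixes in cached_prefixes.items():
        overlap = max(
            (shared_prefix_len(request_tokens, p) for p in prefixes),
            default=0,  # a worker with an empty cache has no overlap
        )
        if overlap > best_overlap:
            best_worker, best_overlap = worker, overlap
    return best_worker, best_overlap
```

For example, a request whose first three tokens are already cached on one worker would be sent there, so only the remaining tokens need fresh prefill computation.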

'To process hundreds of millions of requests per month, Perplexity AI uses NVIDIA GPUs and inference software to deliver the performance, reliability and scalability that businesses and users demand,' said Denis Yarats, chief technology officer at Perplexity AI. 'By leveraging NVIDIA Dynamo with its enhanced distributed serving capabilities, we hope to further improve the efficiency of our inference services and meet the computing demands of new AI reasoning models.'

The NVIDIA Dynamo inference platform also supports disaggregated serving, which assigns the different computational phases of an LLM, including building an understanding of the user query and then generating the best response, to different GPUs. This approach is ideal for reasoning models such as the new

Together AI, an AI acceleration cloud, plans to integrate its proprietary Together Inference Engine with NVIDIA Dynamo to scale inference workloads seamlessly across GPU nodes. The integration would also let Together AI dynamically resolve traffic bottlenecks at different stages of the model pipeline.

'To cost-effectively scale reasoning models, new advanced inference techniques such as disaggregated serving and context-aware routing are required,' said Ce Zhang, chief technology officer at Together AI. 'Together AI uses its own inference engine to deliver industry-leading performance. NVIDIA Dynamo's openness and modularity allow its components to be seamlessly plugged into the engine to serve more requests while optimizing resource utilization, maximizing investments in accelerated computing. We are pleased to be able to cost-effectively provide our users with open source reasoning models by leveraging the groundbreaking capabilities of NVIDIA Dynamo.'

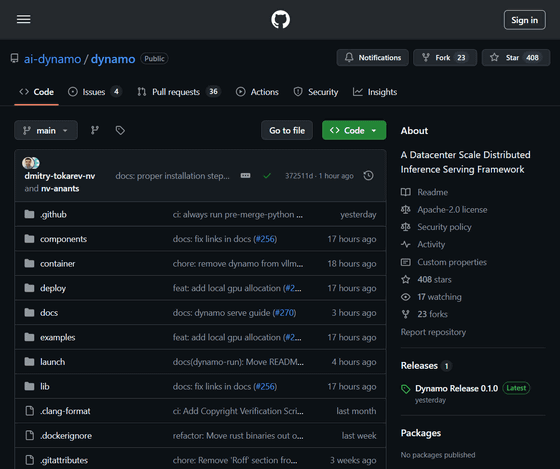

NVIDIA Dynamo is open source and supports PyTorch, SGLang, NVIDIA TensorRT-LLM and vLLM, enabling enterprises, startups and researchers to develop and optimize how they serve AI models across distributed inference. The source code is available on GitHub.

GitHub - ai-dynamo/dynamo: A Datacenter Scale Distributed Inference Serving Framework

https://github.com/ai-dynamo/dynamo
