Google declares it will "not use AI technology for weapons development" and announces its "AI principles"

by Robert Scoble

In response to criticism from its own employees that the company was diverting AI technology to military use, Google has announced its "AI principles". In them, Google sets out seven objectives for developing AI technology and also commits itself to specific restrictions, such as "do not use AI for weapons development".

AI at Google: our principles

https://www.blog.google/topics/ai/ai-principles/

The controversy in which Google employees objected to the company providing AI technology to the US Department of Defense (the Pentagon) is covered in the following article.

Google is secretly helping the Pentagon develop image-analysis AI for drones - GIGAZINE

Military cooperation by Google, a world leader in AI technology, drew a strong public reaction and, above all, strong opposition from more than 4,000 Google employees. In the end, CEO Sundar Pichai himself stepped in to declare the principles governing Google's use of AI.

Google states that "AI has already expanded beyond practical use in Google's own services into a wide range of fields, and its uses will continue to grow in the future," and argues that AI technology will therefore have a significant impact on society. As a leader in AI development, Google has set out the following clear guidelines for AI technology.

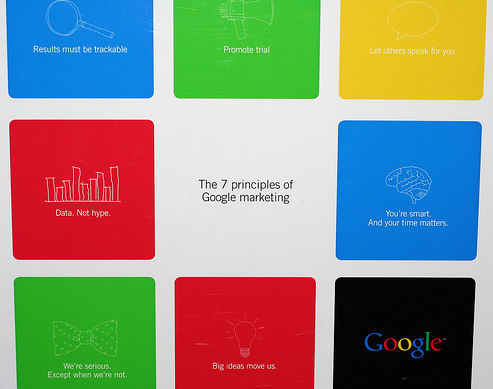

◆ Objectives for developing AI

by Pietro & Silvia

· 1: Be socially beneficial

In considering the potential development and uses of AI technology, Google will take into account a broad range of social and economic factors, and will proceed only where it judges that the likely benefits substantially exceed the foreseeable risks and downsides.

· 2: Avoid creating or reinforcing unfair bias

Google recognizes that algorithms and datasets are susceptible to "unfair bias" and that the mechanisms behind it are complex. It will make efforts to avoid unjust impacts on people, particularly those related to sensitive characteristics such as race, ethnicity, gender, nationality, income, sexual orientation, ability, and political or religious belief.

· 3: Be built and tested for safety

Google will continue to pay close attention to safety in order to avoid unintended harm. It will test AI technology in constrained environments and monitor it after deployment.

· 4: Be accountable to people

Google will design AI systems that provide appropriate opportunities for feedback, relevant explanations, and appeal.

· 5: Privacy-conscious design

Google will incorporate its privacy principles into the development and use of AI technology. It will provide opportunities for notice and consent, encourage architectures with privacy safeguards, and ensure appropriate transparency and control over the use of data.

· 6: Uphold high standards of scientific excellence

Technological innovation is rooted in the scientific method and a commitment to open inquiry, intellectual rigor, integrity, and collaboration. Because AI tools have the potential to open up new areas of research and knowledge in critical fields such as biology, chemistry, medicine, and environmental science, Google aims to maintain high standards of scientific excellence in its AI development. It will work with a range of stakeholders to promote thoughtful leadership in this field.

· 7: Be made available for uses that accord with these principles

When developing AI technology, Google will weigh factors such as: the technology's primary purpose and likely use, including how closely it relates to harmful uses and whether it could be misused; whether the technology is unique or more generally available; whether it will have a significant impact on society; and whether Google is providing general-purpose tools, integrating tools for customers, or developing custom solutions.

In addition to these objectives for developing AI, Google also discloses the areas in which it will not develop AI.

◆ AI technology Google will not develop

· 1: Technologies that cause or are likely to cause overall harm

· 2: Weapons or other technologies whose principal purpose is to cause or directly facilitate injury to people

· 3: Technologies that collect or use information in ways that violate internationally accepted norms

· 4: Technologies whose purpose contravenes widely accepted principles of international law and human rights
