Google removes its pledge not to use AI for weapons from its website

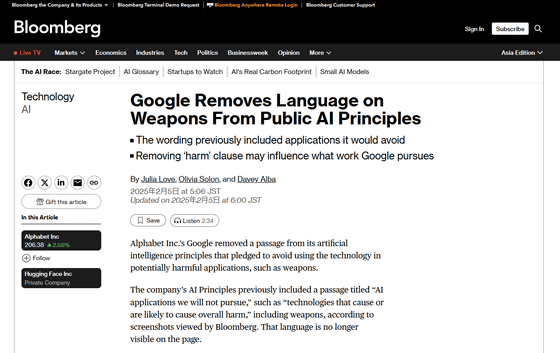

Google has removed from its website a pledge not to develop AI for weapons or surveillance, Bloomberg reports.

Google Removes Language on Weapons From Public AI Principles (GOOGL) - Bloomberg

https://www.bloomberg.com/news/articles/2025-02-04/google-removes-language-on-weapons-from-public-ai-principles

Google removes pledge to not use AI for weapons from website | TechCrunch

https://techcrunch.com/2025/02/04/google-removes-pledge-to-not-use-ai-for-weapons-from-website/

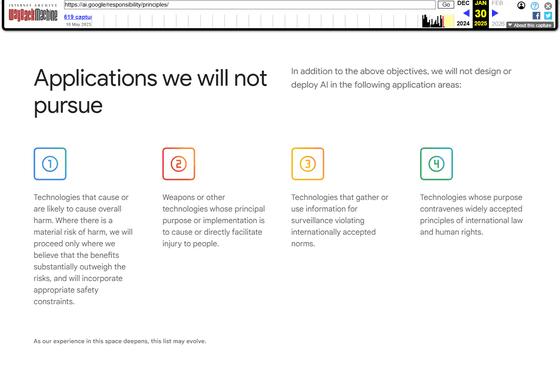

According to Bloomberg, Google's 'AI Principles' previously included a section titled 'Applications we will not pursue,' which pledged not to design or deploy AI for 'technology that causes or has the potential to cause harm in the aggregate,' 'weapons or other technology whose primary purpose or effect is to inflict or directly facilitate harm on humans,' 'technology that collects or uses information for surveillance purposes in violation of internationally recognized norms,' or 'technology whose purpose is to violate international law and widely accepted principles of human rights.'

Indeed, the Internet Archive snapshot of the same page from January 30, 2025 contains a section titled 'Applications we will not pursue.'

AI Principles – Google AI – Google AI (Internet Archive – 2025/01/30 07:56:26)

https://web.archive.org/web/20250130075626/https://ai.google/responsibility/principles/

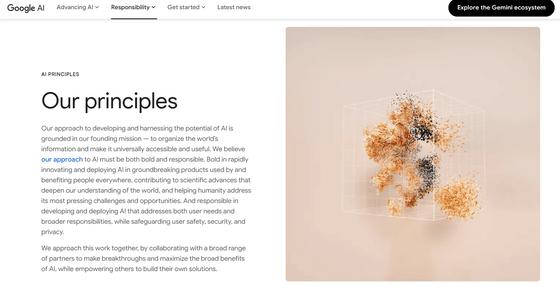

At the time of writing, however, the 'AI Principles' page has been heavily edited and no longer contains anything corresponding to 'Applications we will not pursue.' It does still say that 'we will work to mitigate unintended or harmful consequences and avoid unfair bias' and 'to be consistent with widely accepted principles of international law and human rights.'

AI Principles – Google AI – Google AI

https://ai.google/responsibility/principles/

In the new 'AI Principles,' Google Senior Vice President James Manyika and Google DeepMind CEO Demis Hassabis state that 'democracies should lead AI development and be guided by core values such as freedom, equality, and respect for human rights.' They also argue that 'companies, governments, and organizations that share these values should work together to create AI that protects people and supports global growth and national security.'

Margaret Mitchell, who previously co-led Google's ethical AI team and is now chief ethics scientist at Hugging Face, an AI development platform, said, 'The removal of "Applications we will not pursue" undermines the work of ethical AI researchers and activists, and more troublingly, it opens the door to Google working directly on deploying technology that could take lives.'

When TechCrunch, an IT news site, asked Google for comment on the matter, the company referred to a public blog post titled 'Responsible AI: Our 2024 report and ongoing work.' In the blog post, Google said, 'We believe that companies, governments, and organizations that share these values should work together to develop AI that protects people, drives global growth, and supports national security.'

TechCrunch, for its part, points out that Google has faced strong backlash from some employees over its recent contract to provide cloud services to Israel under 'Project Nimbus.'

More than 50 former Google employees who were fired for protesting Google's business ties with Israel have filed a complaint with regulators alleging illegal retaliation, with some even claiming they were fired for simply watching the protests - GIGAZINE

TechCrunch reported, 'Google claims that its AI is not being used to harm humans, but the Department of Defense's AI chief said Google's AI models are accelerating the U.S. military's process of identifying, tracking and eliminating threats.'

in Software, Posted by log1i_yk