Apple reveals for the first time the secrets of its AI development with deep learning

By Vincent Noel

The tech publication Backchannel interviewed a lineup of senior Apple figures, including Eddy Cue, senior vice president of Internet Software and Services; Craig Federighi, senior vice president of Software Engineering; and Alex Acero, senior director of Siri. The interviews revealed for the first time details of the artificial intelligence development behind Siri and other Apple products.

An Exclusive Look at How AI and Machine Learning Work at Apple - Backchannel

https://backchannel.com/an-exclusive-look-at-how-ai-and-machine-learning-work-at-apple-8dbfb131932b

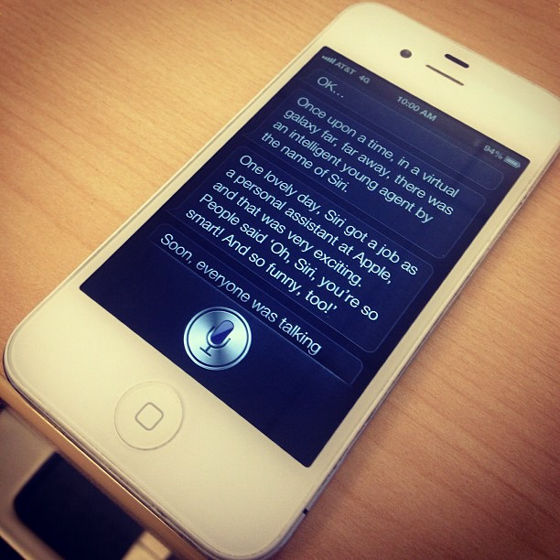

The voice assistant Siri first shipped on the iPhone 4S in 2011, but its speech recognition accuracy was poor at first, and users voiced their dissatisfaction. To address the problem, Apple moved Siri's speech recognition to a system based on a neural network. The change rolled out in the United States in July 2014 and worldwide in August of the same year, but Apple did not tell users that the feature had been improved.

By Dave Schumaker

"Switching to a neural-network-based system cut Siri's error rate to about half of what it was before," said Alex Acero, who leads Siri's speech team. In the interview, he explained that deep learning, a machine learning technique, contributed greatly, and that the way Apple optimized its deep learning was one of the reasons the error rate fell so sharply.

Deep learning is a machine learning method built on neural networks, which loosely imitate the way the human brain works, and it is well suited to pattern recognition tasks such as image and speech recognition. Deep learning greatly improved Siri's recognition accuracy, but the way Apple got there reflects circumstances unique to the company.

Unlike Android devices, where Google develops the OS and other manufacturers build the handsets, Apple develops both the software (iOS) and the hardware (iPhone). When applying deep learning to Siri, Apple's software and hardware developers likewise worked in close communication to get the most out of it.

Craig Federighi, senior vice president of Software Engineering, says: "To get the most out of deep learning, we have to work out the hardware, the microphones and the audio, such as how many microphones to install and where to place them. It is like a concert performed with many different kinds of instruments. In developing a speech recognition system, Apple builds both the hardware and the software, and that gave us a big advantage over companies that develop speech recognition software without knowing what devices it will end up running on."

By Martin Hajek

Apple uses deep learning beyond Siri. Features such as predicting which app the user is about to open and showing it in a short list, suggesting appointments that have not been entered in the calendar, and showing the location of a hotel the user has booked before they even search for it are reportedly made possible by Apple's adoption of deep learning and neural networks.

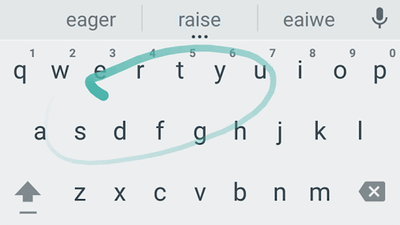

However, getting the most out of deep learning depends heavily on collecting user data and training on it. On the iPhone, for example, all text the user types with the QuickType keyboard is processed by a neural network, but the collected data reportedly stays on the device rather than being sent to Apple's servers. Apple is trying to improve the user experience with deep learning while protecting the security of user data.

Cue said in the interview, "Some people say Apple is falling behind in AI because it does not hold user data, but we have found ways to gather the data we need while protecting privacy." The approach Cue referred to is a technique called "differential privacy," scheduled to be introduced in iOS 10, which is expected to arrive in fall 2016. Differential privacy collects samples of users' usage patterns and adds random noise to them, so that the data can be analyzed without identifying individuals. In iOS 10 it is used for predictive suggestions of words and emoji in Messages, as well as in the Notes app and Spotlight.
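To make the idea of "adding random noise" concrete, here is a minimal sketch of randomized response, one of the simplest local differential privacy mechanisms. It is an illustration only: the article does not say which mechanism Apple uses, and the function names, the privacy parameter `epsilon`, and the 30% example rate are assumptions. Each device reports its true yes/no answer only with a probability set by `epsilon` and otherwise flips it, so no single report reveals the user's real value, yet the server can still estimate the population-wide rate by inverting the known amount of noise.

```python
import math
import random


def randomized_response(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Hypothetical on-device step: report the true bit with
    probability e^eps / (1 + e^eps), otherwise report the flipped bit."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return true_bit if rng.random() < p_truth else not true_bit


def estimate_true_rate(reports: list[bool], epsilon: float) -> float:
    """Hypothetical server-side step: unbiased estimate of the fraction
    of users whose true bit is True, computed from noisy reports only."""
    p = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # E[observed] = p*f + (1 - p)*(1 - f), so f = (observed - (1 - p)) / (2p - 1).
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    epsilon = 1.0
    # Assumed population: about 30% of users use some particular emoji.
    true_bits = [rng.random() < 0.3 for _ in range(100_000)]
    reports = [randomized_response(b, epsilon, rng) for b in true_bits]
    print(f"estimated rate: {estimate_true_rate(reports, epsilon):.3f}")
```

Smaller values of `epsilon` push the reporting probability toward 50%, which gives each user stronger deniability but needs more reports to reach the same estimation accuracy.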

Deep learning is used not only on the iPhone but also with the Apple Pencil stylus. The Apple Pencil has a feature called palm rejection, which keeps the iPad display from reacting to the hand of a user who is writing with the Apple Pencil. Thanks to palm rejection, users can concentrate on their work without worrying about where they rest their hands.

By Aaron Yoo

Deep learning powers the technology that distinguishes Apple Pencil input from touches and swipes made by the hand. Federighi said, "If palm rejection didn't work correctly, writing wouldn't feel right, and the Apple Pencil wouldn't be an excellent product."


in Software, Smartphone, Posted by darkhorse_log