'ModernBERT', the successor to 'BERT', a model that vectorizes data for purposes such as search and classification, has been released

AI research institutes Answer.AI and LightOn have developed ModernBERT, an improved version of Google's natural language processing model BERT.

Finally, a Replacement for BERT: Introducing ModernBERT – Answer.AI

https://www.answer.ai/posts/2024-12-19-modernbert.html

When it was announced, BERT introduced a novel mechanism: when predicting a word in a sentence, it draws on the context after the word as well as the context before it. This feature, together with its ability to learn from raw unlabeled data and to support 'transfer learning,' in which a trained model is reused for other tasks, has been highly regarded and applied in many research studies, and the model is said to have dramatically advanced AI technology. As of 2024, it is said to be the second most downloaded model on HuggingFace.

Researchers at Answer.AI and LightOn have now released 'ModernBERT,' which incorporates many findings from recent research on large language models and updates BERT's architecture and training process.

ModernBERT - an answerdotai Collection

https://huggingface.co/collections/answerdotai/modernbert-67627ad707a4acbf33c41deb

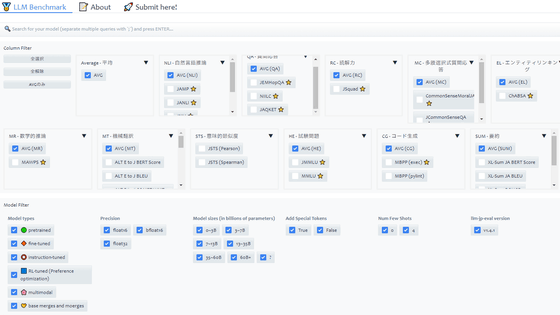

ModernBERT is faster and more accurate than BERT, and extends the context length to 8k (8192) tokens. As a result, it outperforms other models almost across the board in three task categories: search, natural language understanding, and code search.

ModernBERT, like BERT, is an encoder-only model. An encoder-only model outputs a list of numbers (an embedding vector); that is, it literally encodes human-readable text into numbers. Passing the encoded data to a decoder-only model allows that model to generate text and images.
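To make the idea of an embedding vector more concrete, here is a minimal sketch of how such vectors are typically used for search: documents are ranked by cosine similarity to a query vector. The three-dimensional vectors and the document names below are invented for illustration and are not output from ModernBERT; real embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return document names sorted from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), name)
              for name, vec in doc_vecs.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored]


# Hypothetical embeddings, made up for this example (not model output).
doc_vecs = {
    "cat_care": [0.9, 0.1, 0.0],
    "dog_training": [0.7, 0.3, 0.1],
    "tax_filing": [0.0, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.0]  # stands in for an encoded pet-related query

ranking = rank_documents(query_vec, doc_vecs)
print(ranking)
```

In a real system, the vectors would come from running each text through an encoder model, and the ranking step would stay essentially the same.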

Decoder-only models can be used for similar purposes as encoder-only models, but they are mathematically constrained: they are not allowed to peek at tokens that come later and can only look at previous tokens. Encoder-only models, by contrast, are trained so that each token can look both backward and forward, which makes them very efficient at these tasks.
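The difference between looking only backward and looking in both directions can be pictured as an attention mask. The sketch below is a simplified illustration rather than the implementation of any particular model: the function names are hypothetical, and details of real models such as padding are ignored.

```python
def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every position."""
    return [[True] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style mask: token i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def visible_tokens(tokens, mask, i):
    """List the tokens that position i is allowed to look at."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


tokens = ["The", "cat", "sat", "down"]
enc = bidirectional_mask(len(tokens))
dec = causal_mask(len(tokens))

# What can the token "cat" (position 1) see in each setting?
print(visible_tokens(tokens, enc, 1))  # the whole sentence
print(visible_tokens(tokens, dec, 1))  # only "The" and "cat" itself
```

With the bidirectional mask, the representation of "cat" can depend on "sat" and "down"; with the causal mask, it cannot.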

According to the researchers, decoder-only models have the drawback of being too large, too slow, and too expensive for many tasks, which makes them impractical for many users. The researchers likened decoder-only models to the Ferrari SF-23 and encoder-only models to the Honda Civic, saying, 'The former may win races but has high maintenance costs, while the latter is affordable and fuel-efficient.' By improving the performance of encoder-only models, they aimed to offer a cheaper yet more capable option.

In fact, one of the models based on BERT, '

ModernBERT outperforms other models on multiple benchmarks measuring performance in search (DPR/ColBERT), natural language understanding (NLU), and code search (Code). It also has the advantage of handling inputs of 8k tokens, which is 16 times longer than what most other encoder-only models accept.

Researchers from Answer.AI and LightOn said they are “looking forward to seeing how the community uses ModernBERT.”
