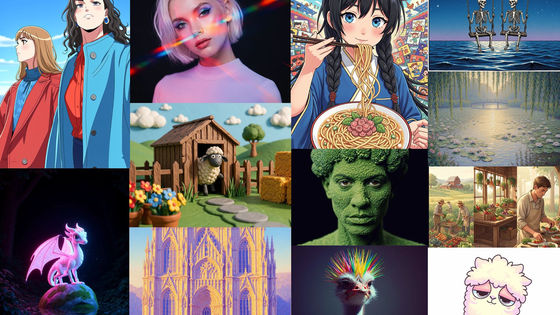

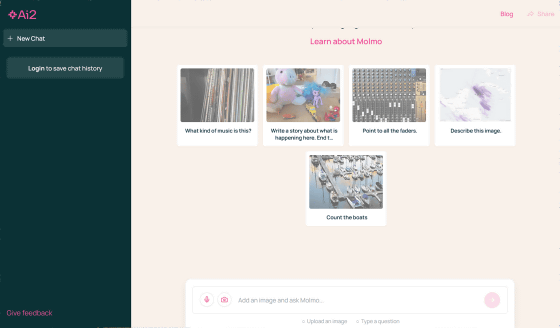

'Molmo,' a small multimodal AI with performance comparable to OpenAI's and Google's AI, is released as open source along with a browser-based demo page

On September 25, 2024, Ai2 (the Allen Institute for AI) released Molmo, a family of open multimodal AI models.

Molmo by Ai2

https://molmo.allenai.org/

https://molmo.allenai.org/blog

Meet Molmo: a family of open, state-of-the-art multimodal AI models.

Our best model outperforms proprietary systems, using 1000x less data.

Molmo doesn't just understand multimodal data—it acts on it, enabling rich interactions in both the physical and virtual worlds.

Try it… pic.twitter.com/kS4W1wYDPx

— Ai2 (@allen_ai) September 25, 2024

Ai2's Molmo shows open source can meet, and beat, closed multimodal models | TechCrunch

https://techcrunch.com/2024/09/25/ai2s-molmo-shows-open-source-can-meet-and-beat-closed-multimodal-models/

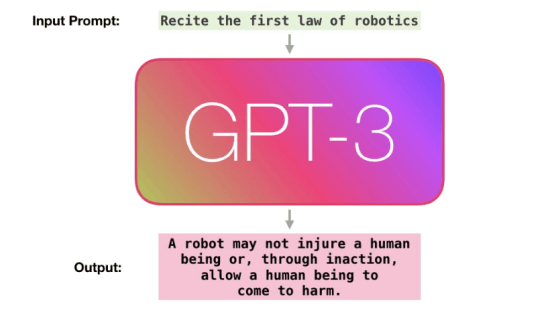

The video below shows what kind of AI Ai2's newly released Molmo is.

👋 Meet Molmo: A Family of Open State-of-the-Art Multimodal AI Models - YouTube

In the video, a man photographs a bag of snacks with his smartphone and asks aloud, 'Is this vegan?'

Molmo replied in a synthesized voice, 'No, this is not a vegan meal.' As this shows, Molmo can correctly recognize what is in an image and answer people's questions about it.

It can also count specific objects in an image and point to each one. For example, you can photograph a table with many people sitting around it and ask Molmo to 'count the people.'

Molmo then marked each person in the photo with a pink pointer and answered that there were 21 people in total.
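Counting by pointing, as in the demo described above, comes down to placing one marker per object and then counting the markers. The sketch below is a minimal illustration that assumes the model's answer embeds its markers as XML-like `<point>`/`<points>` tags with coordinates given as percentages of the image size. That tag format and the helper names `extract_points`, `count_objects`, and `to_pixels` are hypothetical, chosen for illustration; they are not an API described in this article.

```python
import re

# ASSUMPTION (hypothetical format for illustration): objects are marked with tags like
# <point x="12.5" y="40.0" alt="person">person</point>, or grouped as
# <points x1=".." y1=".." x2=".." y2=".." alt="..">...</points>,
# with coordinates as percentages (0-100) of image width and height.
_TAG = re.compile(r"<points?\b[^>]*>")
_COORD = re.compile(r'\b([xy])(\d*)="([\d.]+)"')


def extract_points(answer: str) -> list[tuple[float, float]]:
    """Collect every (x, y) pair found in point/points opening tags."""
    points = []
    for tag in _TAG.findall(answer):
        xs, ys = {}, {}
        for axis, idx, value in _COORD.findall(tag):
            (xs if axis == "x" else ys)[idx] = float(value)
        # Pair x1 with y1, x2 with y2, ...; an unnumbered x/y pair is a single point.
        for idx in sorted(xs, key=lambda i: int(i) if i else 0):
            if idx in ys:
                points.append((xs[idx], ys[idx]))
    return points


def count_objects(answer: str) -> int:
    """The count is simply the number of markers the model placed."""
    return len(extract_points(answer))


def to_pixels(points, width, height):
    """Convert percentage coordinates to pixel positions, e.g. for drawing pink dots."""
    return [(x / 100 * width, y / 100 * height) for x, y in points]


answer = (
    'Counting the <points x1="10.0" y1="20.0" x2="30.5" y2="40.5" '
    'x3="70.0" y3="15.0" alt="dogs">dogs</points> shows a total of 3.'
)
print(count_objects(answer))  # → 3
```

Deriving the count from the markers, rather than trusting a number written in the prose, keeps the stated total and the visible dots consistent with each other.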

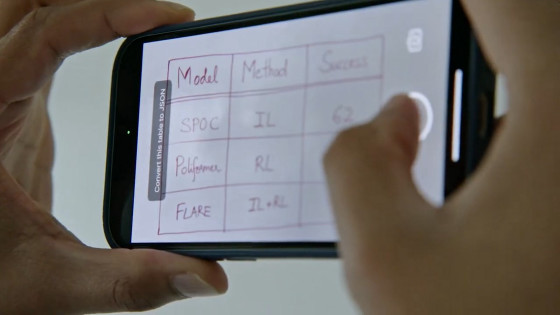

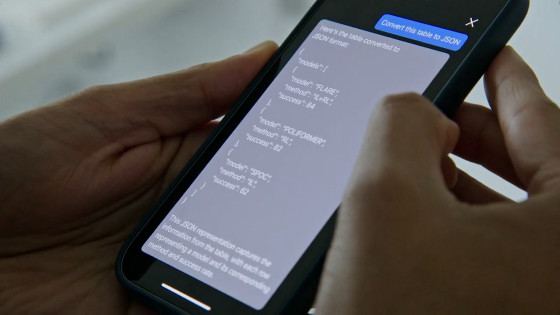

It also handles coding-related tasks and can respond to requests such as 'Convert this table into a JSON file.'

You can also photograph a bike and have Molmo write a listing for selling it on Craigslist.

It can even answer more complex questions about a photo of a sign next to a parking space, such as 'Can I park here at 1pm on Monday? If so, for how long?'

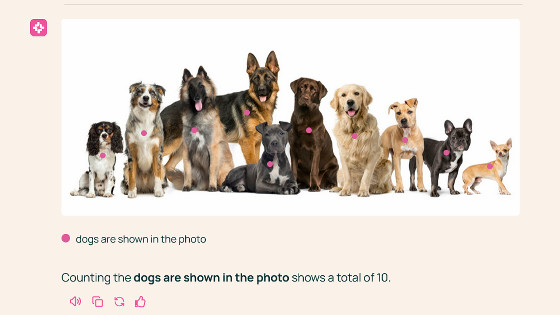

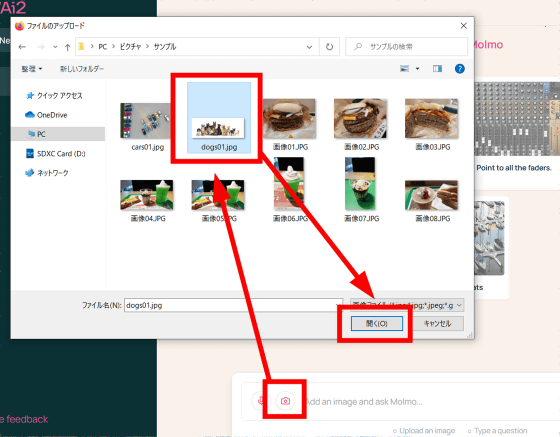

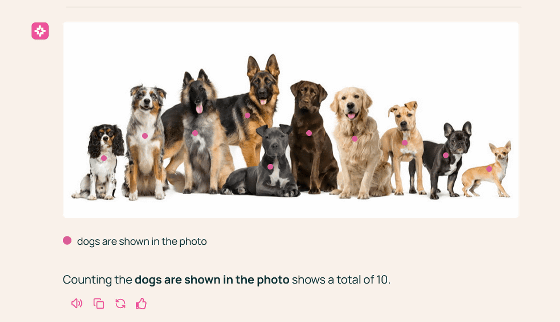

To try Molmo on the browser demo page, click the camera icon in the input form at the bottom and select any image. In this example, I chose an image with lots of dogs in it.

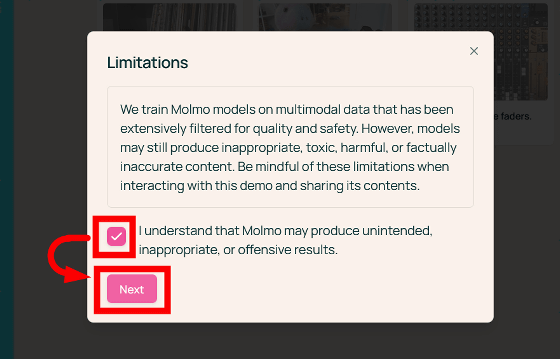

A warning is then displayed stating, 'Molmo is trained on multimodal data that has been filtered for quality and safety, but may generate inappropriate, harmful, or inaccurate content.' Check the box for the statement 'I understand that Molmo may produce unintended, inappropriate, or offensive results,' and click 'Next.'

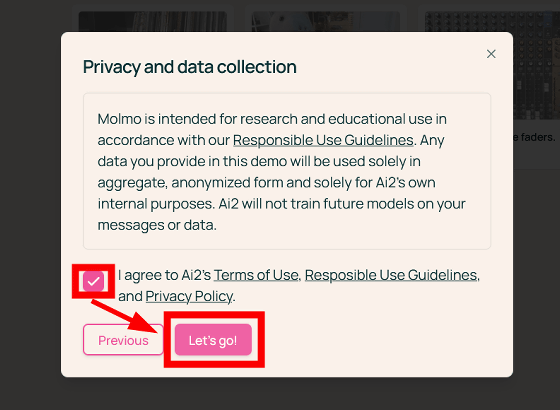

Next, a notice explains that the data you provide on the demo page will be anonymized and used in aggregate within Ai2, and that Ai2 will not use the messages or data you enter there to train future models. If you agree to Ai2's

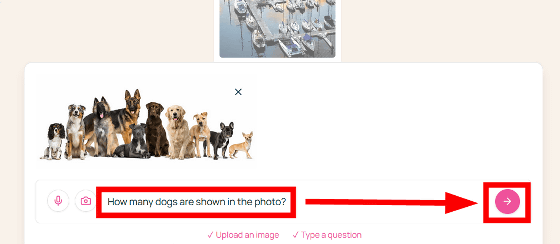

Next, type a question about the image. This time, I asked, 'How many dogs are shown in the photo?'

After a few seconds, processing finished and Molmo returned the answer: 'Counting the dogs are shown in the photo shows a total of 10.' Each dog is marked with a pink dot, which makes the result easy to check.

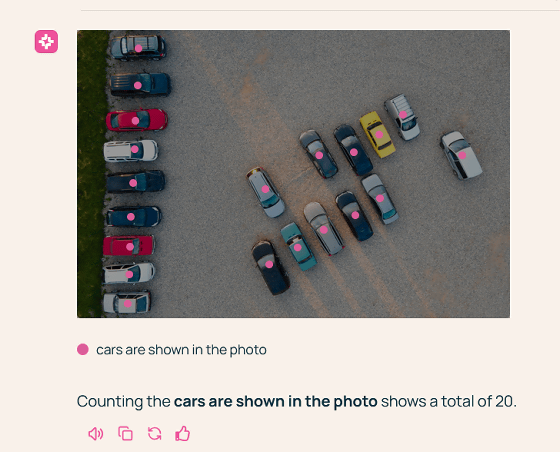

Next, I selected a photo with multiple cars and asked, 'How many cars are shown in the photo?' Molmo quickly counted them and answered, '20 cars.'

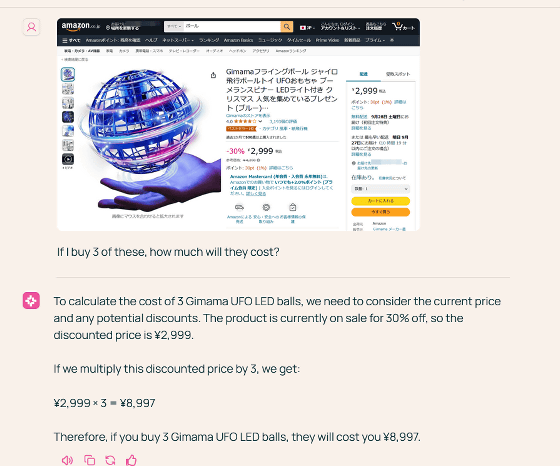

Next, I sent a screenshot of an Amazon page selling

Molmo recognized that the sale price was 2,999 yen, 30% off the regular price, and correctly answered that buying three would cost 8,997 yen.
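The arithmetic Molmo had to get right here is a single multiplication of the sale price it read from the screenshot by the quantity:

```latex
3 \times 2{,}999\ \text{yen} = 8{,}997\ \text{yen}
```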

Molmo is not a full-featured chatbot like ChatGPT and offers no API or enterprise integrations, but like other multimodal AIs, it can answer questions about a wide range of everyday situations and objects.

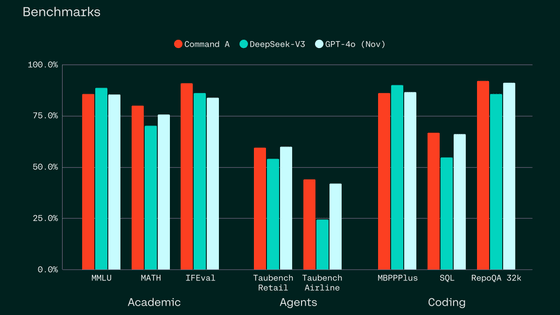

AI development has long followed the principle that 'the more training data and parameters, the better,' but continuing down that path will eventually lead to data shortages and rising computing costs. Molmo, by contrast, is said to deliver extremely high performance with far fewer parameters than state-of-the-art AI, with models at 72B, 7B, and 1B parameters.

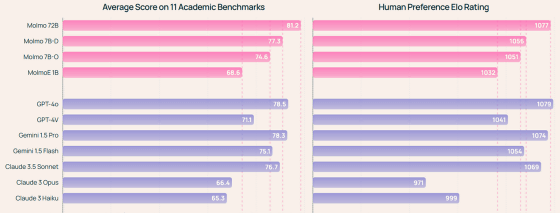

Below is a graph comparing Molmo's performance with OpenAI's GPT series and Google's Gemini series. The left panel shows the average score across 11 academic benchmarks, and the right panel shows human ratings. Pink indicates the scores of the various Molmo models, and blue indicates the scores of other AIs such as the GPT series, Gemini, and Claude. Molmo achieves scores comparable to cutting-edge AIs such as GPT-4o and Gemini 1.5 Pro, even though its models are said to be only about one-tenth their size.

Molmo performs well despite its small training set because that data is high quality. Rather than a dataset of billions of images that includes low-quality and duplicate ones, Molmo uses a dataset of 600,000 high-quality images annotated with descriptions that people spoke aloud about each image.

The release of Molmo, which is completely free and open source, enables developers and creators to create AI-powered apps, services and experiences without the need for permission from or payment to major technology companies. Ai2 CEO Ali Farhadi said, 'We are targeting researchers, developers, app developers, and people who don't know how to handle these large-scale models. The key principle in targeting such a wide audience is what we have been promoting for some time: making it more accessible.'

Molmo's model is open-sourced and available on the machine learning platform Hugging Face.

Molmo - a Allenai Collection

https://huggingface.co/collections/allenai/molmo-66f379e6fe3b8ef090a8ca19


in AI, Video, Software, Web Service, Review, Posted by log1h_ik