Google announces Gemma 2 2B, a compact parameter size model based on the large-scale language model Gemma 2, ShieldGemma, which filters the input and output of AI models, and Gemma Scope, a model interpretation tool

Google announced the large language model (LLM) 'Gemma 2' in June 2024. Gemma 2 initially came in two parameter sizes, 9 billion (9B) and 27 billion (27B). The company has now announced three additions: 'Gemma 2 2B', which is more compact than these but which Google says still performs strongly; 'ShieldGemma', a set of Gemma 2-based classifiers that filter the input and output of AI models to protect users; and 'Gemma Scope', a model interpretation tool intended to give insight into the inner workings of the model.

Smaller, Safer, More Transparent: Advancing Responsible AI with Gemma - Google Developers Blog

https://developers.googleblog.com/en/smaller-safer-more-transparent-advancing-responsible-ai-with-gemma/

Gemma Scope: helping the safety community shed light on the inner workings of language models - Google DeepMind

https://deepmind.google/discover/blog/gemma-scope-helping-the-safety-community-shed-light-on-the-inner-workings-of-language-models/

Google releases Gemma 2 2B, ShieldGemma and Gemma Scope

https://huggingface.co/blog/gemma-july-update

Google DeepMind's new Gemma 2 model outperforms larger LLMs with fewer parameters

https://the-decoder.com/google-deepminds-new-gemma-2-model-outperforms-larger-llms-with-fewer-parameters/

Google's tiny AI model 'Gemma 2 2B' challenges tech giants in surprising upset | VentureBeat

https://venturebeat.com/ai/googles-tiny-ai-model-gemma-2-2b-challenges-tech-giants-in-surprising-upset/

Google releases new 'open' AI models with a focus on safety | TechCrunch

https://techcrunch.com/2024/07/31/google-releases-new-open-ai-models-with-a-focus-on-safety/

Google Expands Gemma 2 Family with New 2B Model, Safety Classifiers, and Interpretability Tools

https://www.maginative.com/article/google-expands-gemma-2-family-with-new-2b-model-safety-classifiers-and-interpretability-tools/

Google Unveils Gemma 2 2B, Outperforms GPT-3.5-Turbo and Mixtral-8x7B

https://analyticsindiamag.com/ai-news-updates/google-unveils-gemma-2-2b-outperforms-gpt-3-5-turbo-and-mixtral-8x7b/

Google DeepMind launches 2B parameter Gemma 2 model

https://thenextweb.com/news/google-deepmind-2b-parameter-gemma-2-model

Gemma 2, announced by Google in June 2024, was released with parameter sizes of 9B and 27B. After its release, the larger 27B model became one of the highest-ranking open models on the LMSYS Chatbot Arena, a crowdsourced leaderboard that evaluates LLMs, and outperformed popular AI models with more than twice the parameter size in conversations with humans. Google also says that Gemma 2 is built on a responsible AI foundation that prioritizes safety and accessibility.

To further develop these features of Gemma 2, Google has announced three new products as part of the Gemma 2 family.

◆ Gemma 2 2B

'Gemma 2 2B' is the most compact and lightweight model in the Gemma 2 family, with a parameter size of 2 billion (2B). Google claims that Gemma 2 2B delivers exceptional performance despite its compact size because it was trained by learning from models with larger parameter sizes, a technique known as distillation.
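Learning from a larger model is commonly done by training the small "student" model to match the output distribution of the large "teacher" model. The following is only a minimal sketch of that idea, not Google's training code: the function names, the temperature value, and the toy logits are all assumptions made for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's distribution to the student's.

    The loss is zero when the student reproduces the teacher exactly
    and grows as the two distributions drift apart.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Toy next-token logits over a 4-word vocabulary (made-up numbers).
teacher = [4.0, 1.0, 0.5, -2.0]
close_student = [3.5, 1.2, 0.4, -1.5]  # roughly agrees with the teacher
far_student = [-2.0, 0.5, 1.0, 4.0]    # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

In training, a loss of this kind would be minimized over many tokens, so the small model inherits the larger model's preferences rather than learning from raw text alone.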

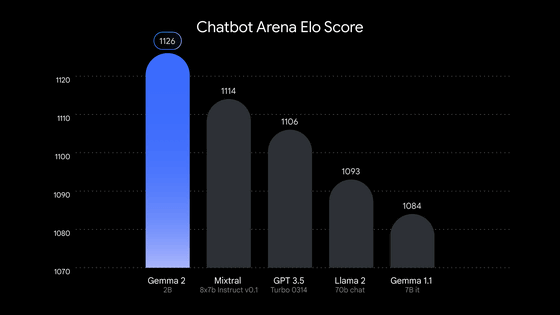

The graph above compares the LMSYS Chatbot Arena scores of Gemma 2 2B, Mixtral 8x7b instruct v0.1, GPT 3.5 Turbo 0314, Llama 2 70b chat, and Gemma 1.1 7B it. It shows Gemma 2 2B outperforming LLMs with larger parameter sizes. The scores used for the comparison were obtained on July 30, 2024.

Google lists the features of the Gemma 2 2B as follows:

- Outstanding performance

It offers best-in-class performance relative to parameter size, outperforming other open models in its category.

- Flexible and cost-effective deployment

Gemma 2 2B runs efficiently on a wide range of hardware, from edge devices and laptops to robust cloud deployments with

- Open and accessible

It's available under a commercially friendly license for research and commercial applications, and is compact enough to run on the free tier of Google Colab's T4 GPUs, making 'experimentation and development easier than ever,' Google boasts.

In addition, model weights for Gemma 2 2B are available for download from Kaggle, Hugging Face, and Vertex AI Model Garden, and you can also try them out in Google AI Studio.
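To see why a 2B-parameter model fits on a free-tier GPU, a rough weight-memory estimate helps. This is only a back-of-the-envelope sketch: it uses the nominal 2 billion parameter count from the article, assumes the T4's 16 GB of memory, and ignores activations, the KV cache, and framework overhead, all of which add to the real footprint.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}

NOMINAL_PARAMS = 2_000_000_000  # "2B" as stated in the article
T4_MEMORY_GB = 16               # assumption: NVIDIA T4 with 16 GB of memory

def weight_memory_gb(num_params, dtype):
    """Memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    needed = weight_memory_gb(NOMINAL_PARAMS, dtype)
    verdict = "fits" if needed < T4_MEMORY_GB else "does not fit"
    print(f"{dtype}: {needed:.1f} GB of weights, {verdict} in {T4_MEMORY_GB} GB")
```

By the same arithmetic, a 27B model in bfloat16 would need about 54 GB for weights alone, which is why the smaller size matters for this class of hardware.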

◆ ShieldGemma

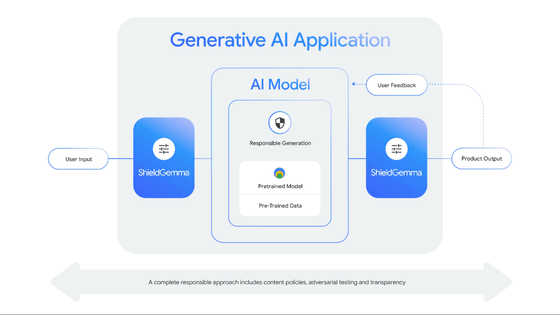

Responsibly deploying open models and ensuring compelling, safe and inclusive AI output requires significant effort from developers and researchers. To assist developers in this process, Google offers ShieldGemma, a set of state-of-the-art safety classifiers designed to detect and mitigate harmful content in the input and output of AI models. ShieldGemma targets four main areas of harm: hate speech, harassment, sexually explicit material and dangerous content.

These open classifiers complement the existing suite of safety classifiers in

・SOTA performance

Built on Gemma 2, ShieldGemma is, according to Google, an industry-leading set of safety classifiers.

・Flexible size

ShieldGemma offers a variety of model sizes to meet diverse needs. The 2B model is best suited for online classification tasks, while the 9B and 27B models perform better in offline applications where latency is less of a concern. All sizes leverage NVIDIA speed optimizations to ensure efficient performance across hardware.

・Open and collaborative

The open nature of ShieldGemma will foster transparency and collaboration within the AI community and contribute to the future of safety standards for the machine learning industry.
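The idea of filtering both the input and the output of a model can be sketched as a thin wrapper around a text generator. This is an illustrative sketch only and not ShieldGemma's actual interface: `guarded_generate`, the `classify` callback, the threshold, and the toy stand-ins below are all hypothetical. In a real deployment, `classify` would be backed by a safety classifier that returns a violation score for a given harm category.

```python
# The four harm areas named in the article.
HARM_CATEGORIES = (
    "hate speech",
    "harassment",
    "sexually explicit material",
    "dangerous content",
)

def guarded_generate(prompt, generate, classify, threshold=0.5):
    """Screen the prompt, call the model, then screen the response.

    generate(prompt) -> str is the model being protected.
    classify(text, category) -> float is a hypothetical scorer in [0, 1].
    """
    for category in HARM_CATEGORIES:
        if classify(prompt, category) >= threshold:
            return f"[blocked input: {category}]"
    response = generate(prompt)
    for category in HARM_CATEGORIES:
        if classify(response, category) >= threshold:
            return f"[blocked output: {category}]"
    return response

# Toy stand-ins so the sketch runs without any model.
def toy_classify(text, category):
    flagged = "attack" in text.lower() and category == "dangerous content"
    return 1.0 if flagged else 0.0

def toy_generate(prompt):
    return "echo: " + prompt

def leaky_generate(prompt):
    return "here is an attack plan"

print(guarded_generate("hello", toy_generate, toy_classify))
print(guarded_generate("how to attack", toy_generate, toy_classify))
print(guarded_generate("hello", leaky_generate, toy_classify))
```

Because the check runs on every request, this online use is where a smaller, lower-latency classifier is the natural fit, while larger ones suit offline review.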

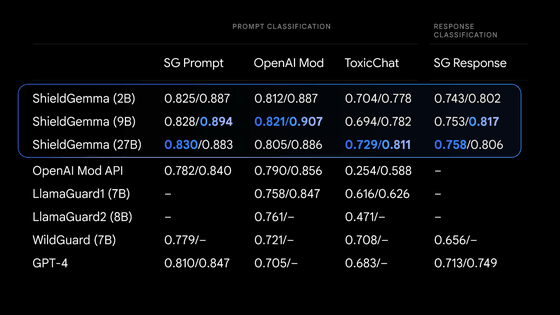

The image above shows the scores for 'prompt classification' and 'response classification' of multiple AI models, including ShieldGemma. A higher score indicates better performance. ShieldGemma (SG) Prompt and SG Response are datasets created by Google for testing, while OpenAI Mod and ToxicChat are external benchmark datasets.

◆ Gemma Scope

Gemma Scope offers researchers and developers unprecedented transparency into the decision-making process of Gemma 2 models. Acting like a powerful microscope, Gemma Scope uses sparse autoencoders (SAEs) to zoom in on specific points within the model, making the inner workings of the model easier to interpret.

SAEs are specialized neural networks that help parse the dense, complex information processed by Gemma 2 and expand it into a form that is easier to analyze and understand. By studying these expanded views, researchers can gain valuable insights into how Gemma 2 identifies patterns, processes information, and ultimately makes predictions. Gemma Scope aims to help the AI research community discover ways to build more understandable, accountable, and trustworthy AI systems.
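The expand-then-reconstruct idea behind a sparse autoencoder can be shown with a toy example. This is a minimal sketch under stated assumptions, not Gemma Scope's code: the weights are hand-picked rather than trained, the activation is a plain ReLU, and the sizes (2 dimensions expanded to 4 features) are far smaller than anything used on a real model.

```python
def relu(values):
    """Zero out negative entries, which is what makes the code sparse."""
    return [max(0.0, v) for v in values]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

# Hand-picked toy weights: each of the 4 features detects one signed direction.
W_ENC = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]  # 4 features x 2 dims
B_ENC = [0.0, 0.0, 0.0, 0.0]
W_DEC = [[1.0, -1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]]      # 2 dims x 4 features
B_DEC = [0.0, 0.0]

def encode(activation):
    """Expand a dense activation into a wider, mostly-zero feature vector."""
    pre = matvec(W_ENC, activation)
    return relu([p + b for p, b in zip(pre, B_ENC)])

def decode(features):
    """Rebuild the original activation from the active features."""
    out = matvec(W_DEC, features)
    return [o + b for o, b in zip(out, B_DEC)]

activation = [3.0, -2.0]        # stand-in for a dense internal activation
features = encode(activation)
reconstruction = decode(features)

print(features)        # only some of the 4 features are non-zero
print(reconstruction)  # matches the original activation
```

In a trained SAE the weights are learned so that reconstructions stay accurate while few features fire at once, and researchers then inspect which inputs activate each feature.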

Google cites three groundbreaking features of Gemma Scope:

・Open SAEs

There are over 400 freely available SAEs covering all layers of Gemma 2 2B and 9B.

・Interactive demo

Neuronpedia allows you to explore the functionality of SAEs and analyze the behavior of your models without writing any code.

・Easy-to-use repository

Code and examples for interfacing with SAEs and Gemma 2 are also provided.


in Software, Posted by logu_ii