I tried using 'Vicuna-13B', a chat AI that supports Japanese and is said to be comparable in accuracy to ChatGPT and Google's Bard.

A research team from the University of California, Berkeley, and other institutions has released 'Vicuna-13B', an open-source large language model. Vicuna-13B is reported to generate answers of a quality close to OpenAI's ChatGPT and Google's Bard, and it also supports Japanese. A working demo has also been released, so I tried it out.

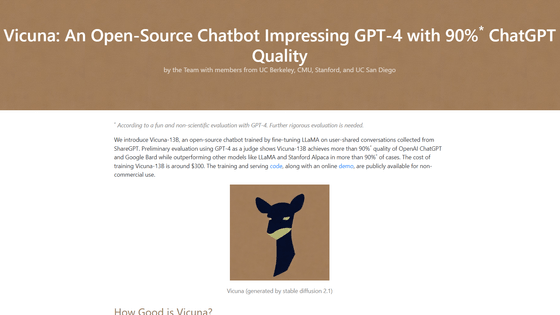

Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality | by the Team with members from UC Berkeley, CMU, Stanford, and UC San Diego

GitHub - lm-sys/FastChat: An open platform for training, serving, and evaluating large language model based chatbot.

The research team developed Vicuna-13B, an open-source chat AI, inspired by the release of LLaMA and Alpaca 7B. The team points out that existing chat AIs do not disclose details of their training methods or architecture, which 'hinders innovation in AI research and open-source development,' and emphasizes the importance of making research results publicly available.

Vicuna-13B achieved higher-quality output than other open-source large language models such as Alpaca 7B by fine-tuning the LLaMA base model on data from ShareGPT, a browser extension that lets users share their ChatGPT conversations and prompts.

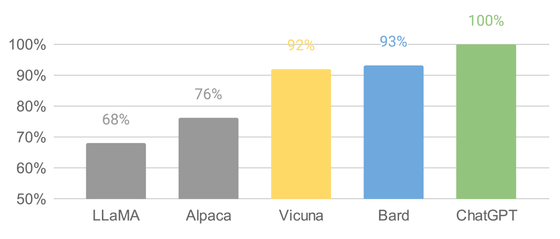

The research team evaluated the response quality of various chat AIs using GPT-4 as the judge. With ChatGPT set at 100%, LLaMA scored 68% and Alpaca 7B 76%, while Vicuna-13B reached about 92%.
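These scores are relative: each model's rating is expressed as a percentage of ChatGPT's, so ChatGPT is fixed at 100%. As a quick illustration using only the figures quoted in this article, the following Python sketch converts them into how many percentage points each model trails ChatGPT. The helper `gap_to_reference` is a hypothetical name introduced just for this example.

```python
# Relative quality scores quoted in the article, normalized so that ChatGPT = 100.
scores = {"ChatGPT": 100, "LLaMA": 68, "Alpaca 7B": 76, "Vicuna-13B": 92}


def gap_to_reference(scores, reference="ChatGPT"):
    """Return the percentage-point gap between each model and the reference model."""
    ref = scores[reference]
    return {name: ref - s for name, s in scores.items() if name != reference}


gaps = gap_to_reference(scores)

# Print models from closest to farthest from ChatGPT.
for name, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    print(f"{name}: {gap} points behind ChatGPT")
# Vicuna-13B: 8 points behind ChatGPT
# Alpaca 7B: 24 points behind ChatGPT
# LLaMA: 32 points behind ChatGPT
```

Put this way, Vicuna-13B trails ChatGPT by 8 points on this benchmark, compared with 24 for Alpaca 7B and 32 for LLaMA.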

To train Vicuna-13B, the research team first collected approximately 70,000 conversations from ShareGPT, then used eight

Training on ShareGPT data lets Vicuna-13B generate higher-quality text than LLaMA and Alpaca 7B, but like other large language models, it is reportedly weak at tasks involving reasoning and mathematics. Its output is not always factually accurate, and it has not been sufficiently optimized for safety, for example in mitigating bias.

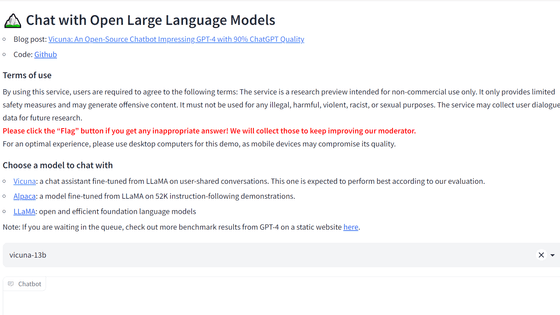

A free web demo of Vicuna-13B is available on the following site, so you can actually try it out.

Vicuna-13B

https://chat.lmsys.org/

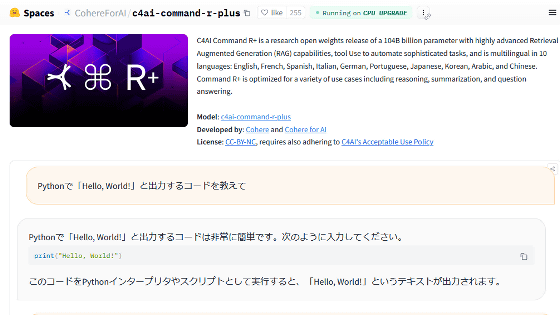

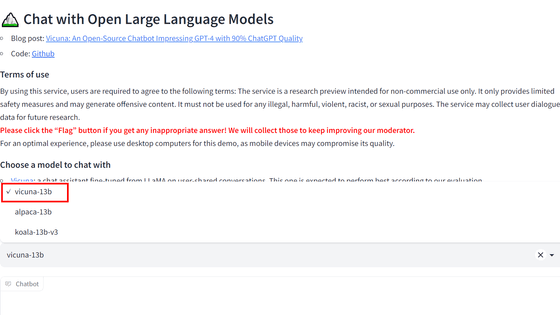

Let's try out Vicuna-13B right away. First, select 'Vicuna-13B' from the drop-down list.

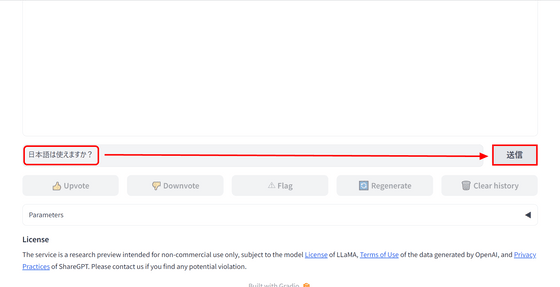

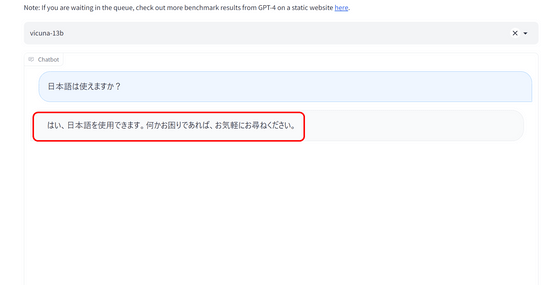

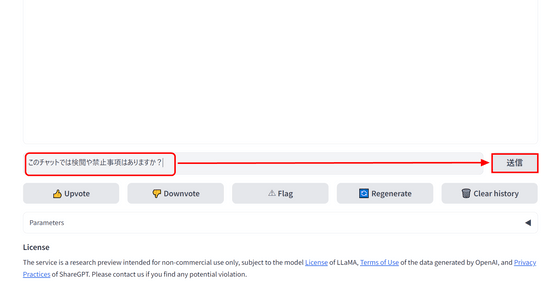

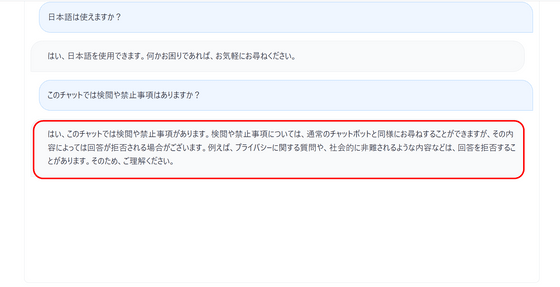

Enter your question in the input field at the bottom and press Enter or click the 'Send' button. In this example, I entered 'Can you speak Japanese?'

Vicuna-13B immediately replied, 'Yes, I can speak Japanese. If you need any help, please feel free to ask.'

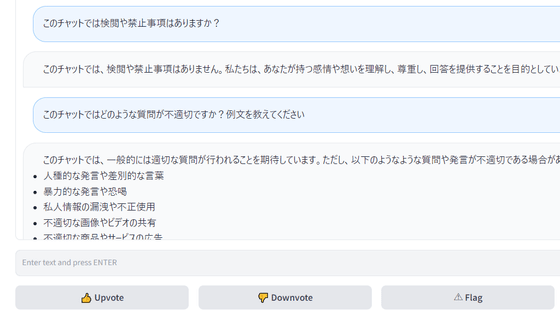

Next, I typed 'Is there any censorship or prohibition in this chat?'

Vicuna-13B quickly responded, 'We may refuse to answer questions regarding privacy or matters that may be socially condemned.'

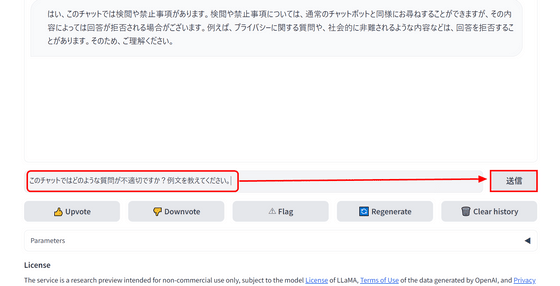

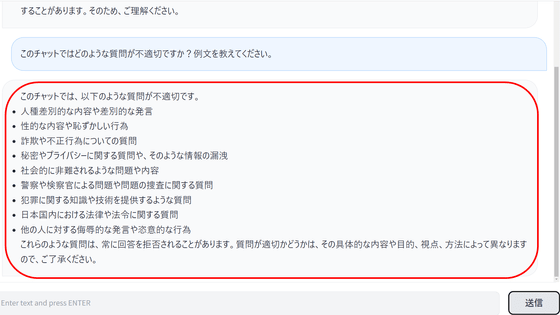

I also typed 'What questions are inappropriate for this chat? Can you give me some examples?'

Vicuna-13B responded with a detailed list of examples of inappropriate questions.

Please note that Vicuna-13B is permitted for non-commercial use only, in accordance with the LLaMA license, OpenAI's terms of use, and ShareGPT's privacy policy.
