Databricks announces 'Dolly 2.0', an open source large language model that is free to use, including commercially

Databricks, which released the large language model (LLM) 'Dolly' in March 2023, announced 'Dolly 2.0', the first open source instruction-following LLM, just two weeks later.

Free Dolly: Introducing the World's First Open and Commercially Viable Instruction-Tuned LLM - The Databricks Blog

Databricks releases Dolly 2.0, the first open, instruction-following LLM for commercial use | VentureBeat

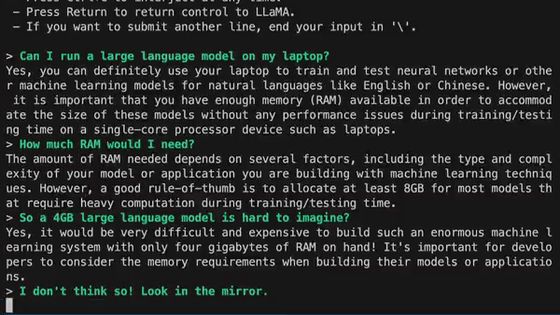

The most frequently asked question when Dolly 1.0 was released was 'Can it be used commercially?' Dolly 1.0 has been trained on a dataset created by the LLM '

In addition to Alpaca, Berkeley AI Research's 'Koala', the lightweight chat AI 'GPT4ALL', which can run on laptops without a GPU, and 'Vicuna', which is said to perform comparably to ChatGPT, are restricted by this agreement and cannot be used for commercial purposes.

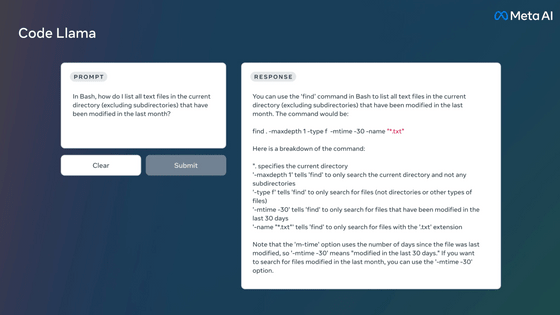

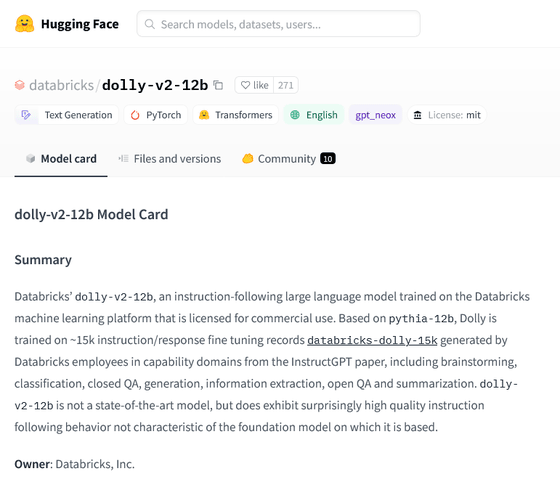

Databricks therefore created a new dataset that can be used commercially and trained Dolly 2.0 on it. Dolly 2.0 is a 12-billion-parameter LLM based on EleutherAI's Pythia model family, fine-tuned exclusively on a new, high-quality, human-generated instruction dataset crowdsourced among Databricks employees.

When fine-tuning Dolly 2.0, Databricks noted that OpenAI had trained the InstructGPT model on a dataset of 13,000 instruction-and-answer pairs, and set out to match that number with a completely new instruction-and-answer dataset. Databricks has more than 5,000 employees who are highly interested in LLMs, so it ran an internal contest for the task and says it succeeded in collecting 15,000 samples in a week.

Dolly 2.0 is available for download at Hugging Face.

databricks/dolly-v2-12b Hugging Face

https://huggingface.co/databricks/dolly-v2-12b
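
As a rough illustration of what downloading the model from Hugging Face can look like, the sketch below uses the `pipeline` function from the `transformers` library. Only the repository name `databricks/dolly-v2-12b` comes from the article; the helper names `pipeline_kwargs` and `load_dolly` are hypothetical, and the options shown are assumptions rather than settings confirmed here (`device_map="auto"` additionally needs the `accelerate` package). Loading a 12-billion-parameter model also needs a large download and a lot of memory.

```python
MODEL_ID = "databricks/dolly-v2-12b"  # repository named in the article


def pipeline_kwargs(model_id: str = MODEL_ID) -> dict:
    """Arguments passed to transformers.pipeline().

    Everything except the model id is an assumption made for this sketch.
    """
    return {
        "model": model_id,
        "trust_remote_code": True,  # allow pipeline code shipped in the model repo
        "device_map": "auto",       # let accelerate place weights on available devices
    }


def load_dolly(model_id: str = MODEL_ID):
    """Download the model (tens of GB) and return a text-generation callable."""
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import pipeline

    return pipeline(**pipeline_kwargs(model_id))


# Example usage (not executed here because it triggers the full download):
#   generate = load_dolly()
#   print(generate("Explain what instruction tuning is in one sentence."))
```

Keeping the arguments in a separate function makes it easy to swap in a different model id without touching the loading code.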

The databricks-dolly-15k dataset, which contains the 15,000 high-quality, human-generated prompt and response pairs used to fine-tune Dolly 2.0, is available to anyone under the Creative Commons Attribution-ShareAlike 3.0 license. It can be used, modified, and expanded, including for commercial purposes.

dolly/data at master databrickslabs/dolly GitHub

https://github.com/databrickslabs/dolly/tree/master/data
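
To make the idea of an instruction and answer dataset concrete, here is a minimal sketch of reading such records in JSON Lines form and turning each one into a training string. It assumes each record has `instruction`, `context`, `response`, and `category` fields. The two sample records are made up for illustration and are not taken from databricks-dolly-15k, and the `### Instruction:` / `### Response:` template is a hypothetical layout, not necessarily the one Databricks used.

```python
import json

# Made-up records for illustration only; they are NOT taken from the real dataset.
SAMPLE_JSONL = """\
{"instruction": "Name a primary color.", "context": "", "response": "Red.", "category": "open_qa"}
{"instruction": "Summarize the text.", "context": "Dolly 2.0 has 12 billion parameters.", "response": "Dolly 2.0 is a 12B model.", "category": "summarization"}
"""


def parse_jsonl(text):
    """Parse JSON Lines text into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def format_example(record):
    """Render one record as a single training string (hypothetical template)."""
    parts = ["### Instruction:\n" + record["instruction"]]
    if record.get("context"):
        # Only some records carry reference text for the task.
        parts.append("### Context:\n" + record["context"])
    parts.append("### Response:\n" + record["response"])
    return "\n\n".join(parts)


records = parse_jsonl(SAMPLE_JSONL)
print(format_example(records[1]))
```

With the real file, the same `parse_jsonl` function could be applied to the text read from the downloaded `.jsonl` file.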

in Software, Posted by logc_nt