OpenAI's GPT-4o adds 'image fine-tuning' function, improving task performance with just 100 images

The AI model '

🖼 We're adding support for vision fine-tuning. You can now fine-tune GPT-4o with images, in addition to text. Free training till October 31, up to 1M tokens a day. https://t.co/Nqi7DYYiNC pic.twitter.com/g8N68EIOTi

— OpenAI Developers (@OpenAIDevs) October 1, 2024

Introducing vision to the fine-tuning API | OpenAI

https://openai.com/index/introducing-vision-to-the-fine-tuning-api/

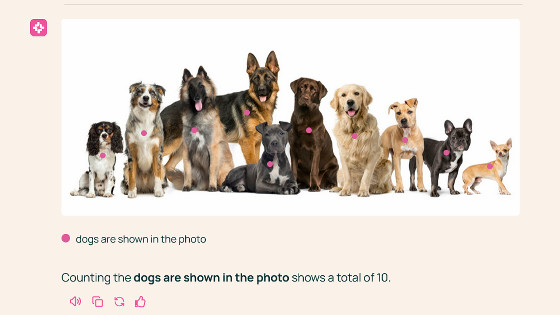

On October 1, 2024, OpenAI released the ability to fine-tune GPT-4o with images. OpenAI said, 'Since we first introduced fine-tuning in GPT-4o, hundreds of thousands of developers have customized models with text-only datasets to improve performance for specific tasks. However, in many cases, fine-tuning a model with text alone does not produce the expected performance gains.'

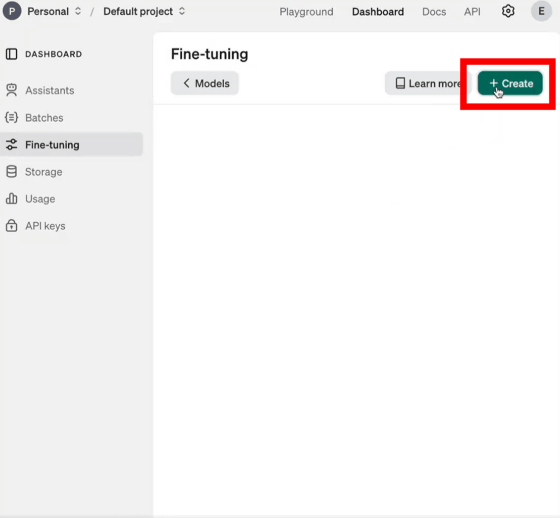

To fine-tune GPT-4o, first access the fine-tuning dashboard and click the “+Create” button on the screen.

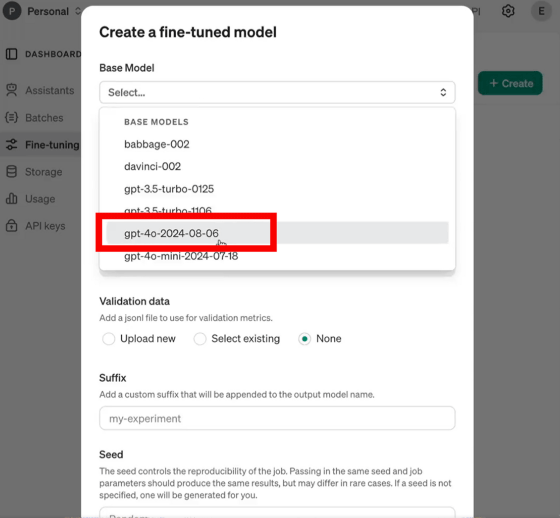

Select 'gpt-4o-2024-08-06' from the base model list.

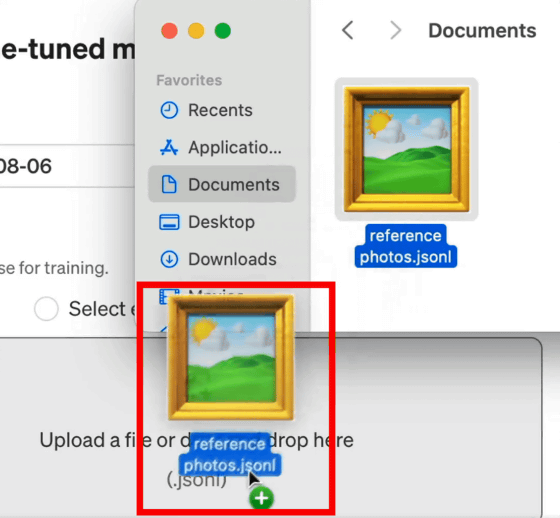

Then select the image dataset file and drag and drop it into the upload form. The image dataset must be in
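As a rough illustration of preparing such a file, the sketch below builds one training example that pairs an image with a question and the desired answer, then writes it out one JSON object per line. It assumes the chat-style JSONL layout described in OpenAI's fine-tuning guide. The helper names (`build_example`, `write_jsonl`, `start_fine_tune`), the example URL, and the prompt text are hypothetical and not taken from the article. `start_fine_tune` is the API counterpart of the dashboard steps and needs the `openai` package plus an API key, so it is defined but not called here.

```python
import json
from pathlib import Path

BASE_MODEL = "gpt-4o-2024-08-06"  # base model named in the article


def build_example(image_url: str, prompt: str, answer: str) -> dict:
    """Return one training example: a user turn (text + image) and the target reply.

    Assumption: the message layout follows the chat format with an
    "image_url" content part, as described in OpenAI's fine-tuning guide.
    """
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(examples: list, path: str) -> Path:
    """Write the examples to disk, one JSON object per line."""
    out = Path(path)
    with out.open("w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
    return out


def start_fine_tune(dataset_path: str) -> str:
    """Upload the dataset and create a fine-tuning job; returns the job ID.

    Imported lazily so the dataset helpers above work without the SDK installed.
    """
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(dataset_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=BASE_MODEL)
    return job.id
```

A dataset would typically be assembled with something like `write_jsonl([build_example(url, "How many lanes are visible?", "3")], "train.jsonl")`, and the resulting file is what gets dropped into the upload form or passed to `start_fine_tune`.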

OpenAI is already working with several partners to use GPT-4o's image fine-tuning capabilities in practice.

After fine-tuning, lane-counting accuracy improved by 20% and the accuracy of locating speed limit signs improved by 13% compared with the base GPT-4o model, enabling the machine learning team to better automate mapping tasks that were previously manual.

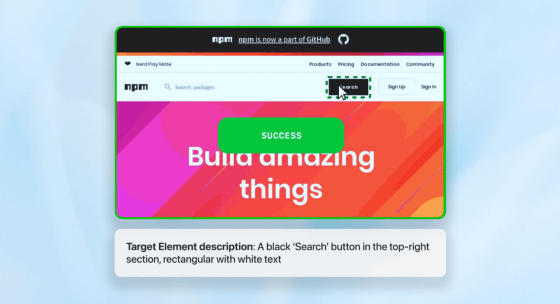

Automat, a company that automates document processing and UI-based business processes, fine-tuned GPT-4o on a screenshot dataset. This improved the model's ability to identify on-screen UI elements from natural language descriptions, raising the success rate of Automat's automated agents from 16.6% to 61.67%. Automat also fine-tuned GPT-4o on 200 unstructured insurance-related documents, improving the score on an information extraction task by 7%.

By fine-tuning GPT-4o using images and code, Coframe was able to improve the model's ability to generate websites with a consistent visual style and correct layout by 26% compared to the base GPT-4o.

Image-based fine-tuning of GPT-4o is supported on the latest model, 'gpt-4o-2024-08-06', and is available to all developers on paid account tiers. Until October 31, 1 million training tokens per day are provided free of charge. From November 1, fine-tuning training will cost $25 (approx. 3,600 yen) per million tokens, and inference with a fine-tuned model will cost $3.75 (approx. 540 yen) per million input tokens and $15 (approx. 2,200 yen) per million output tokens.
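To make the pricing concrete, here is a small sketch that estimates costs from the per-million-token rates quoted above. The function names and the `free_tokens` parameter are illustrative assumptions. How the free daily allowance is actually applied to a given job is not specified in the article, so the sketch simply subtracts whatever allowance the caller passes in.

```python
# Rates quoted in the article, in USD per 1 million tokens.
TRAIN_USD_PER_M = 25.00
INPUT_USD_PER_M = 3.75
OUTPUT_USD_PER_M = 15.00
TOKENS_PER_M = 1_000_000


def training_cost(training_tokens: int, free_tokens: int = 0) -> float:
    """Estimated training cost in USD after subtracting a free allowance.

    Assumption: free_tokens is supplied by the caller, e.g. 1_000_000
    for a single day during the free period.
    """
    billable = max(0, training_tokens - free_tokens)
    return billable * TRAIN_USD_PER_M / TOKENS_PER_M


def inference_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated inference cost in USD for a fine-tuned model."""
    return (
        input_tokens * INPUT_USD_PER_M / TOKENS_PER_M
        + output_tokens * OUTPUT_USD_PER_M / TOKENS_PER_M
    )
```

For example, `training_cost(2_500_000)` comes to $62.50 at the listed rate, while `training_cost(2_500_000, free_tokens=1_000_000)` comes to $37.50. These figures cover only the token charges named in the article.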

in AI, Video, Software, Web Service, Posted by log1h_ik