OpenAI releases 'API that allows developers to incorporate ChatGPT's real-time conversation function into their apps'

On October 1, 2024, OpenAI began offering a public beta of its Realtime API, which lets developers on paid tiers build low-latency multimodal experiences into their apps. This makes real-time conversations with AI possible in a wide range of apps.

Introducing the Realtime API | OpenAI

https://openai.com/index/introducing-the-realtime-api/

Introducing the Realtime API—build speech-to-speech experiences into your applications. Like ChatGPT's Advanced Voice, but for your own app. Rolling out in beta for developers on paid tiers. https://t.co/LQBC33Y22U pic.twitter.com/udDhTodwKl

— OpenAI Developers (@OpenAIDevs) October 1, 2024

Until now, building a voice assistant meant chaining three steps: a speech recognition model transcribed the user's speech, that text was passed to a text model for inference, and the output was played back using a text-to-speech API. This approach lost emotion, emphasis, and accent along the way, and it added noticeable latency.
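The conventional three-stage pipeline can be sketched as follows. This is only an illustration of the data flow: `Speech`, `transcribe`, `generate_reply`, and `synthesize` are hypothetical stand-ins, not real models or OpenAI APIs. The point is that once the audio is reduced to text, information about how something was said is no longer available to the later stages.

```python
from dataclasses import dataclass


@dataclass
class Speech:
    words: str    # what was said
    prosody: str  # how it was said (emotion, emphasis, accent)


def transcribe(speech: Speech) -> str:
    # Stand-in for a speech recognition model: only the words survive.
    return speech.words


def generate_reply(text: str) -> str:
    # Stand-in for a text model: it sees plain text and nothing else.
    return f"You said: {text}"


def synthesize(text: str) -> Speech:
    # Stand-in for a text-to-speech API: the prosody comes from the
    # TTS voice, not from the user's original speech.
    return Speech(words=text, prosody="neutral")


def cascaded_assistant(user_speech: Speech) -> Speech:
    # The three stages run one after another, so their delays add up.
    text_in = transcribe(user_speech)
    text_out = generate_reply(text_in)
    return synthesize(text_out)


if __name__ == "__main__":
    reply = cascaded_assistant(Speech(words="I'm fine", prosody="sarcastic"))
    print(reply)
```

In this sketch the user's "sarcastic" prosody never reaches `generate_reply`, which mirrors the loss of emotion and emphasis described above.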

The Realtime API addresses these problems by streaming audio input and output directly, resulting in a more natural conversational experience. It also handles interruptions automatically, much like 'Advanced Voice Mode,' the ChatGPT feature for paid users.

Under the hood, the Realtime API lets you create a persistent WebSocket connection to exchange messages with GPT-4o. It also supports 'function calling,' so a voice assistant can trigger actions or pull in new context to respond to user requests.

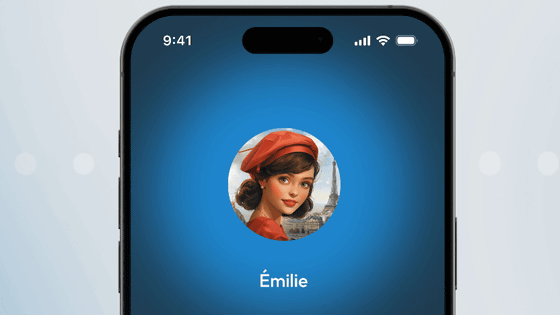
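As a rough sketch of what the WebSocket messages might look like, the Python below builds the JSON events a client could send to register a function and to return its result. The endpoint URL, the `OpenAI-Beta` header, and the event names (`session.update`, `conversation.item.create`) are assumptions based on the public beta documentation and should be checked against the current API reference. The `get_weather` tool is purely hypothetical. No network connection is opened here; the strings would be sent over a WebSocket client of your choice.

```python
import json

# Assumed beta endpoint; the model name is the one given in the article.
REALTIME_URL = "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview"


def build_headers(api_key: str) -> dict:
    # Assumed headers for the beta WebSocket handshake.
    return {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Beta": "realtime=v1",
    }


def session_update_event() -> str:
    # Registers one hypothetical function ("get_weather") with the session
    # so the model can ask the client to call it.
    event = {
        "type": "session.update",
        "session": {
            "tools": [
                {
                    "type": "function",
                    "name": "get_weather",  # hypothetical tool
                    "description": "Look up the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                }
            ],
            "tool_choice": "auto",
        },
    }
    return json.dumps(event)


def function_output_event(call_id: str, result: dict) -> str:
    # Sends the function's result back into the conversation so the
    # model can use it as new context for its spoken reply.
    event = {
        "type": "conversation.item.create",
        "item": {
            "type": "function_call_output",
            "call_id": call_id,
            "output": json.dumps(result),
        },
    }
    return json.dumps(event)


if __name__ == "__main__":
    print(session_update_event())
    print(function_output_event("call_123", {"temp_c": 21}))
```

Because the connection is persistent, events like these can be sent at any point during the conversation rather than once per request.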

For example, the nutrition and fitness coaching app 'Healthify' uses the Realtime API to let users have natural conversations with its AI coach 'Ria,' and, if needed, receive personalized support from a human nutritionist.

In addition, the language learning app Speak is using the Realtime API to enhance its role-playing features to support users' language learning.

We've been working closely with OpenAI for the past few months to test the new Realtime API. I'm excited to share some thoughts on the best way to productize speech-to-speech for language learning, and announce the first thing we've built here, Live Roleplays. pic.twitter.com/cdsVBf9V3x

— Andrew Hsu (@adhsu) October 1, 2024

The Realtime API is available as 'gpt-4o-realtime-preview' from October 1, 2024. Pricing is shown in the table below. According to OpenAI, audio input works out to about $0.06 (about 8.6 yen) per minute, and audio output to about $0.24 (about 34 yen) per minute.

| | Text Input | Text Output | Audio Input | Audio Output |
|---|---|---|---|---|
| Price per 1 million tokens | $5 (about 718 yen) | $20 (about 2,870 yen) | $100 (about 14,300 yen) | $200 (about 28,700 yen) |
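The per-token and per-minute figures can be related with simple arithmetic. The sketch below uses only the prices quoted in this article; the helper names are made up for illustration. The token rates it derives (roughly 600 audio input tokens and 1,200 audio output tokens per minute) are implied by those quoted prices, not figures stated by OpenAI here.

```python
# Prices per 1 million tokens in USD, as quoted in the table above.
PRICE_PER_MILLION = {
    "text_input": 5.0,
    "text_output": 20.0,
    "audio_input": 100.0,
    "audio_output": 200.0,
}


def cost_usd(kind: str, tokens: int) -> float:
    # Cost of a given number of tokens at the quoted per-million price.
    return PRICE_PER_MILLION[kind] * tokens / 1_000_000


def implied_tokens_per_minute(kind: str, usd_per_minute: float) -> float:
    # Token rate that would make the per-minute price match the
    # per-million-token price.
    return usd_per_minute * 1_000_000 / PRICE_PER_MILLION[kind]


if __name__ == "__main__":
    print(round(implied_tokens_per_minute("audio_input", 0.06)))
    print(round(implied_tokens_per_minute("audio_output", 0.24)))
    # Rough cost of a 10-minute call with 5 minutes of speech each way,
    # using the per-minute figures directly.
    print(round(5 * 0.06 + 5 * 0.24, 2))
```

Under these figures, a ten-minute conversation split evenly between user and assistant speech would cost about $1.50 in audio alone, before any text tokens.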

Regarding the safety of the Realtime API, OpenAI states, 'We use multiple layers of protection, including automated monitoring and human review of model inputs and outputs, to mitigate the risk of misuse of the API.' In addition, it advises users, 'Reusing or distributing output from OpenAI's services for spam, misleading purposes, or to harm others is a violation of our usage policy. We actively monitor for potential misuse. We also require developers to clearly communicate to users that they are interacting with AI, unless it is clear from the context.'

Additionally, OpenAI declared that it 'will not train models on any inputs or outputs used in this service without your explicit permission.'

As for future features, OpenAI lists 'adding modalities such as images and video,' 'raising rate limits,' 'official SDK support,' 'introducing prompt caching,' and 'expanding supported models.'

in Software, Posted by log1r_ut