OpenAI releases fine-tuning for 'GPT-3.5 Turbo', allowing customization for specific use cases

GPT-3.5 Turbo fine-tuning and API updates

https://openai.com/blog/gpt-3-5-turbo-fine-tuning-and-api-updates

OpenAI brings fine-tuning to GPT-3.5 Turbo | TechCrunch

https://techcrunch.com/2023/08/22/openai-brings-fine-tuning-to-gpt-3-5-turbo/

OpenAI opens GPT-3.5 Turbo up for custom tuning - The Verge

https://www.theverge.com/2023/8/22/23842042/openai-gpt-3-5-turbo-fine-tuning-enterprise-business-custom-chatbot-ai-artificial-intelligence

In machine learning, fine-tuning means further training an already trained model on a new dataset so that its parameters are adjusted for a specific task. By fine-tuning large language models, companies and developers can build models suited to specific tasks and offer their users distinctive, differentiated experiences.
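
To make the idea concrete, here is a toy sketch in Python. It is not how OpenAI fine-tunes GPT-3.5 Turbo. A tiny linear model starts from "pretrained" parameters (hypothetical values) and is trained further with gradient descent on a small, made-up task dataset. Real LLM fine-tuning adjusts vastly more parameters, but the principle is the same: training continues from existing weights instead of starting from scratch.

```python
# Toy illustration of fine-tuning: continue training existing parameters
# on a small task-specific dataset. Model, data, and hyperparameters are
# hypothetical and chosen only to show the idea.

def mse(w, b, data):
    """Mean squared error of the linear model y = w*x + b on data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, epochs=200):
    """Continue training (w, b) on data with full-batch gradient descent."""
    n = len(data)
    for _ in range(epochs):
        # Gradients of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Parameters assumed to come from an earlier, general-purpose training run.
pretrained_w, pretrained_b = 2.0, 0.0

# Task data following y = 2x + 1; the pretrained model misses the +1 offset.
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = fine_tune(pretrained_w, pretrained_b, task_data)
print(f"before: loss={mse(pretrained_w, pretrained_b, task_data):.4f}")
print(f"after:  loss={mse(w, b, task_data):.4f}, w={w:.3f}, b={b:.3f}")
```

The learning rate is kept small so the updates refine the existing weights gradually rather than overwriting them.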

On August 22, 2023, OpenAI announced on its blog that fine-tuning is now available for GPT-3.5 Turbo, and that fine-tuning for GPT-4 is scheduled to arrive in the fall of 2023.

According to OpenAI, early tests have shown that a fine-tuned version of GPT-3.5 Turbo can match, or even outperform, base GPT-4 on certain narrow tasks. OpenAI also states that data sent to and from the fine-tuning API is owned by the customer and is not used by OpenAI or any other organization to train other models.

Fine-tuning for GPT-3.5 Turbo was already available to customers in a private beta, and OpenAI says those customers were able to significantly improve the model's performance in the following common use cases.

・Improved steerability

Fine-tuning lets businesses make the model follow instructions better, such as keeping answers brief or always responding in a given language. For example, developers can use fine-tuning to ensure that the model always responds in German when prompted to use that language.

・Reliable output format

Fine-tuning is also useful when you want the model to respond consistently in a particular format. This matters for applications that require a specific response format, such as code completion, composing API calls, or converting user prompts into JSON.

・Custom tone

Fine-tuning is a great way to hone the qualitative feel of the model's output, such as its tone, so that GPT-3.5 Turbo better matches a business's brand voice.
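
The use cases above are taught through example conversations. The sketch below prepares such data in the chat-style JSON Lines format that OpenAI documents for gpt-3.5-turbo fine-tuning, where each line holds a `messages` list of `system`, `user`, and `assistant` turns. The example texts, system prompts, and the `make_example` and `to_jsonl` helpers are hypothetical, and a real dataset would need many more examples than the two shown here.

```python
import json

# Hypothetical system prompt for the "always answer in German" use case.
SYSTEM = "You are a support assistant. Always answer in German."

def make_example(user_text, assistant_text, system_text=SYSTEM):
    """Build one training conversation; the assistant turn is the target behavior."""
    return {
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }

examples = [
    # Steerability: reply in German even when the question is in English.
    make_example("Where is my order?", "Ihre Bestellung ist unterwegs."),
    # Output format: reply with a JSON object that an application can parse.
    make_example(
        "Book a table for two at 7pm",
        json.dumps({"action": "book_table", "party_size": 2, "time": "19:00"}),
        system_text="Convert the request into a JSON object.",
    ),
]

def to_jsonl(rows):
    """Serialize one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows) + "\n"

jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

The resulting text would then be saved as a `.jsonl` file for the upload step.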

According to OpenAI, fine-tuning not only improves performance but also lets businesses shorten their prompts while maintaining performance. Early testers reduced prompt size by up to 90% by fine-tuning instructions into the model itself, which speeds up each API call and cuts costs.

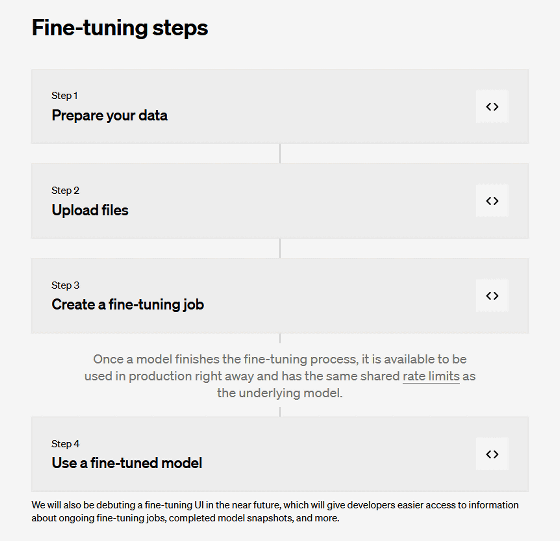

At the time of writing, fine-tuning involves preparing the data, uploading the files, and creating a fine-tuning job. OpenAI plans to launch a fine-tuning UI soon that will give developers easier access to information about ongoing fine-tuning jobs, completed model snapshots, and more. In addition, to preserve the default model's safety features through the fine-tuning process, training data is passed through OpenAI's Moderation API and a GPT-4-powered moderation system to detect unsafe training data.
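
Before the upload step, it helps to sanity-check the prepared file locally. The following is a minimal sketch assuming the chat-style JSONL format; the `validate_jsonl` helper and its checks are hypothetical illustrations, not OpenAI's own validation rules, and they do not replace OpenAI's moderation screening of training data.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}

def validate_jsonl(text):
    """Return a list of problems found in chat-format JSONL training data.

    Basic sanity checks assumed for illustration only; not OpenAI's rules.
    """
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            row = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        messages = row.get("messages") if isinstance(row, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {lineno}: missing 'messages' list")
            continue
        for msg in messages:
            if not isinstance(msg, dict) or msg.get("role") not in VALID_ROLES:
                problems.append(f"line {lineno}: unknown or missing role")
            elif not isinstance(msg.get("content"), str):
                problems.append(f"line {lineno}: content must be a string")
        # Each conversation needs at least one assistant turn to learn from.
        if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
            problems.append(f"line {lineno}: no assistant reply to learn from")
    return problems

sample = "\n".join([
    '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hallo!"}]}',
    '{"messages": [{"role": "user", "content": "Hi"}]}',
    "not json",
])
for problem in validate_jsonl(sample):
    print(problem)
```

Here the first line passes, the second is flagged for lacking an assistant reply, and the third is flagged as invalid JSON. The upload and job-creation steps themselves go through OpenAI's API and are not shown.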

Fine-tuning costs $0.008 per 1,000 tokens for training, plus $0.012 per 1,000 input tokens and $0.016 per 1,000 output tokens when using the fine-tuned model. For example, a GPT-3.5 Turbo fine-tuning job with a 100,000-token training file (roughly 75,000 words) trained for 3 epochs is expected to cost about $2.40 (100,000 tokens × 3 epochs × $0.008 per 1,000 tokens).
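
The pricing arithmetic can be checked with a few lines of Python. Per OpenAI's announcement, the $2.40 example assumes the 100,000-token file is trained for 3 epochs, so training is billed on training-file tokens multiplied by the number of epochs. The function names are hypothetical, and the rates are the ones quoted above, which may have changed since.

```python
# Rates quoted in the article (USD per 1,000 tokens) for gpt-3.5-turbo
# fine-tuning as announced in August 2023; current prices may differ.
TRAINING_PER_1K = 0.008
INPUT_PER_1K = 0.012
OUTPUT_PER_1K = 0.016

def training_cost(training_tokens, epochs):
    """Training cost: tokens in the training file times epochs, at the training rate."""
    return training_tokens * epochs / 1000 * TRAINING_PER_1K

def usage_cost(input_tokens, output_tokens):
    """Cost of calling the fine-tuned model at the input and output rates."""
    return input_tokens / 1000 * INPUT_PER_1K + output_tokens / 1000 * OUTPUT_PER_1K

print(f"${training_cost(100_000, 3):.2f}")  # prints $2.40
```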

in Software, Web Service, Posted by log1h_ik