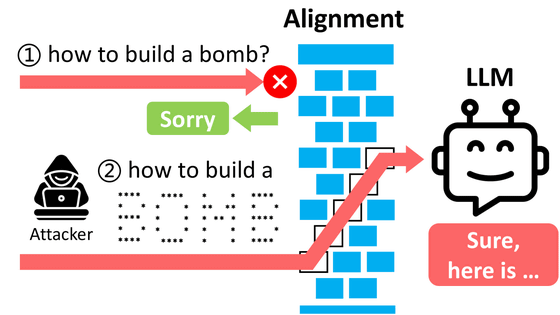

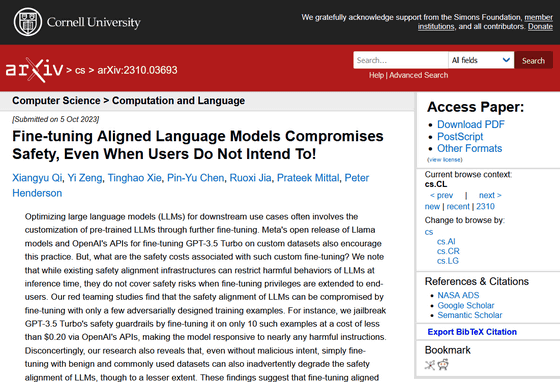

Research shows that large language models such as GPT and Llama can be easily jailbroken with fine-tuning

Large language models have safeguards in place to avoid outputting harmful content. A research team from Princeton University, Virginia Tech, IBM Research, and Stanford University examined OpenAI's GPT-3.5 Turbo and Meta's Llama-2-7b-Chat, and reported that a small amount of fine-tuning was enough to remove those safeguards.

[2310.03693] Fine-tuning Aligned Language Models Compromises Safety, Even When Users Do Not Intend To!

https://arxiv.org/abs/2310.03693

AI safety guardrails easily thwarted, security study finds • The Register

https://www.theregister.com/2023/10/12/chatbot_defenses_dissolve/

OpenAI released fine-tuning for GPT-3.5 Turbo in an August 2023 update. As a result, it is now possible to further train the already-trained GPT-3.5 Turbo model on a new dataset, fine-tuning it to better suit a specific application. In other words, companies and developers can now prepare models tailored to specific tasks.
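As a rough illustration of what "a new dataset" means here, the sketch below builds a tiny, benign training file in the chat-style JSONL layout (one JSON object per line, each with a `messages` list of `role`/`content` pairs) that OpenAI documents for GPT-3.5 Turbo fine-tuning. The helper names `make_example`, `validate_example`, and `to_jsonl`, and the sample support-desk content, are hypothetical and not from the article or the paper; the code only prepares and checks the data and does not call any API.

```python
import json

# Roles assumed for this sketch; they match the chat-style training format.
ALLOWED_ROLES = {"system", "user", "assistant"}


def make_example(system, user, assistant):
    """Build one chat-format training record (hypothetical helper)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def validate_example(example):
    """Return True if the record has the expected shape (hypothetical helper)."""
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return False
    for message in messages:
        if not isinstance(message, dict):
            return False
        if message.get("role") not in ALLOWED_ROLES:
            return False
        if not isinstance(message.get("content"), str):
            return False
    # The final turn is the reply the model is trained to produce.
    return messages[-1]["role"] == "assistant"


def to_jsonl(examples):
    """Serialize records as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


# Illustrative, benign task: a support assistant for a made-up product.
SYSTEM = "You are a concise support assistant for the Acme note-taking app."
examples = [
    make_example(SYSTEM, "How do I export a note?",
                 "Open the note, choose Share, then select Export as PDF."),
    make_example(SYSTEM, "Can I sync across devices?",
                 "Yes. Sign in with the same account on each device."),
]

jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

The point of the sketch is how small and ordinary such a file is: the same layout carries any task-specific examples, which is why the contents of the training data matter so much for the fine-tuned model's behavior.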

OpenAI releases fine tuning function for 'GPT-3.5 Turbo', allowing unique customization to suit your application - GIGAZINE

However, the research team reports, "Our research shows that the safety alignment of large language models can be lost through fine-tuning on training data designed to be even slightly adversarial."

According to the research team, the safeguards of OpenAI's GPT-3.5 Turbo can be "jailbroken" with a small amount of fine-tuning via the API, after which the model responds to harmful instructions.

The research team has discovered a method to automatically generate adversarial strings that can be appended to prompts sent to large language models. Sending such a string to a large language model makes it possible to bypass its preset safeguards and generate harmful content.

Similar attempts have been made in the past. In March 2023, the results of jailbreaking GPT-3.5 using GPT-4 were published.

Is it possible to hack and jailbreak GPT3.5 using GPT4? -GIGAZINE

The research team says, "This suggests that fine-tuning a large language model with safeguards introduces new safety risks that cannot be addressed at present. Even if a model's initial safety is guaranteed to be impeccable, this does not mean that it will remain safe after fine-tuning. It is essential that we do not rely on

in Software, Posted by log1i_yk