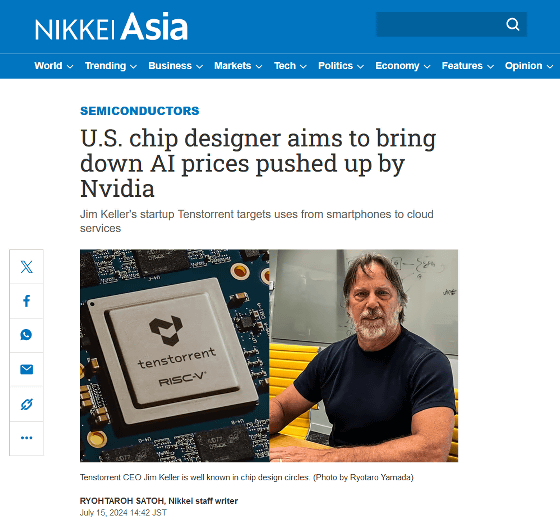

Jim Keller, a legendary engineer who has worked at Apple and AMD, is developing an AI chip aimed at 'markets that NVIDIA is not serving well'

Jim Keller is a legendary engineer who has worked on processors for Apple, AMD, Tesla, and others. In an interview with Nikkei Asia, Keller revealed that Tenstorrent, the AI chip maker he leads as CEO, is developing AI chips aimed at 'markets that NVIDIA is not serving well.'

US chip designer aims to bring down AI prices pushed up by Nvidia - Nikkei Asia

https://asia.nikkei.com/Business/Tech/Semiconductors/US-chip-designer-aims-to-bring-down-AI-prices-pushed-up-by-Nvidia2

Chip design legend Jim Keller aims for Tenstorrent wins in market 'not well served by Nvidia' | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/chip-design-legend-jim-keller-aims-for-tenstorrent-wins-in-markets-not-well-served-by-nvidia

Keller played key roles in developing AMD's Athlon and Zen microarchitectures, Apple's A4 processors, and Tesla's Autopilot chipset.

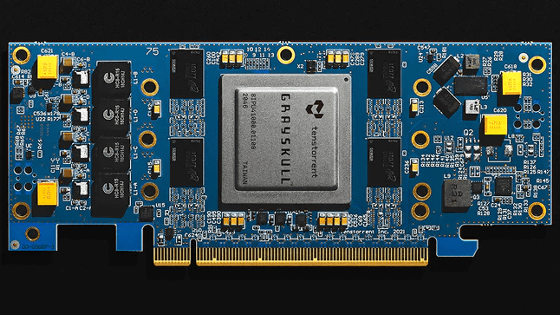

In 2023, Keller became CEO of Tenstorrent, an AI chip startup headquartered in Toronto, Canada. In March 2024, Tenstorrent announced the PCIe expansion cards 'Grayskull e75' and 'Grayskull e150' specialized for AI inference.

Jim Keller's AI chip company 'Tenstorrent' releases PCIe expansion cards 'Grayskull e75' and 'Grayskull e150' specialized for AI inference and also announces collaboration with Japan's LSTC and Rapidus - GIGAZINE

In addition, Tenstorrent is preparing to begin selling its second-generation general-purpose AI processor at the end of 2024. At the time of writing, NVIDIA dominates most of the AI chip market, but Keller told Nikkei Asia, 'There are a lot of markets that NVIDIA doesn't serve well,' and he sees opportunities in the areas NVIDIA is not paying attention to.

AI is used in many different ways in modern society, but with NVIDIA's high-end GPUs costing $20,000 to $30,000 (approximately 3.1 million to 4.7 million yen), companies are looking for cheaper alternatives. Tenstorrent is targeting these customers, claiming that its Galaxy system is three times more efficient than NVIDIA's DGX system and 33% cheaper.
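As a rough check on these figures, the short Python sketch below works out the exchange rate implied by the yen conversion and what the two Galaxy claims would mean if combined. The combined number rests on an assumption the article does not confirm: that 'three times more efficient' and '33% cheaper' can simply be multiplied together into a single efficiency-per-dollar ratio. The variable names are illustrative only.

```python
# Figures quoted in the article.
usd_low, usd_high = 20_000, 30_000        # NVIDIA high-end GPU price range (USD)
jpy_low, jpy_high = 3_100_000, 4_700_000  # the same range as given in yen

# Exchange rate implied by each end of the range (yen per dollar).
implied_rate_low = jpy_low / usd_low
implied_rate_high = jpy_high / usd_high

# Tenstorrent's claims for Galaxy relative to DGX.
efficiency_ratio = 3.0       # "three times more efficient"
price_ratio = 1.0 - 0.33     # "33% cheaper" means paying 67% of the price

# ASSUMPTION: the two claims are independent and can be combined.
efficiency_per_dollar = efficiency_ratio / price_ratio

print(f"Implied rate: {implied_rate_low:.1f} to {implied_rate_high:.1f} yen per dollar")
print(f"Efficiency per dollar vs. DGX: about {efficiency_per_dollar:.1f}x")
```

Under that assumption, the claims would amount to roughly 4.5 times the efficiency per dollar spent. This is a reading of Tenstorrent's own marketing figures, not an independent measurement.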

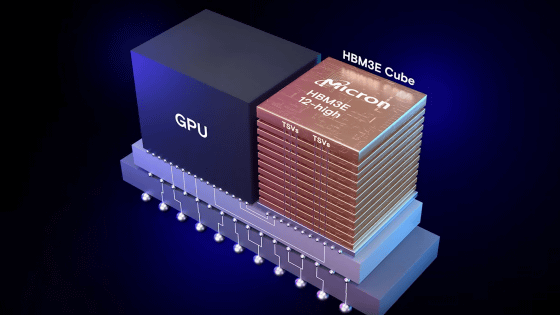

Keller points out that one reason Tenstorrent's processors are cheap is that they do not use high-bandwidth memory (HBM), a type of memory designed to transfer large amounts of data at high speed. HBM is a key component of generative AI chips and has played an important role in the success of NVIDIA's products. However, HBM is also a major contributor to the huge energy consumption and high price of AI chips. Keller said, 'Even people who use HBM are struggling with the cost and design time to build it,' and explained that this is why Tenstorrent decided not to use it.

In a typical AI chipset, the GPU sends data to memory every time it executes a process, which requires the high-speed data transfer that HBM provides. Tenstorrent, however, has designed its chip to significantly reduce this data transfer, making it possible to build an AI processor without HBM.

A distinguishing feature of Tenstorrent's AI processor is that each of its more than 100 cores is equipped with its own CPU. The CPU in each core decides the priority of data processing, which improves overall efficiency. Because each core is relatively independent, the design can be adapted to a wide range of applications by adjusting the number of cores. Keller said, 'At this point, we don't know what size the application that is best suited for AI will be, so our strategy is to build technology that is suitable for a wide range of products.'

in Hardware, Posted by log1h_ik